filmov.tv

Linear discriminant analysis (LDA) - simply explained


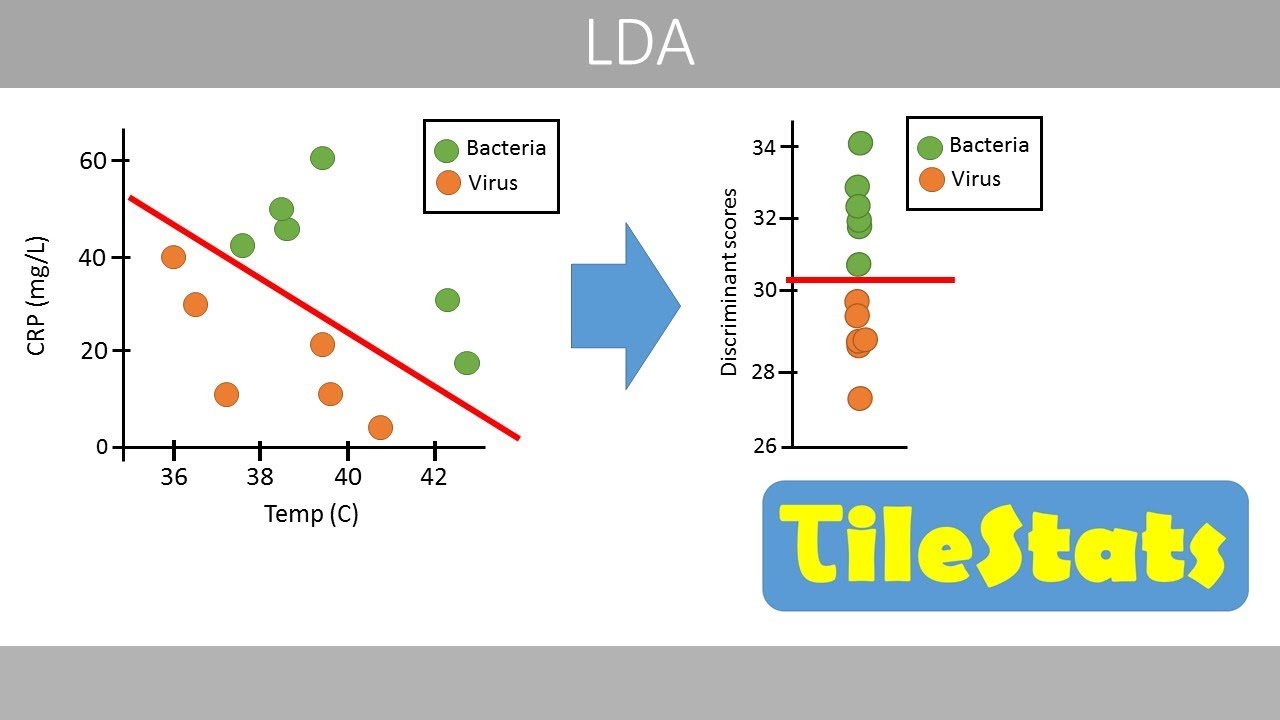

In this video, we will see how we can use LDA to combine variables to predict if someone has a viral or bacterial infection. We will also compare LDA and PCA (07:50), discuss separation (11:50), the math behind LDA (14:40), and how to calculate the standardized coefficients (21:00).
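As a rough illustration of what "combining variables" means here, the following is a minimal sketch of two-class Fisher LDA in plain Python. The two marker values per patient, the function names, and the midpoint threshold (which assumes equal class priors) are all assumptions made for this example and are not taken from the video. The sketch computes the direction w = S_W^{-1}(m_bacterial - m_viral), projects each patient onto it, and classifies by comparing the score with the midpoint of the two projected class means.

```python
# Hypothetical toy data: two made-up marker values per patient (not from the video).
viral = [(2.0, 3.0), (3.0, 3.5), (2.5, 2.0), (3.5, 3.0)]
bacterial = [(6.0, 5.0), (7.0, 6.5), (6.5, 5.5), (7.5, 6.0)]


def mean(points):
    """Return the per-feature mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter(points, m):
    """Return (sxx, sxy, syy) of the 2x2 scatter matrix around mean m."""
    sxx = sum((p[0] - m[0]) ** 2 for p in points)
    sxy = sum((p[0] - m[0]) * (p[1] - m[1]) for p in points)
    syy = sum((p[1] - m[1]) ** 2 for p in points)
    return sxx, sxy, syy


def project(w, p):
    """Discriminant score: the linear combination of the two variables."""
    return w[0] * p[0] + w[1] * p[1]


def fit_lda(class_a, class_b):
    """Fit two-class Fisher LDA; return the weights and a decision threshold."""
    ma, mb = mean(class_a), mean(class_b)
    sa, sb = scatter(class_a, ma), scatter(class_b, mb)
    # Pooled within-class scatter matrix S_W = S_a + S_b.
    sxx, sxy, syy = (sa[i] + sb[i] for i in range(3))
    det = sxx * syy - sxy * sxy
    dx, dy = mb[0] - ma[0], mb[1] - ma[1]
    # w = S_W^{-1} (mb - ma), using the closed-form inverse of a 2x2 matrix.
    w = ((syy * dx - sxy * dy) / det, (-sxy * dx + sxx * dy) / det)
    # Assumption: equal priors, so the cut sits midway between projected means.
    threshold = 0.5 * (project(w, ma) + project(w, mb))
    return w, threshold


def predict(w, threshold, p):
    """Scores above the threshold fall on the class_b (bacterial) side."""
    return "bacterial" if project(w, p) > threshold else "viral"


w, threshold = fit_lda(viral, bacterial)
predictions = [predict(w, threshold, p) for p in viral + bacterial]
print(w, threshold, predictions)
```

The weights in `w` depend on the scale of each variable, which is one reason standardized coefficients are useful when comparing how much each variable contributes.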

Linear discriminant analysis (LDA) - simply explained (0:24:26)

Understand Linear Discriminant Analysis (LDA) in 4 minutes ! (0:04:21)

Linear Discriminant Analysis | LDA | Fisher Discriminant Analysis | FDA Explained by Mahesh Huddar (0:08:33)

Linear discriminant analysis explained | LDA algorithm in python | LDA algorithm explained (0:15:32)

LDA Solved Example | Linear Discriminant Analysis | Fisher Discriminant Analysis by Mahesh Huddar (0:13:54)

Machine Learning 3.2 - Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA) (0:17:59)

Lecture 20- Linear Discriminant Analysis ( LDA) (with Solved Example) (0:11:45)

Linear Discriminant Analysis (LDA) as Dimensionality Reduction Technique (0:04:32)

LDA (Linear Discriminant Analysis) In Python - ML From Scratch 14 - Python Tutorial (0:22:13)

[07] Linear Discriminant Analysis (LDA) (0:49:26)

Machine Learning: What is Discriminant Analysis? (0:02:50)

Linear discriminant analysis LDA example with complete solution | Machine learning | Data mining (0:07:02)

Linear Discriminant Analysis | LDA in Machine Learning | LDA Theory | Satyajit Pattnaik (0:31:51)

Linear Discriminant Analysis - LDA (0:07:46)

STAT115 Chapter 7.4 Linear Discriminant Analysis (LDA) (0:16:44)

Machine Learning | Linear Discriminant Analysis (0:28:04)

Lecture 19 : Linear Discriminant Analysis (0:34:58)

Linear Discriminant Analysis (LDA) vs Principal Component Analysis (PCA) (0:03:42)

Introduction to Linear Discriminant Analysis (LDA) (0:07:09)

What is Linear Discriminant Analysis (LDA) In Machine Learning? (0:02:49)

Linear Discriminant Analysis (LDA) made easy (0:20:24)

Linear discriminant analysis (LDA) - how to use it as a classifier (0:23:46)

Introduction to Machine Learning - 06 - Linear discriminant analysis (1:00:07)

Lec 28: Linear Discriminant Analysis (LDA) (Part I) (0:57:13)