Machine Learning 3.2 - Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA)

We will cover classification models that estimate a probability distribution for each class. For a new observation, we compute the likelihood of each class and assign the observation to the class with the greatest likelihood, weighting by each class's prior probability when the classes are not equally common. Maximum likelihood methods of this kind, such as the LDA and QDA methods in this section, often perform best on data whose classes are well approximated by standard probability distributions. LDA and QDA both model each class as a multivariate Gaussian.
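The following is a minimal NumPy sketch of the procedure described above. It assumes each class is modeled as a multivariate Gaussian, and it scores classes by log likelihood plus log prior. The function names `fit_gaussian_classes`, `log_gaussian`, and `predict` are hypothetical and exist only for illustration; they do not come from the video or from any library. Setting `shared_cov=True` pools one covariance across all classes, which gives LDA. Setting `shared_cov=False` keeps one covariance per class, which gives QDA.

```python
import numpy as np

def fit_gaussian_classes(X, y, shared_cov):
    """Estimate per-class priors, means, and covariances (illustrative helper).

    shared_cov=True  -> LDA: one pooled covariance shared by every class.
    shared_cov=False -> QDA: a separate covariance for each class.
    """
    classes = np.unique(y)
    priors, means, covs = [], [], []
    for k in classes:
        Xk = X[y == k]
        priors.append(len(Xk) / len(X))           # class prior = class frequency
        means.append(Xk.mean(axis=0))             # class mean vector
        covs.append(np.atleast_2d(np.cov(Xk, rowvar=False)))  # unbiased (n_k - 1)
    if shared_cov:
        # Pooled within-class covariance: sum of (n_k - 1) * S_k over (n - K).
        n, K = len(X), len(classes)
        pooled = sum(np.sum(y == k) - 1 for k in []) if False else sum(
            (np.sum(y == k) - 1) * c for k, c in zip(classes, covs)) / (n - K)
        covs = [pooled] * K
    return classes, np.array(priors), np.array(means), covs

def log_gaussian(X, mean, cov):
    """Multivariate normal log density evaluated at each row of X."""
    d = X.shape[1]
    diff = X - mean
    _, logdet = np.linalg.slogdet(cov)
    # Solve cov @ z = diff^T rather than forming the inverse explicitly.
    mahal = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + mahal)

def predict(model, X):
    """Assign each row of X to the class with the largest log likelihood + log prior."""
    classes, priors, means, covs = model
    scores = np.column_stack([
        log_gaussian(X, m, c) + np.log(p)
        for p, m, c in zip(priors, means, covs)
    ])
    return classes[np.argmax(scores, axis=1)]

# Usage on synthetic data: two Gaussian clusters centered at (0, 0) and (4, 4).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal([0, 0], 1.0, size=(100, 2)),
               rng.normal([4, 4], 1.0, size=(100, 2))])
y = np.array([0] * 100 + [1] * 100)

lda = fit_gaussian_classes(X, y, shared_cov=True)
qda = fit_gaussian_classes(X, y, shared_cov=False)
print(predict(lda, np.array([[0.0, 0.0], [4.0, 4.0]])))
print(predict(qda, np.array([[0.0, 0.0], [4.0, 4.0]])))
```

With a shared covariance, the log-determinant and quadratic terms are identical for every class and cancel when two classes are compared. The decision boundaries are therefore linear, which is where LDA gets its name. QDA keeps a separate covariance for each class, so those terms remain and the boundaries are quadratic. For production use, scikit-learn provides `LinearDiscriminantAnalysis` and `QuadraticDiscriminantAnalysis` in `sklearn.discriminant_analysis`.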

Linear Regression in 2 minutes

Artificial Intelligence & Machine learning 3 - Linear Classification | Stanford CS221 (Autumn 20...

Artificial Intelligence & Machine Learning 2 - Linear Regression | Stanford CS221: AI (Autumn 20...

Machine Learning Tutorial 2 - Linear Regression - Machine Learning

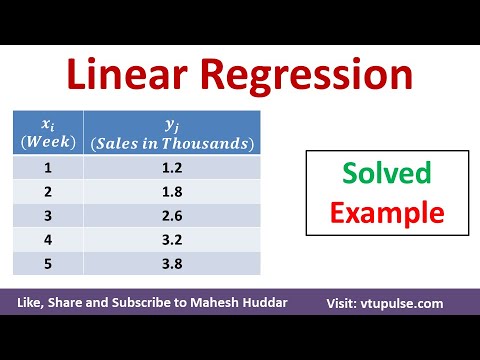

Linear Regression Algorithm – Solved Numerical Example in Machine Learning by Mahesh Huddar

Linear Regression with Python in 60 Seconds #shorts

Support Vector Machine (SVM) in 2 minutes

Machine Learning is just Linear Regression on Steroids

🔴Machine Learning Free Full Course 10 Hours

Multiple Linear Regression Solved Numerical Example in Machine Learning Data Mining by Mahesh Huddar

Machine Learning Tutorial Python - 3: Linear Regression Multiple Variables

Python Machine Learning Tutorial #3 - Linear Regression p.2

All Machine Learning Models Explained in 5 Minutes | Types of ML Models Basics

Multiple Linear Regression By Hand (formula): Solved Problem

K-Fold Cross Validation - Intro to Machine Learning

Machine Learning Tutorial Python - 2: Linear Regression Single Variable

Linear Regression vs. Logistic Regression [in 60 sec.] #shorts

Gilbert Strang: Linear Algebra vs Calculus

Linear Algebra for Machine Learning: Dot product and angle between 2 vectors Lecture 3

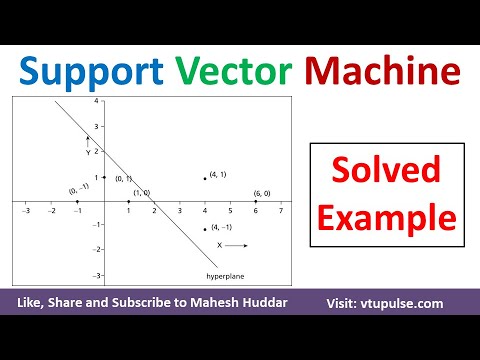

How to draw a hyper plane in Support Vector Machine | Linear SVM – Solved Example by Mahesh Huddar

What is backpropagation really doing? | Chapter 3, Deep learning

But what is a neural network? | Chapter 1, Deep learning

How to Answer Any Question on a Test

When To Use Regression|Linear Regression Analysis|Machine Learning Algorithms
