Depth Anything - Generating Depth Maps from a Single Image with Neural Networks

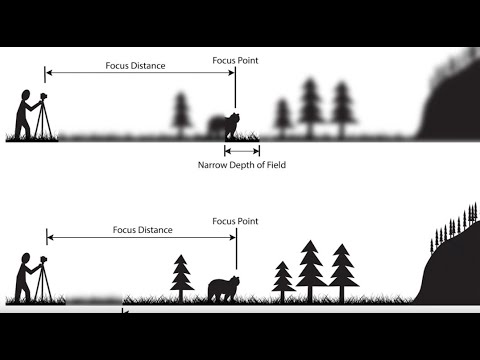

This week we cover the "Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data" paper from TikTok, the University of Hong Kong, Zhejiang Lab, and Zhejiang University. In this paper, the authors build a large dataset of labeled and unlabeled imagery and use it to train a neural network that estimates depth from a single image, without any extra hardware or algorithmic complexity.
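
Mixing labeled images from many sources only works if their depth labels can be compared despite different scales and offsets. The paper handles this with an affine-invariant loss (adopted from MiDaS): each disparity map is shifted by its median and divided by its mean absolute deviation from that median, and the loss is the mean absolute difference between the normalized prediction and the normalized target. Below is a minimal NumPy sketch under that reading. The function names are hypothetical, and the small epsilon is an assumption added here to avoid dividing by zero on constant maps.

```python
import numpy as np

def normalize_disparity(d: np.ndarray) -> np.ndarray:
    """Remove shift (median) and scale (mean absolute deviation) from a disparity map."""
    t = np.median(d)                 # shift estimate
    s = np.mean(np.abs(d - t))       # scale estimate
    return (d - t) / (s + 1e-8)      # epsilon (assumption) guards against constant maps

def affine_invariant_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute error between the normalized prediction and normalized target."""
    return float(np.mean(np.abs(normalize_disparity(pred) - normalize_disparity(target))))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    gt = rng.uniform(0.1, 1.0, size=(4, 4))
    # A prediction that differs from the ground truth only by a positive scale and a shift
    pred = 3.0 * gt + 5.0
    print(affine_invariant_loss(pred, gt))  # near zero: scale and shift are ignored
```

Because only relative structure is penalized, a model trained this way predicts relative (not metric) depth.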

Chapters

0:00 Intro to Depth Anything

2:00 Use Cases

3:10 Real World Example

5:12 What is a Depth Map?

7:00 Crash Course in Traditional Techniques

9:42 Enter Depth Anything

16:00 Learning from the Teacher Model

18:35 DINOv2 Model

19:18 Depth Anything Architecture

21:29 Evaluation

25:55 Ablation Studies

28:22 Data, Perturbations, Feature Loss

31:15 Qualitative Results

33:00 Limitations
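
The "Feature Loss" chapter refers to an auxiliary objective in the paper that keeps the depth model's encoder features close to those of a frozen DINOv2 encoder, measured per pixel with cosine similarity. Pixels whose similarity already exceeds a tolerance margin are left out, so the depth features are not forced to match the frozen ones exactly. The NumPy sketch below illustrates this idea. The function name, the (pixels, channels) input layout, the default margin of 0.85, and averaging over the remaining pixels are all assumptions made for illustration.

```python
import numpy as np

def feature_alignment_loss(student: np.ndarray, frozen: np.ndarray, margin: float = 0.85) -> float:
    """
    Hypothetical sketch of a margin-based feature alignment loss.
    student, frozen: arrays of shape (num_pixels, channels) with per-pixel features
    from the depth model's encoder and from a frozen encoder (DINOv2 in the paper).
    """
    eps = 1e-8
    # L2-normalize each pixel's feature vector
    s = student / (np.linalg.norm(student, axis=1, keepdims=True) + eps)
    f = frozen / (np.linalg.norm(frozen, axis=1, keepdims=True) + eps)
    cos = np.sum(s * f, axis=1)      # per-pixel cosine similarity
    keep = cos <= margin             # pixels already above the margin are excluded
    if not np.any(keep):
        return 0.0                   # every pixel is already aligned closely enough
    return float(1.0 - np.mean(cos[keep]))
```

Identical features give a loss of 0 because every pixel clears the margin, while opposite features give a loss close to 2.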
