Deploying Serverless Inference Endpoints

VIDEO RESOURCES:

OTHER TRELIS LINKS:

TIMESTAMPS:

0:00 Deploying a Serverless API Endpoint

0:17 Serverless Demo

1:19 Video Overview

1:44 Serverless Use Cases

3:31 Setting up a Serverless API

13:31 Inferencing a Serverless Endpoint

17:55 Serverless Costs versus GPU Rental

20:02 Accessing Instructions and Scripts

RELATED VIDEOS:

How I deploy serverless containers for free

Introduction to Amazon SageMaker Serverless Inference | Concepts & Code examples

AWS re:Invent 2021 - Serverless Inference on SageMaker! FOR REAL!

Serverless was a big mistake... says Amazon

AWS On Air ft. Amazon Sagemaker Serverless Inference

AWS On Air ft. Amazon SageMaker Serverless Inference | AWS Events

AWS re:Invent 2021 - {New Launch} Amazon SageMaker serverless inference (Preview)

AWS re:Invent 2022 - Deploy ML models for inference at high performance & low cost, ft AT&T ...

Deploy LLMs using Serverless vLLM on RunPod in 5 Minutes

AWS Summit DC 2022 - Amazon SageMaker Inference explained: Which style is right for you?

#3-Deployment Of Huggingface OpenSource LLM Models In AWS Sagemakers With Endpoints

Deploy Your ML Models to Production at Scale with Amazon SageMaker

Amazon SageMaker ML Inference | Amazon Web Services

AWS On Air San Fran Summit 2022 ft. Amazon SageMaker Serverless Inference

Hugging Face Inference Endpoints live launch event recorded on 9/27/22

AWS re:Invent 2020: How CATCH FASHION built a serverless ML inference service with AWS Lambda

Serverless ML Inference at Scale with Rust, ONNX Models on AWS Lambda + EFS

AWS re:Invent 2020: Deploying PyTorch models for inference using TorchServe

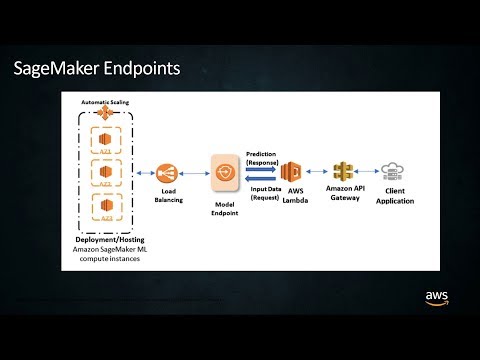

How to build ML Architecture with AWS SageMaker + Lambda + API Gateway | HANDS-ON TUTORIAL

Microservices Explained in 5 Minutes

🆕 HTTPS endpoints for your AWS Lambda functions with Function URLs

How to serve your ComfyUI model behind an API endpoint

Deploy ML model in 10 minutes. Explained
