filmov

tv

AWS re:Invent 2021 - Serverless Inference on SageMaker! FOR REAL!

Показать описание

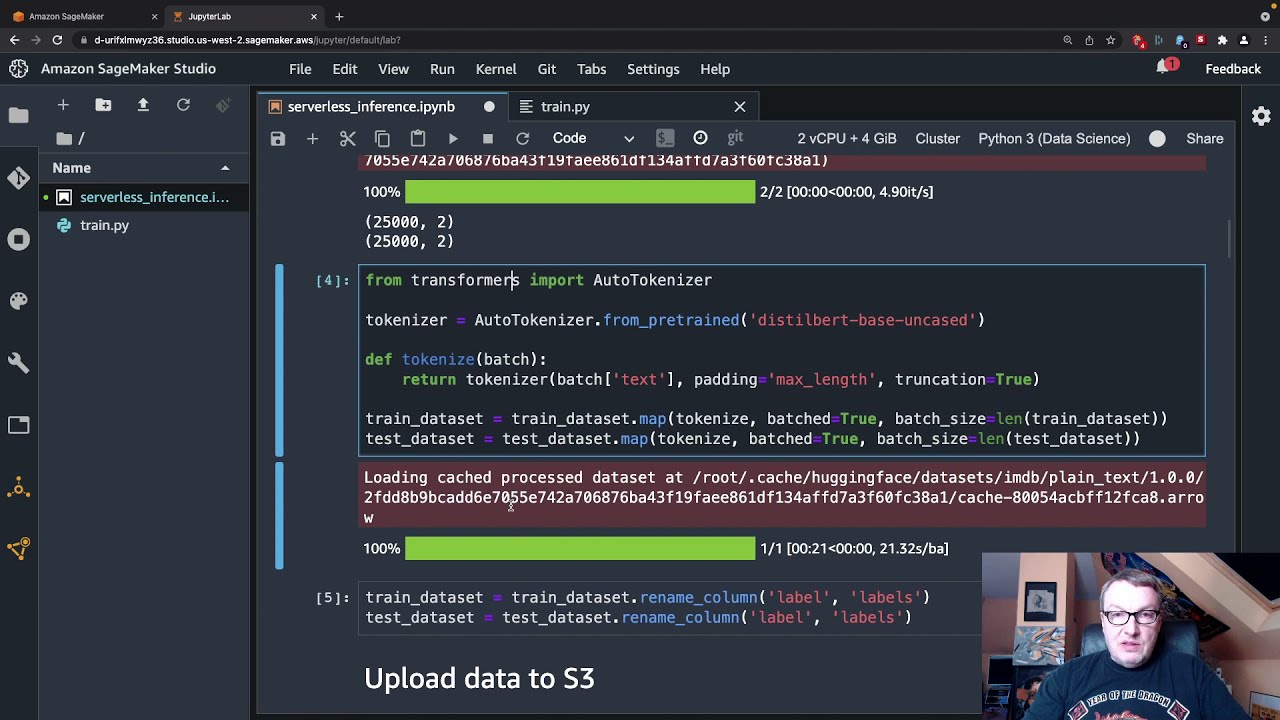

At long last, Amazon SageMaker supports serverless endpoints. In this video, I demo this newly launched capability, named Serverless Inference.

Starting from a pre-trained DistilBERT model on the Hugging Face model hub, I fine-tune it for sentiment analysis on the IMDB movie review dataset. Then, I deploy the model to a serverless endpoint, and I run multi-threaded benchmarks with short and long token sequences. Finally, I plot latency numbers and compute latency quantiles.

*** Erratum: max concurrency factor is 50, not 40.

⭐️⭐️⭐️ Don't forget to subscribe to be notified of future videos ⭐️⭐️⭐️

Starting from a pre-trained DistilBERT model on the Hugging Face model hub, I fine-tune it for sentiment analysis on the IMDB movie review dataset. Then, I deploy the model to a serverless endpoint, and I run multi-threaded benchmarks with short and long token sequences. Finally, I plot latency numbers and compute latency quantiles.

*** Erratum: max concurrency factor is 50, not 40.

⭐️⭐️⭐️ Don't forget to subscribe to be notified of future videos ⭐️⭐️⭐️

AWS re:Invent 2021 - Serverless security best practices

AWS re:Invent 2021 - Deep dive: Large-scale modernization to serverless in action

AWS re:Invent 2021 - {New Launch} Amazon SageMaker serverless inference (Preview)

AWS re:Invent 2021 - Accelerating your serverless journey with AWS Lambda

AWS re:Invent 2021 - What’s new in serverless

AWS re:Invent 2021 - {New Launch} Introducing Amazon Redshift Serverless

AWS re:Invent 2021 - Architecting your serverless applications for hyperscale [REPEAT]

AWS re:Invent 2021 - Getting started building your first serverless application

AWS re:Invent 2021 - Neiman Marcus and Waitrose: Utilizing serverless microservices

AWS re:Invent 2021 - Best practices of advanced serverless developers [REPEAT]

AWS re:Invent 2021 - Building real-world serverless applications with AWS SAM and Capital One

AWS re:Invent 2021 - {New Launch} Introducing Amazon EMR Serverless

AWS re:Invent 2021 - Build high-performance .NET serverless architectures on AWS

AWS re:Invent 2021 - Productizing a serverless MVP

AWS re:Invent 2021 - Best practices for building interactive applications with AWS Lambda

AWS re:Invent 2021 - Inside a working serverless SaaS reference solution

Demo of Amazon Redshift Serverless. re:Invent 2021 recaps

AWS re:Invent 2021 - Building a serverless banking as a service platform on AWS

AWS re:Invent 2021 - Using events and workflows to build distributed applications

AWS re:Invent 2021 - Instant and fine-grained scaling with Amazon Aurora Serverless v2

AWS re:Invent 2021 - AWS storage solutions for containers and serverless applications [REPEAT]

AWS re:Invent 2021 - Reinvent your business for the future with AWS Analytics

AWS re:Invent 2020: Getting started building your first serverless web application

AWS re:Invent 2021 - {New Launch} Introducing Amazon MSK Serverless

Комментарии

0:51:09

0:51:09

0:53:43

0:53:43

0:37:22

0:37:22

0:51:07

0:51:07

0:46:48

0:46:48

1:01:10

1:01:10

0:55:10

0:55:10

0:33:00

0:33:00

0:56:11

0:56:11

0:59:25

0:59:25

0:51:39

0:51:39

0:46:13

0:46:13

0:49:36

0:49:36

0:37:01

0:37:01

0:52:02

0:52:02

0:48:51

0:48:51

0:01:38

0:01:38

0:39:49

0:39:49

0:47:04

0:47:04

0:48:36

0:48:36

0:59:04

0:59:04

1:08:38

1:08:38

0:25:03

0:25:03

0:40:31

0:40:31