
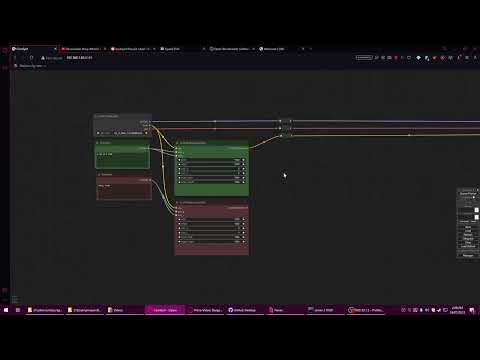

ComfyUI Tutorial Series Ep 29: How to Replace Backgrounds with AI


In this episode, learn how to use ComfyUI to remove and replace backgrounds in product images, portraits, and pet photos! This step-by-step guide covers everything from masking techniques to generating creative backgrounds with AI and prompts.

What’s Included:

- Removing complex or white backgrounds.

- Setting proper dimensions for best results.

- Creating and inverting alpha masks for precise edits.

- Combining AI tools like ControlNet and inpainting for seamless background swaps.

- Fixing imperfections with Photoshop.

- Enhancing images with detailed prompts and upscalers.
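The ideas behind "setting proper dimensions" and "creating and inverting alpha masks" can be illustrated outside ComfyUI. The following is a minimal, hypothetical sketch in plain Python. It is not the workflow from the video. It rests on three assumptions:

- Masks are 2D lists of floats in [0, 1], where 1 marks the subject to keep.
- The target model prefers sizes near one megapixel with sides that are multiples of 64. This is a common SDXL rule of thumb; the episode's exact sizes are not given here.
- The helper names (`snap_dimensions`, `invert_mask`, `composite`) are illustrative only.

Inverting the subject mask yields the background region that inpainting regenerates. Compositing then blends the original subject over the new background.

```python
from typing import List, Tuple

# Illustrative types (assumed): a mask is a 2D grid of floats in [0, 1],
# an image is a 2D grid of RGB tuples with channel values in [0, 1].
Mask = List[List[float]]
Pixel = Tuple[float, ...]
Image = List[List[Pixel]]


def snap_dimensions(width: int, height: int,
                    target_pixels: int = 1024 * 1024,
                    multiple: int = 64) -> Tuple[int, int]:
    """Rescale to roughly target_pixels while keeping the aspect ratio,
    then round each side to the nearest multiple (assumed: 64, ~1 MP)."""
    scale = (target_pixels / (width * height)) ** 0.5
    new_w = max(multiple, round(width * scale / multiple) * multiple)
    new_h = max(multiple, round(height * scale / multiple) * multiple)
    return new_w, new_h


def invert_mask(mask: Mask) -> Mask:
    """Turn a subject mask (1 = keep) into a background mask (1 = regenerate)."""
    return [[1.0 - v for v in row] for row in mask]


def composite(subject: Image, background: Image, subject_mask: Mask) -> Image:
    """Alpha-blend per channel: out = m * subject + (1 - m) * background."""
    out: Image = []
    for s_row, b_row, m_row in zip(subject, background, subject_mask):
        row = []
        for s, b, m in zip(s_row, b_row, m_row):
            row.append(tuple(m * sc + (1.0 - m) * bc for sc, bc in zip(s, b)))
        out.append(row)
    return out


if __name__ == "__main__":
    print(snap_dimensions(1200, 800))                  # (1280, 832)
    print(invert_mask([[1.0, 0.0], [0.25, 0.5]]))      # [[0.0, 1.0], [0.75, 0.5]]
    print(composite([[(1.0, 0.0, 0.0)]], [[(0.0, 0.0, 1.0)]], [[0.5]]))
```

Here a 1200×800 photo snaps to 1280×832, which keeps roughly the same aspect ratio at about one megapixel. A soft mask value of 0.5 blends red and blue evenly at that pixel, which is why partially transparent edges (hair, fur) matter when compositing.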

Other Episodes to Watch: Episodes 14, 19, and 22

Get the workflows and instructions from Discord

Check Other Episodes

Unlock exclusive perks by joining our channel:

#replacebackground #comfyui #aitutorial


Related videos:

ComfyUI Tutorial Series Ep17 Flux LoRA Explained! Best Settings & New UI

ComfyUI Tutorial Series: Ep19 - SDXL & Flux Inpainting Tips with ComfyUI

DNB comfyui-animatediff #animatediff #comfyui

ComfyUI Tutorial Series: Ep21 - How to Use OmniGen in ComfyUI

ComfyUI Tutorial Series: Ep07 - Working With Text - Art Styles Update

ComfyUI Advanced Understanding Part 3

ComfyUI Tutorial Series: Ep08 - Flux 1: Schnell and Dev Installation Guide

How to make AI comic page under few minutes | REUPLOAD

Stable Diffusion In The Cloud #stablediffusion #ai

STOP Using Automatic1111 and ComfyUI for Stable diffusion SDXL. New best alternative SwarmUI! +Colab

Easy Face Swaps in ComfyUI with Reactor

SDXL ComfyUI img2img - A simple workflow for image 2 image (img2img) with the SDXL diffusion model

Top 15 Greatest Manga of all time

How To Install ComfyUI And The ComfyUI Manager

ComfyUI Tutorial For Beginners | Work Flow Part 2

ComfyUI SDXL Basic Setup Part 1

Comfyui Wizarding 101 #comfyui #artificialintelligence #animation

How to View Ksampler Preview Images in ComfyUI | Easy Guide

ComfyUI Tutorial - How to Install ComfyUI on Windows, RunPod & Google Colab | Stable Diffusion S...

ComfyUI and Chill

COMFYUI WORKFLOW INCLUDED (VIDEO TO VIDEO) stable diffusion (vide processed)

How To Install ComfyUI and Run SDXL on Low GPUs

Easy Solution for 'Module 'cv2' Has No Attribute 'Resize'' Error in Co...
