Kubernetes pod autoscaling for beginners

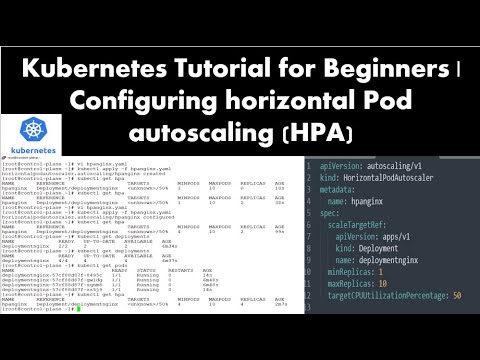

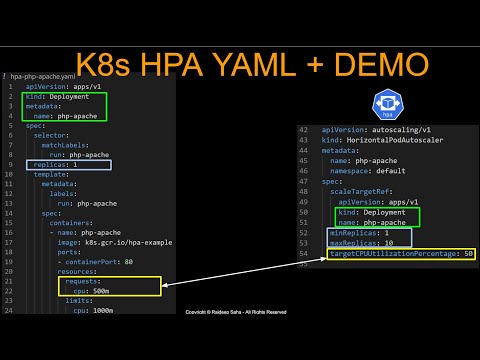

In this episode, we're taking a look at how to scale pods on Kubernetes based on CPU or memory usage. This feature in Kubernetes is called the Horizontal Pod Autoscaler.
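To get a feel for how the Horizontal Pod Autoscaler decides on a replica count, here is a minimal Python sketch of the scaling rule described in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, the parameter names and the default bounds are assumptions made for illustration, and the sketch ignores the tolerance band and stabilization behaviour of the real controller.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Approximate the HPA replica calculation (illustrative only).

    Utilization values are percentages, e.g. 90 means 90% of the requested CPU.
    min_replicas and max_replicas are hypothetical bounds for this sketch.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    # Scale the current replica count by the ratio of observed to target usage.
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 2 pods at 90% average CPU with a 50% target: scale up.
    print(desired_replicas(2, 90, 50))
    # 4 pods at 20% average CPU with a 50% target: scale down.
    print(desired_replicas(4, 20, 50))
```

With these inputs, two pods running well above the target are scaled up, and four mostly idle pods are scaled down, always within the minimum and maximum bounds.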

Before scaling, it's important to understand the resource usage of the service you wish to scale.

We take a look at resource requests and limits and how they play a key role in autoscaling.
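Resource requests matter because the autoscaler expresses CPU and memory utilization as a percentage of what the pods request, so a pod with no request has nothing to measure against. The Python sketch below shows that arithmetic under simple assumptions: the function name is hypothetical, values are in CPU millicores, and every pod is assumed to have the same request.

```python
def average_utilization_percent(pod_usages_millicores, request_millicores):
    """Return average utilization as a percentage of the requested CPU.

    pod_usages_millicores: observed usage per pod, in millicores.
    request_millicores: the CPU request each pod declares (assumed identical).
    """
    if request_millicores <= 0:
        # Without a request there is no baseline to compute a percentage from.
        raise ValueError("a positive resource request is required")
    if not pod_usages_millicores:
        raise ValueError("at least one pod is required")
    total_usage = sum(pod_usages_millicores)
    total_requested = request_millicores * len(pod_usages_millicores)
    return 100.0 * total_usage / total_requested


if __name__ == "__main__":
    # Two pods using 250m and 350m, each requesting 500m.
    print(average_utilization_percent([250, 350], 500))
```

In this example the two pods together use 600m out of 1000m requested, so a 50% target would be exceeded while a 70% target would not.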

Check out the source code below 👇🏽 and follow along 🤓

Also, if you want to support the channel further, become a member 😎

Check out "That DevOps Community" too

Source Code 🧐

--------------------------------------------------------------

If you are new to Kubernetes, check out my getting started playlist on Kubernetes below :)

Kubernetes Guide for Beginners:

---------------------------------------------------

Kubernetes Monitoring Guide:

-----------------------------------------------

Kubernetes Secret Management Guide:

--------------------------------------------------------------

Like and Subscribe for more :)

Follow me on socials!

Music:
