NVIDIA TensorRT 8 Released Today: High Performance Deep Neural Network Inference

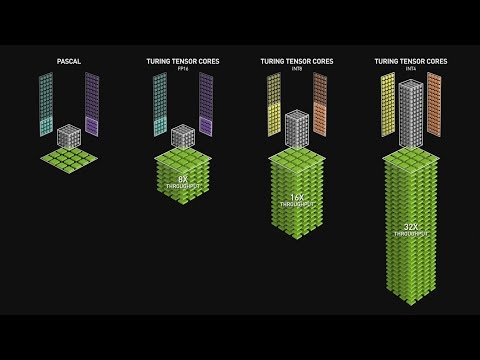

NVIDIA TensorRT enables high-performance inference for neural networks trained in TensorFlow and PyTorch. Today NVIDIA released version 8 of the framework. The new version adds support for sparsity, an optimization that prunes weak connections that contribute little to the network's output. TensorRT 8 also adds transformer optimizations, which NVIDIA showcased with its BERT-Large inference results.
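To make the idea of pruning weak connections concrete, here is a minimal sketch in plain Python. It assumes the 2:4 structured pattern that NVIDIA documents for Ampere GPUs (in every group of four weights, the two with the smallest magnitude are set to zero). The function name `prune_2_of_4` and the sample weights are hypothetical, and this is not TensorRT's own API; a real workflow would also fine-tune the network after pruning, which is not shown.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude weights in each group of four.

    Illustrative only: `weights` is a flat list whose length must be a
    multiple of 4. This mimics the 2:4 sparsity pattern, not TensorRT itself.
    """
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")

    pruned = list(weights)  # work on a copy, leave the input untouched
    for start in range(0, len(pruned), 4):
        group = range(start, start + 4)
        # Indices of the two strongest connections in this group
        keep = sorted(group, key=lambda i: abs(pruned[i]), reverse=True)[:2]
        for i in group:
            if i not in keep:
                pruned[i] = 0.0  # drop the weak connection
    return pruned


# Hypothetical weights for two groups of four connections
weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.8, 0.01]
sparse_weights = prune_2_of_4(weights)
print(sparse_weights)
```

Half of the weights end up as zeros in a regular pattern, which is what lets sparsity-aware hardware skip them during inference.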

Getting Started with TensorRT 8:

Installation Instructions for Windows, Linux and Cloud:

0:44 Quantized Network (QAT)

0:55 Sparsity

1:46 Setup TensorRT

3:10 Using the TensorRT 8 Jupyter Notebook

6:21 Query BERT

6:54 Ask BERT Your Own Question

8:00 BERT Weaknesses

* Follow Me on Social Media!
