filmov

tv

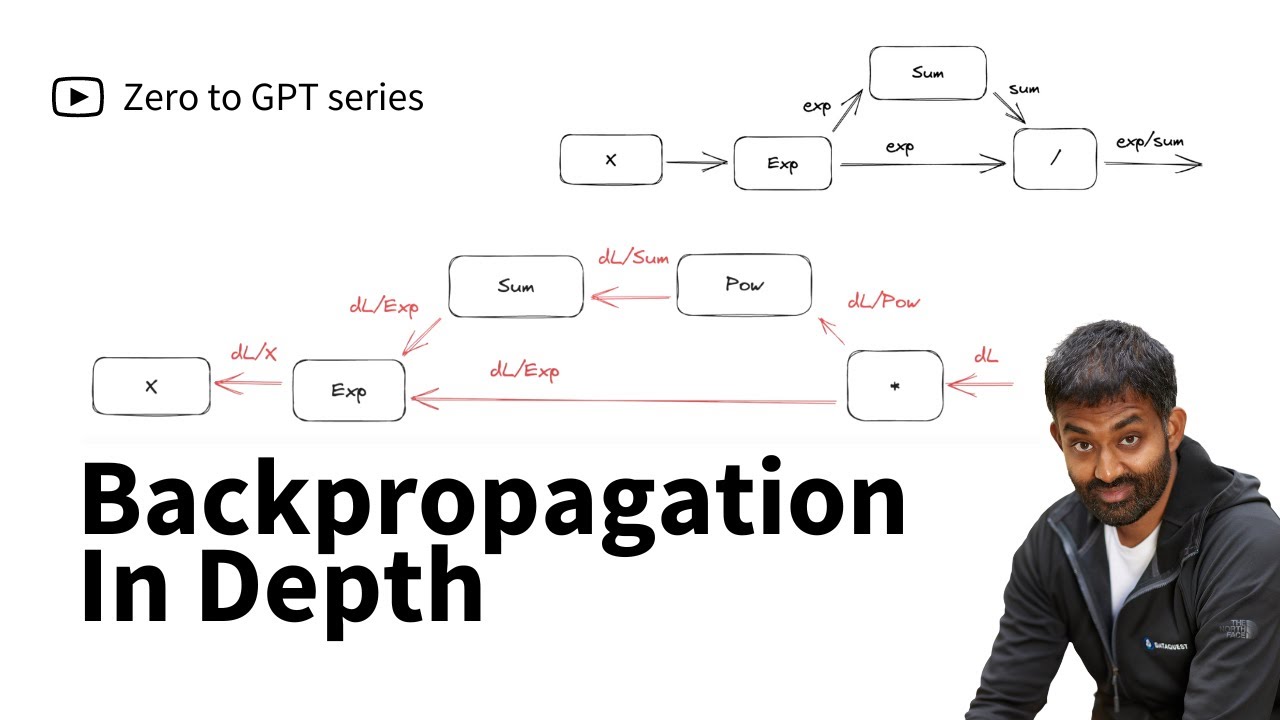

Backpropagation In Depth

In this video, we'll take a deep dive into backpropagation to understand how data flows in a neural network. We'll learn how to break functions into operations, then use those operations to build a computational graph. At the end, we'll build a miniature PyTorch to implement a neural network.
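To make "breaking a function into operations" concrete, here is a minimal sketch of a staged softmax in plain Python. It is not the video's code: the function names and the choice of stages (shift, exponentiate, sum, divide) are assumptions made for illustration. The backward pass walks the same stages in reverse, and the result is checked against the well-known analytic softmax gradient.

```python
import math

def softmax_forward(logits):
    """Staged softmax: keep the intermediate values needed by the backward pass."""
    biggest = max(logits)
    shifted = [x - biggest for x in logits]   # stage 1: shift for numerical stability
    exps = [math.exp(s) for s in shifted]     # stage 2: exponentiate
    total = sum(exps)                         # stage 3: sum
    probs = [e / total for e in exps]         # stage 4: divide
    return probs, (exps, total)

def softmax_backward(grad_probs, cache):
    """Apply the chain rule one stage at a time, from the output back to the input."""
    exps, total = cache
    # stage 4 (divide): d(e_i/total)/d(e_i) = 1/total, d(e_i/total)/d(total) = -e_i/total^2
    grad_exps = [g / total for g in grad_probs]
    grad_total = sum(-g * e / total ** 2 for g, e in zip(grad_probs, exps))
    # stage 3 (sum): the gradient of the total flows equally to every exponent
    grad_exps = [ge + grad_total for ge in grad_exps]
    # stage 2 (exp): d(exp(s))/d(s) = exp(s)
    grad_shifted = [ge * e for ge, e in zip(grad_exps, exps)]
    # stage 1 (shift): softmax is unchanged by a constant shift, so the
    # contribution through max() sums to zero and is omitted here
    return grad_shifted

def softmax_backward_analytic(grad_probs, probs):
    """Closed form: grad_x_i = p_i * (g_i - sum_j g_j * p_j)."""
    dot = sum(g * p for g, p in zip(grad_probs, probs))
    return [p * (g - dot) for g, p in zip(grad_probs, probs)]

# Example inputs (arbitrary values chosen for illustration)
probs, cache = softmax_forward([1.0, 2.0, 3.0])
upstream = [1.0, 0.0, 0.0]
staged = softmax_backward(upstream, cache)
analytic = softmax_backward_analytic(upstream, probs)
```

The staged and analytic results should agree up to floating-point error, which is a handy sanity check when writing a backward pass by hand.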

Chapters:

0:00 Introduction

6:19 Staged softmax forward pass

9:24 Staged softmax backward pass

23:19 Analytic softmax

29:30 Softmax computational graph

42:26 Neural network computational graph
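The computational-graph chapters can be illustrated with a very small sketch of reverse-mode automatic differentiation. This is not the miniature PyTorch built in the video; the `Node` class and its methods are hypothetical names chosen for this example, and it handles scalars only.

```python
class Node:
    """A scalar in a computational graph that remembers how it was produced."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        self.parents = parents
        self.backward_fn = None  # pushes this node's gradient to its parents

    def __add__(self, other):
        out = Node(self.value + other.value, (self, other))
        def backward_fn():
            # addition passes the gradient through unchanged
            self.grad += out.grad
            other.grad += out.grad
        out.backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Node(self.value * other.value, (self, other))
        def backward_fn():
            # each factor receives the other factor times the upstream gradient
            self.grad += other.value * out.grad
            other.grad += self.value * out.grad
        out.backward_fn = backward_fn
        return out

    def relu(self):
        out = Node(max(0.0, self.value), (self,))
        def backward_fn():
            # gradient flows only where the input was positive
            self.grad += (1.0 if self.value > 0 else 0.0) * out.grad
        out.backward_fn = backward_fn
        return out

    def backward(self):
        # topologically order the graph, then run the chain rule in reverse
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node.backward_fn is not None:
                node.backward_fn()

# One neuron with a single input: y = relu(w * x + b)
x, w, b = Node(2.0), Node(3.0), Node(-1.0)
y = (w * x + b).relu()
y.backward()
```

After `y.backward()`, each of `x.grad`, `w.grad`, and `b.grad` holds the derivative of `y` with respect to that node. Gradients are accumulated with `+=` so that a node used in more than one place receives the sum of its contributions.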

This video is part of our new course, Zero to GPT, a guide to building your own GPT model from scratch. The course teaches deep learning skills from the ground up. If you're a complete beginner, you can start with the prerequisites we offer at Dataquest.

If you're dreaming of building deep learning models, this course is for you.

Best of all, you can access the course for free while it's still in beta!

Sign up today!
