100% Offline ChatGPT Alternative?

In this video I show how I installed an open-source large language model (LLM) called h2oGPT on my local computer for 100% private, 100% local chat with a GPT.

Links

Timeline:

00:00 100% Local Private GPT

01:01 Try h2oGPT Now

02:03 h2oGPT GitHub and Paper

03:11 Model Parameters

04:18 Falcon Foundational Models

06:34 Cloning the h2oGPT Repo

07:30 Installing Requirements

09:48 Running CLI

11:13 Running h2oGPT UI

12:20 Linking to Local Files

14:14 Why Open Source LLMs?

Links to my stuff:
100% Offline ChatGPT Alternative?

Jan is an open-source ChatGPT alternative that runs 100% offline on your computer

100 Offline ChatGPT Alternative

This new AI is powerful and uncensored… Let’s run it

Forget ChatGPT, Try These 7 Free AI Tools!

DIE BESTE CHATGPT ALTERNATIVE - Kostenloser Offline Chatbot für Windows und Mac mit GPT4All

This Unknown AI Will Blow Your Mind! BYE CHATGPT…

Local ChatGPT FREE on Your Laptop! 🤯 GPT4All 1-Click Install - Local, Offline, ChatGPT

Download ChatGPT Alternative for PC (Free, Offline & Cross Platform)

Open Source ChatGPT Alternative That Runs 100% Offline !! [ JAN AI ]

I Tricked ChatGPT to Think 9 + 10 = 21

Don't use ChatGPT until you've watched this video!

Run a GOOD ChatGPT Alternative Locally! - LM Studio Overview

THE BEST ALTERNATIVE TO CHATGPT LLM ARTIFICIAL INTELLIGENCE. 100% PRIVACY OFFLINE

LocalGPT: OFFLINE CHAT FOR YOUR FILES [Installation & Code Walkthrough]

most dangerous Virus in Windows 10

Using ChatGPT with YOUR OWN Data. This is magical. (LangChain OpenAI API)

A ChatGPT Alternative That's Free & Open Source!

ChatGPT wird dumm. Die beste Alternative: FreedomGPT #chatgpt #feelsillegal

How To Install PrivateGPT - Chat With PDF, TXT, and CSV Files Privately! (Quick Setup Guide)

GPT4ALL: Die Offline-Alternative zu ChatGPT?

Open Source Chatgpt Alternative 100% Free

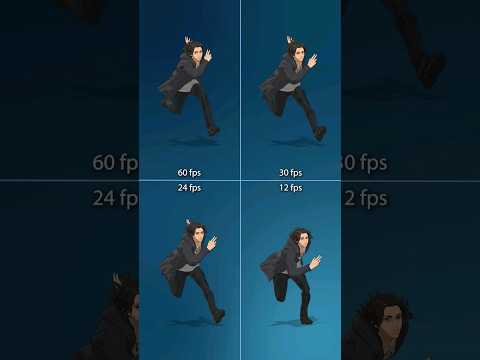

fps comparison, can you notice the difference? #60fps #animation #attackontitan #shingekinokyojin

I Can Save You Money! – Raspberry Pi Alternatives
