filmov.tv

AI Just Solved a 53-Year-Old Problem! | AlphaTensor, Explained

In a year when artificial intelligence has advanced significantly, AlphaTensor is the most exciting breakthrough we have seen.

This video briefly introduces AlphaTensor and what it means to us.

📚 My 3 favorite Machine Learning books:

Disclaimer: Some of the links included in this description are affiliate links where I'll earn a small commission if you purchase something. There's no cost to you.

Google DeepMind's AlphaProof MASSIVE MATH BREAKTHROUGH - AI teaches itself mathematical proofs

AI to the Rescue: Solving Problems Effortlessly 💪💻

AlphaProof & AlphaGeometry 2 achieve a silver-medal score in IMO! (Explainer)

DeepMind’s game-playing AI has beaten a 50-year-old record in computer science

How AI Discovered a Faster Matrix Multiplication Algorithm

WHY I HATE MATH 😭 #Shorts

AI can solve problems, but some worry about its dangers.

AI Explained: Productionizing GenAI at Scale

Solve Any Math Problem in 5 SECONDS😲🔥 | AI Tools #viral #shorts

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

3 AI Websites That Will Blow your Mind!

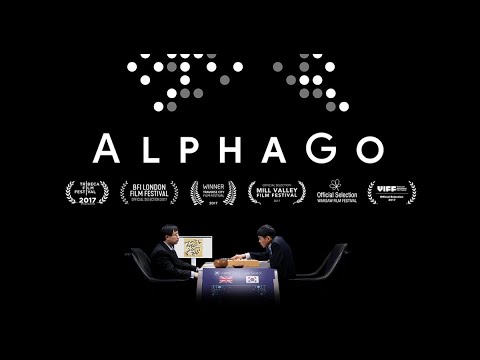

AlphaGo - The Movie | Full award-winning documentary

solving tricky math problem using chatGPT #chatgpt #chatgpt3 #chatgpttutorial #ai #aiforall #maths

Stunning AI shows how it would kill 90%. w Elon Musk.

DeepMind's AlphaTensor AI is a Game-changer !

How AI Can Solve OUR Everyday Problems

chat gpt solved cse 3rd sem math question 😱🤧 #chatgpt

Will Your Code Write Itself?

ChatGPT helped solve a mysterious murder case | Box of ChatGPT

Science rejuvenates woman's skin cells to 30 years younger - BBC News

Forget ChatGPT, Try These 7 Free AI Tools!

Unlocking the Secret to Solving Problems with AI - You Won't Believe What Happens Next! #ai

CEO Of Microsoft AI: AI Is Becoming More Dangerous And Threatening! - Mustafa Suleyman
