Nesterov Accelerated Gradient NAG Optimizer

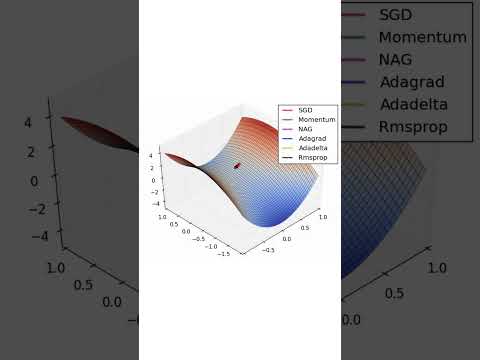

The Nesterov Accelerated Gradient (NAG) optimizer is an optimization algorithm used in machine learning to speed up gradient descent. It refines the standard momentum method with a "look-ahead" step. Instead of computing the gradient at the current parameters, it computes it at the position the accumulated momentum is about to carry them to, that is, the current parameters shifted by a fraction of the previous update step. Because each correction is based on where the parameters are heading rather than where they are, NAG reduces overshooting and oscillation during optimization, which often leads to faster convergence toward a minimum of the loss function. It is widely used for training deep neural networks, whose loss landscapes can be complex.
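A minimal sketch of the look-ahead update, written in plain Python so it runs without extra libraries. It assumes one common formulation of NAG: $v_t = \gamma v_{t-1} + \eta \nabla f(\theta_{t-1} - \gamma v_{t-1})$ and $\theta_t = \theta_{t-1} - v_t$, where $\gamma$ is the momentum coefficient and $\eta$ the learning rate. Classical momentum is identical except that the gradient is taken at $\theta_{t-1}$. The function name `momentum_descent`, the `nesterov` flag, the test function $f(x, y) = \tfrac12(x^2 + 25y^2)$, and the values `lr=0.02`, `gamma=0.9` are illustrative choices, not taken from the video.

```python
from typing import Callable, List

Vector = List[float]
GradFn = Callable[[Vector], Vector]


def momentum_descent(grad: GradFn, theta0: Vector, lr: float = 0.02,
                     gamma: float = 0.9, steps: int = 200,
                     nesterov: bool = True) -> List[Vector]:
    """Return the iterates theta_0 .. theta_steps (hypothetical helper).

    nesterov=True  -> NAG: gradient evaluated at the look-ahead point.
    nesterov=False -> classical momentum: gradient evaluated at theta.
    """
    theta = list(theta0)
    v = [0.0] * len(theta)  # velocity (accumulated update), starts at zero
    path = [list(theta)]
    for _ in range(steps):
        if nesterov:
            # Look ahead to where the previous velocity would carry theta.
            point = [t - gamma * vi for t, vi in zip(theta, v)]
        else:
            point = theta
        g = grad(point)
        # v_t = gamma * v_{t-1} + lr * grad(point)
        v = [gamma * vi + lr * gi for vi, gi in zip(v, g)]
        # theta_t = theta_{t-1} - v_t
        theta = [t - vi for t, vi in zip(theta, v)]
        path.append(list(theta))
    return path


# Illustrative ill-conditioned quadratic (an assumption, not from the source):
# f(x, y) = 0.5 * (x**2 + 25 * y**2), minimum at (0, 0).
CURVATURES = (1.0, 25.0)


def loss(p: Vector) -> float:
    return 0.5 * sum(c * pi * pi for c, pi in zip(CURVATURES, p))


def grad(p: Vector) -> Vector:
    return [c * pi for c, pi in zip(CURVATURES, p)]


if __name__ == "__main__":
    for name, flag in (("momentum", False), ("NAG", True)):
        path = momentum_descent(grad, [1.0, 1.0], nesterov=flag)
        worst_tail = max(loss(p) for p in path[-20:])
        print(f"{name:8s} final loss {loss(path[-1]):.3e}, "
              f"max loss over last 20 steps {worst_tail:.3e}")
```

On a quadratic like this one, each coordinate with curvature $\lambda$ can be analysed separately. While the iterates oscillate, classical momentum shrinks them by a factor of about $\sqrt{\gamma}$ per step regardless of $\lambda$, whereas the look-ahead gradient gives a factor of about $\sqrt{\gamma(1 - \eta\lambda)}$, which is why the NAG run settles faster here. Treat this as an illustration on one toy problem, not a general guarantee.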

Related videos:

- Nesterov Accelerated Gradient (NAG) Explained in Detail | Animations | Optimizers in Deep Learning (0:27:50)
- MOMENTUM Gradient Descent (in 3 minutes) (0:03:18)
- Lecture 43 : Optimisers: Momentum and Nesterov Accelerated Gradient (NAG) Optimiser (0:27:52)
- Nesterov Accelerated Gradient (NAG) Optimizer Explained & It's Derivative (0:19:50)
- Deep Learning(CS7015): Lec 5.5 Nesterov Accelerated Gradient Descent (0:11:59)
- Nesterov Accelerated Gradient from Scratch in Python (0:12:55)
- Accelerate Your ML Models: Mastering SGD with Momentum and Nesterov Accelerated Gradient (0:10:39)
- CS 152 NN—8: Optimizers—Nesterov with momentum (0:02:18)
- Coding Nesterov Accelerated Gradient (NAG) Optimizer in PyTorch: Step-by-Step Guide (0:06:33)
- Nesterov's Accelerated Gradient (0:04:13)
- Nesterov Accelarated Gradient Descent (0:14:05)
- ODE of Nesterov's accelerated gradient (0:08:52)
- Optimization for Deep Learning (Momentum, RMSprop, AdaGrad, Adam) (0:15:52)
- 23. Accelerating Gradient Descent (Use Momentum) (0:49:02)
- On momentum methods and acceleration in stochastic optimization (0:51:33)
- Gradient Descent with Momentum and Nesterov's Accelerated Gradient (0:25:39)
- Nesterov's Accelerated Gradient Method - Part 1 (0:15:09)
- optimizers comparison: adam, nesterov, spsa, momentum and gradient descent. (0:01:25)
- CS 152 NN—8: Optimizers—SGD with Nesterov momentum (0:05:58)
- How is Nesterov's Accelerated Gradient Descent implemented in Tensorflow? (0:05:36)
- Who's Adam and What's He Optimizing? | Deep Dive into Optimizers for Machine Learning! (0:23:20)
- Gradient descent with momentum (0:00:56)