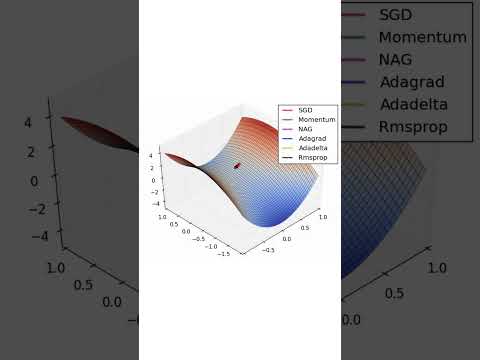

Nesterov Accelerated Gradient from Scratch in Python

Momentum is great, but it would be even better if the gradient descent steps slowed down as they approach the bottom of a minimum. That is Nesterov Accelerated Gradient in a nutshell. Check it out!

## Credit

The music is taken from YouTube Music!

## Table of Contents

- Introduction:

- Theory:

- Python Implementation:

- Conclusion:

Here is an explanation of Nesterov Accelerated Gradient, quoted from the very cool blog post mentioned in the credit section (check it out!):

"Nesterov accelerated gradient (NAG) [see reference] is a way to give our momentum term this kind of prescience. We know that we will use our momentum term γvt−1 to move the parameters θ. Computing θ−γvt−1 thus gives us an approximation of the next position of the parameters (the gradient is missing for the full update), a rough idea where our parameters are going to be. We can now effectively look ahead by calculating the gradient not w.r.t. to our current parameters θ but w.r.t. the approximate future position of our parameters:"

## Reference

Nesterov, Y. (1983). A method for unconstrained convex minimization problem with the rate of convergence O(1/k²). Doklady AN SSSR (translated as Soviet Math. Dokl.), vol. 269, pp. 543–547.

Have a great week! 👋
