Analyzing a slow report query in DAX Studio

Why is my Power BI refresh so SLOW?!? 3 Bottlenecks for refresh performance

SLOW LOADING REPORTS? Use PERFORMANCE ANALYZER to look for bottlenecks // Power BI Guide

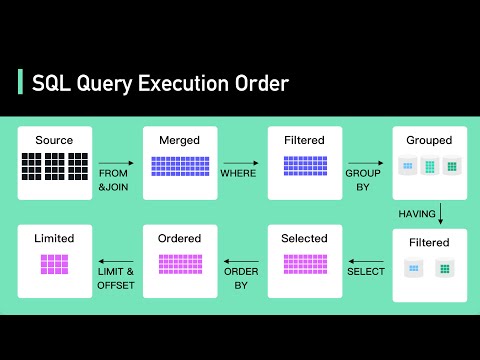

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

Reasons why your Power BI report is slow & how to optimize | Performance Tuning | MiTutorials

Debugging a slow Power BI report with Phil Seamark

BLAZING FAST DAX QUERIES | HOW TO OPTIMIZE SLOW POWER BI REPORTS AND DAX QUERIES

Course: Tuning a Stored Procedure - Finding the Slow Query with Query Store

Why is Power BI SLOW

How To Identify And Optimize Slow Power BI Visuals

3 signs it's time to OPTIMIZE your Power BI report

My Power BI report is slow: what should I do?

2 ways to reduce your Power BI dataset size and speed up refresh

How to See Where Your Oracle SQL Query is Slow

How To Optimize Slow Measures In Power BI

Q19:How would you troubleshoot a slow Power BI report?#troubleshooting #powerbi #datavisualization

Power BI Get Data: Import vs. DirectQuery vs. Live (2021)

Find your slow queries, and fix them! - Stephen Frost: PGCon 2020

Query Analysis Deep Dive: 13 Causes of a Slow Query - Fabiano Amorim

Slow Query Monitor: Optimize your eCommerce site’s performance with slow query identification + SQM...

Bert & Pinal Troubleshoot a Slow Performing SQL Server

10 Million Rows of data Analyzed using Excel's Data Model

Microsoft Power BI: My Power BI report is slow. What should I do? - BRK3022

My SQL Server is Slow
