Model calibration

Alexander Lyzhov, Samsung AI Center Moscow, Research Scientist

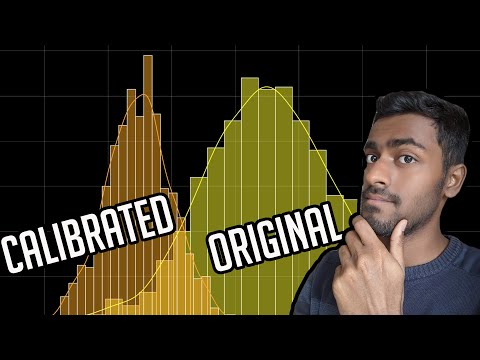

In many real-world applications we would like the probabilities that a model outputs (e.g. class probabilities in classification) to be correct in some sense (e.g. to match the actual probabilities of class occurrence). This property of models is called calibration. In this talk I will first give an introduction to various aspects of calibration: definitions of calibration errors, estimators of these errors, and calibration of neural networks. Then I will discuss in depth the developments in our understanding of calibration that occurred in 2019, focusing in particular on unbiased estimators of calibration error and on hypothesis testing for calibration. If time permits, we may also talk about calibration in regression and about differentiable calibration losses for training neural networks.
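As a rough illustration of what a calibration-error estimator and a calibration test can look like, here is a minimal Python sketch, not the method presented in the talk. It assumes a classifier that outputs one probability vector per example. `top_label_ece` computes the common equal-width binned estimate of the top-label expected calibration error (ECE), and `consistency_test` is a simplified consistency-resampling test: it keeps the predictions fixed, redraws labels from the model's own predicted distributions (which is what a perfectly calibrated model would produce), and reports how often the resampled ECE is at least as large as the observed one. Both function names and the default of 10 bins are illustrative assumptions. The binned estimator is biased and sensitive to the binning, which is part of the motivation for the unbiased estimators mentioned above.

```python
import random
from typing import Sequence


def top_label_ece(probs: Sequence[Sequence[float]], labels: Sequence[int],
                  n_bins: int = 10) -> float:
    """Equal-width binned estimate of the top-label expected calibration error.

    Hypothetical helper: probs[i] is the predicted class-probability vector for
    example i, labels[i] is its true class index.
    """
    n = len(labels)
    conf_sum = [0.0] * n_bins   # sum of top-label confidences per bin
    correct = [0.0] * n_bins    # number of correct top-label predictions per bin
    count = [0] * n_bins        # number of examples per bin
    for p, y in zip(probs, labels):
        pred = max(range(len(p)), key=p.__getitem__)   # predicted class (first max on ties)
        conf = p[pred]                                  # its predicted probability
        b = min(int(conf * n_bins), n_bins - 1)         # confidence 1.0 goes to the last bin
        conf_sum[b] += conf
        correct[b] += 1.0 if pred == y else 0.0
        count[b] += 1
    # sum_b (n_b / n) * |acc_b - conf_b| simplifies to sum_b |correct_b - conf_sum_b| / n
    return sum(abs(correct[b] - conf_sum[b]) / n for b in range(n_bins) if count[b] > 0)


def consistency_test(probs: Sequence[Sequence[float]], labels: Sequence[int],
                     n_bins: int = 10, n_resamples: int = 1000, seed: int = 0) -> float:
    """Monte Carlo p-value for H0: labels are drawn from the predicted distributions.

    Simplified consistency resampling (inputs kept fixed, only labels redrawn).
    """
    rng = random.Random(seed)
    observed = top_label_ece(probs, labels, n_bins)
    classes = range(len(probs[0]))
    exceed = 0
    for _ in range(n_resamples):
        # Under H0 each label is a draw from the model's own predictive distribution.
        fake_labels = [rng.choices(classes, weights=p)[0] for p in probs]
        if top_label_ece(probs, fake_labels, n_bins) >= observed:
            exceed += 1
    # The +1 correction keeps the Monte Carlo p-value strictly positive.
    return (exceed + 1) / (n_resamples + 1)
```

For example, a binary model that always predicts `[0.9, 0.1]` while the true labels are split evenly is overconfident: its accuracy is 0.5 but its confidence is 0.9, so `top_label_ece` returns about 0.4, and `consistency_test` gives a small p-value because labels resampled from the model's own predictions almost never produce an ECE that large.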
