
Discover LlamaIndex: Ask Complex Queries over Multiple Documents


In this video, we show how to ask complex comparison queries over multiple documents with LlamaIndex. Specifically, we show how to use our SubQuestionQueryEngine object, which breaks a complex query down into a query plan of sub-questions, each run over a subset of the documents.
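
To make the decomposition idea concrete, here is a toy sketch in plain Python. It does not use LlamaIndex and is not how SubQuestionQueryEngine is implemented: the planner is a hypothetical keyword rule standing in for the LLM, each per-document "engine" is a simple keyword-overlap sentence retriever, and the final synthesis just concatenates the answers. All names (`plan`, `make_engine`, `sub_question_query`) and the two sample documents are made up for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

# Tiny stop-word list so question scaffolding does not drive retrieval.
STOP = {"compare", "and", "the", "for", "what", "does", "did",
        "report", "about", "in", "of"}


def keywords(text: str) -> set:
    """Lower-cased alphabetic words, minus the stop-word list."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP}


@dataclass
class SubQuestion:
    doc_name: str  # which document's engine should answer it
    question: str  # narrower question scoped to that one document


def make_engine(name: str, text: str) -> Callable[[str], str]:
    """Hypothetical per-document engine: returns the sentences that share a
    keyword with the question (the document's own name is ignored, since
    routing has already scoped the question to this document)."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]

    def answer(question: str) -> str:
        q = keywords(question) - {name.lower()}
        hits = [s for s in sentences if q & keywords(s)]
        return "; ".join(hits) if hits else "no relevant text found"

    return answer


def plan(query: str, doc_names: List[str]) -> List[SubQuestion]:
    """Hypothetical rule-based planner standing in for the LLM step:
    emit one sub-question per document whose name appears in the query."""
    q = keywords(query)
    topic = " ".join(sorted(q - {n.lower() for n in doc_names}))
    return [SubQuestion(n, f"What does {n} report about {topic}?")
            for n in doc_names if n.lower() in q]


def sub_question_query(query: str, docs: Dict[str, str]) -> str:
    """Plan sub-questions, answer each against its own document, combine."""
    engines = {n: make_engine(n, t) for n, t in docs.items()}
    parts = [f"[{sq.doc_name}] {engines[sq.doc_name](sq.question)}"
             for sq in plan(query, list(docs))]
    # Synthesis: plain concatenation here, not an LLM-written answer.
    return "\n".join(parts)


if __name__ == "__main__":
    # Made-up sample documents for two fictional companies.
    docs = {
        "Acme": "Acme revenue grew 12 percent last year. Acme is based in Springfield.",
        "Globex": "Globex revenue grew 30 percent last year. Globex also sells widgets.",
    }
    print(sub_question_query("Compare revenue growth for Acme and Globex", docs))
    # prints:
    # [Acme] Acme revenue grew 12 percent last year
    # [Globex] Globex revenue grew 30 percent last year
```

The point of the sketch is the shape of the pipeline (plan, answer per document, combine): a comparison question is never sent to one big index, only to the document each sub-question names. In LlamaIndex these steps are presumably LLM-driven rather than keyword rules.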


Discover LlamaIndex: Joint Text to SQL and Semantic Search

Introduction to Query Pipelines (Building Advanced RAG, Part 1)

LLMs for Advanced Question-Answering over Tabular/CSV/SQL Data (Building Advanced RAG, Part 2)

Discover LlamaIndex: Bottoms-Up Development with LLMs (Part 3, Evaluation)

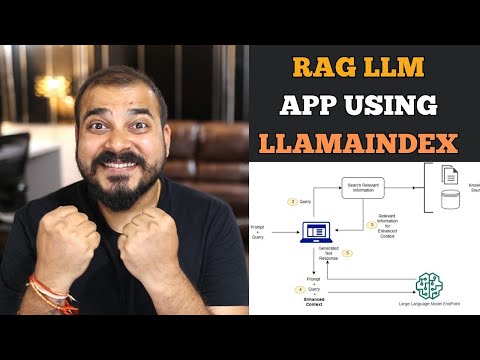

End to end RAG LLM App Using Llamaindex and OpenAI- Indexing and Querying Multiple pdf's

What is Llama Index? how does it help in building LLM applications? #languagemodels #chatgpt

Discover LlamaIndex: Bottoms-Up Development With LLMs (Part 2, Documents and Metadata)

Discover LlamaIndex: Document Management

Build Agents from Scratch (Building Advanced RAG, Part 3)

High-performance RAG with LlamaIndex

Adding Agentic Layers to RAG

'I want Llama3 to perform 10x with my private knowledge' - Local Agentic RAG w/ llama3

Discover LlamaIndex: Bottoms-Up Development with LLMs (Part 5, Retrievers + Node Postprocessors)

Step-by-Step Guide to Building a RAG LLM App with LLamA2 and LLaMAindex

How to Build an LLM Query Engine in 10 Minutes | LlamaIndex x Ray Crossover

LlamaIndex Webinar: Advanced RAG with Knowledge Graphs (with Tomaz from Neo4j)

Advanced RAG with ColBERT in LangChain and LlamaIndex

LlamaIndex Webinar: Advanced Tabular Data Understanding with LLMs

LlamaIndex Webinar: Document Metadata and Local Models for Better, Faster Retrieval

LlamaIndex Sessions: 12 RAG Pain Points and Solutions

NL2SQL with LlamaIndex: Querying Databases Using Natural Language | Code

Discover LlamaIndex: Custom Tools for Data Agents

Mastering LlamaIndex : Create, Save & Load Indexes, Customize LLMs, Prompts & Embeddings | C...
