
Create a table for a Kafka stream using Python Faust


A Kafka table is a distributed in-memory dictionary, backed by a Kafka changelog topic used for persistence and fault-tolerance.
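The idea of a dictionary backed by a changelog can be sketched in plain Python. This is only a toy model, not the Faust API: the `ChangelogTable` class and the list standing in for the Kafka changelog topic are hypothetical names made up for illustration. In Faust itself a table is declared on the app (for example with `app.Table('counts', default=int)`), and the changelog topic is managed for you.

```python
from collections import defaultdict


class ChangelogTable:
    """Toy model of a changelog-backed table (illustrative, not Faust's API)."""

    def __init__(self, changelog, default=int):
        # `changelog` is a plain list standing in for the Kafka changelog topic.
        self.changelog = changelog
        # The in-memory dictionary that readers and writers actually use.
        self._data = defaultdict(default)
        # Recovery: replay every recorded (key, value) update in order.
        for key, value in changelog:
            self._data[key] = value

    def __getitem__(self, key):
        return self._data[key]

    def __setitem__(self, key, value):
        # Record the update in the changelog first, then apply it in memory.
        self.changelog.append((key, value))
        self._data[key] = value

    def as_dict(self):
        return dict(self._data)


# Count words, as a stream processor might do for each incoming event.
changelog = []
table = ChangelogTable(changelog)
for word in ["kafka", "faust", "kafka"]:
    table[word] += 1

# Simulate a restart: a fresh table rebuilds its state from the changelog.
recovered = ChangelogTable(changelog)
```

After the simulated restart, `recovered` holds the same counts as `table`, which is the fault-tolerance property the description refers to. A real changelog topic would also be partitioned and compacted, which this sketch leaves out.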

This video explains in depth how to work with Faust tables.

The code is available in the pinned comment.

Check these playlists for more Data Engineering videos:

Apache Kafka from scratch

Snowflake complete course from scratch, with an end-to-end project and in-depth explanation

🙏🙏🙏🙏🙏🙏🙏🙏

YOU JUST NEED TO DO 3 THINGS to support my channel: LIKE, SHARE & SUBSCRIBE TO MY YOUTUBE CHANNEL


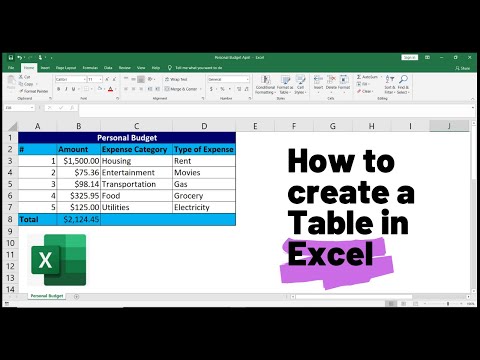

How to Create a Table in Excel (Spreadsheet Basics)

How to create a table in Excel | Shortcut to create table

How do you create a table in HTML?To create table in HTML,

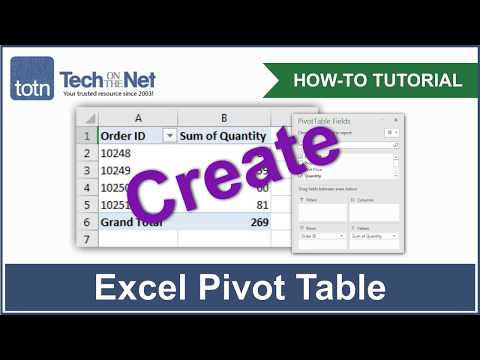

How to create a Pivot Table in Excel

How To Create An Excel Table

Excel Quick Tips - How to create a data table using keyboard shortcuts

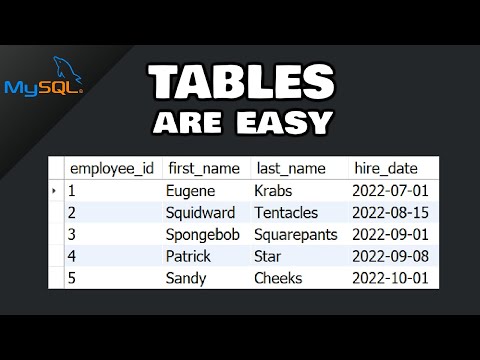

MySQL: How to create a TABLE

How to Create a Table in Excel

Making A Folding Table For Iron.#trending #viralshorts #Najaar #viralreels

SAP - ABAP - Steps to Create A Table

How to Create a Table | Excel Tutorial for Beginners

Create Table With Columns Using Python

How To Create Table In Ms Word | Short Method To Insert Table| #short #word #exceltutorial

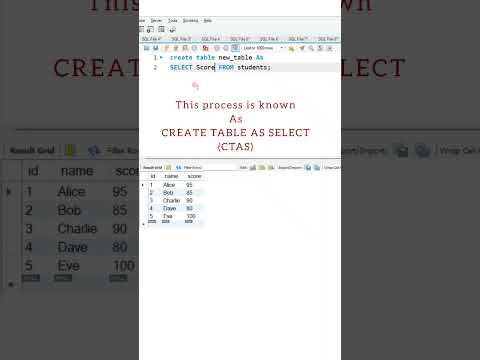

create table as select in MySQL database #shorts #mysql #database

25: Table In HTML and CSS | How To Create Tables | Learn HTML and CSS | HTML Tutorial | CSS Tutorial

HOW to create table in html./learn table tags.

How To Create A Table Of Figures In Word (& Table Of Tables!)

Create Automatic Table of Content in Word #excel#tutorial #word #table #officestarterkit

How To Create Simple Table On Figma

Access 2016 - Creating Tables - How To Create a New Table in Microsoft MS Design & Datasheet Vie...

CREATE TABLE Statement (SQL) - Creating Database Tables

How to Create a Pivot Table in Excel

How to CREATE a Table in Adobe InDesign (Step by Step) 2025

Create Table in Excel shortcut keys

Комментарии

0:03:42

0:03:42

0:00:19

0:00:19

0:00:21

0:00:21

0:02:15

0:02:15

0:00:29

0:00:29

0:00:44

0:00:44

0:08:10

0:08:10

0:00:48

0:00:48

0:00:45

0:00:45

0:12:16

0:12:16

0:00:38

0:00:38

0:00:13

0:00:13

0:00:26

0:00:26

0:00:18

0:00:18

0:10:01

0:10:01

0:00:16

0:00:16

0:06:23

0:06:23

0:00:34

0:00:34

0:05:41

0:05:41

0:06:06

0:06:06

0:02:25

0:02:25

0:00:55

0:00:55

0:01:29

0:01:29

0:00:24

0:00:24