
Mozilla Explains: Bias in AI Training Data


How can artificial intelligence be biased? Bias in artificial intelligence occurs when a system consistently produces different outputs for one group of people than for another. These biased outputs typically follow long-standing human societal biases along lines such as race, gender, biological sex, nationality, or age.
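To make "consistently different outputs for one group" concrete, one common measurement compares how often each group receives a positive output. This is sometimes called the demographic parity difference. The sketch below is an illustration under stated assumptions, not the method used in the video: the function names, group labels, and yes/no decisions are all hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(groups, outputs):
    """Return the share of positive (1) outputs for each group label."""
    if len(groups) != len(outputs):
        raise ValueError("groups and outputs must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, output in zip(groups, outputs):
        totals[group] += 1
        positives[group] += output
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Largest difference in positive-output rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions from a model (1 = approved, 0 = rejected).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
outputs = [1, 1, 1, 0, 1, 0, 0, 0]

rates = positive_rate_by_group(groups, outputs)
print(rates)              # {'A': 0.75, 'B': 0.25}
print(parity_gap(rates))  # 0.5
```

A gap near zero means the groups receive positive outputs at similar rates. On its own, this single number does not explain where a gap comes from, for example skewed training data.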

Bias can result from assumptions made by the engineers who developed the AI, or from prejudices embedded in the data used to train it. The second source, bias in training data, is what Johann Diedrick explains in the latest edition of Mozilla Explains.

Who benefits from AI Art? Mozilla Explains: biased AI outputs.

Mozilla explains bias in ai training data

Would an algorithm hire you?

What is Artificial Intelligence? Mozilla Explains.

How does AI make decisions? Mozilla Explains: Why AI Needs to Explain Itself

D.R.E.A.M. Debugging Bias in Artificial Intelligence with Margaret Mitchell

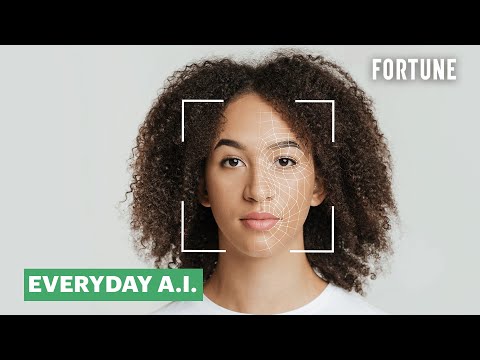

How To Eliminate Racial Bias In Artificial Intelligence | Everyday A.I.

Responding to Coded Bias: Black Women Interrogating AI

What is an Ethical Artificial Intelligence? Mozilla Explains

Gender Bias in AI and Machine Learning Systems

Uncovering the Biases in AI: What You Didn't Know

Is YouTube Watching Me? Mozilla Explains: Recommendation Engines

MozFest 2021: Bias Reel

Alexa doesn't work for you, it works for Amazon. Mozilla Explains: The Future of Personal AIs (...

Bias in AI? It's just a reflection of human bias

Ethics of AI Bias (full video)

What is The Digital Divide? Mozilla Explains

The Dangerous Impact of Bias in AI Education Systems

Is AI fair (Algorithmic bias)

Bias & A.I

Why We Need Ethics in Artificial Intelligence (AI) | NXP Explains

Why we need to expose AI's hidden biases: Explaining Explainable AI

What is the future of data and AI — and what role will Mozilla play?
