
Advancing Spark - Understanding Low Shuffle Merge


Back in Databricks Runtime 9.0 we saw the introduction of a preview "Low Shuffle Merge" feature, but it seemed to go fairly unnoticed. In DBR 10.4, it's now enabled by default and a fully GA part of the platform... but what does it actually do?

In this video, Simon walks through the theory behind low shuffle merge and what you should expect to see, both in your runtime executions and in the data layout before and after the change. Make no mistake, it's a real speed boost for many common patterns, so use it if you can!
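As a rough illustration of the data-layout point, here is a toy Python model. It is not Databricks' implementation: the function names, the update-only MERGE, the single touched file, and the "key modulo partitions" shuffle are all hypothetical simplifications. It contrasts a classic merge, where every row of a touched file (changed or not) passes through the shuffle, with the low-shuffle idea, where only the modified rows are shuffled and the unmodified rows are written back out in their original, already-clustered order.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[int, str]  # (key, value)


def shuffle(rows: List[Row], num_partitions: int) -> List[List[Row]]:
    """Toy shuffle (hypothetical): route each row to a partition by key modulo num_partitions."""
    parts: Dict[int, List[Row]] = defaultdict(list)
    for key, value in rows:
        parts[key % num_partitions].append((key, value))
    # Each non-empty partition becomes one output "file".
    return [parts[p] for p in range(num_partitions) if p in parts]


def classic_merge(target_file: List[Row], updates: Dict[int, str],
                  num_partitions: int = 4) -> Tuple[List[List[Row]], int]:
    """Every row of the touched file is shuffled, whether it changed or not."""
    merged = [(k, updates.get(k, v)) for k, v in target_file]
    return shuffle(merged, num_partitions), len(merged)


def low_shuffle_merge(target_file: List[Row], updates: Dict[int, str],
                      num_partitions: int = 4) -> Tuple[List[List[Row]], int]:
    """Only modified rows are shuffled; unmodified rows keep their original order."""
    unmodified = [(k, v) for k, v in target_file if k not in updates]
    modified = [(k, updates[k]) for k, _ in target_file if k in updates]
    kept = [unmodified] if unmodified else []
    return kept + shuffle(modified, num_partitions), len(modified)


if __name__ == "__main__":
    # A file whose rows are already clustered (sorted) by key.
    target = [(k, f"v{k}") for k in range(10)]
    updates = {3: "new3", 7: "new7"}

    files, shuffled = classic_merge(target, updates)
    print("classic:", shuffled, "rows shuffled,", len(files), "files")
    files, shuffled = low_shuffle_merge(target, updates)
    print("low shuffle:", shuffled, "rows shuffled,", len(files), "files")
```

Run as a script, this prints `classic: 10 rows shuffled, 4 files` and `low shuffle: 2 rows shuffled, 2 files`. In the classic path the eight untouched rows end up scattered across hash partitions, while in the low-shuffle path they stay together in their original sorted order, which is the kind of before/after layout difference the video discusses.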

And, as always, get in touch with Advancing Analytics if you need help on your Lakehouse journey.


Advancing Spark - Understanding Low Shuffle Merge

Advancing Spark - Low-Code Pandas with Databricks Bamboolib

Advancing Spark - Understanding the Spark UI

Tech Tip - What is Spark Advance

Advancing Spark - Understanding Terraform

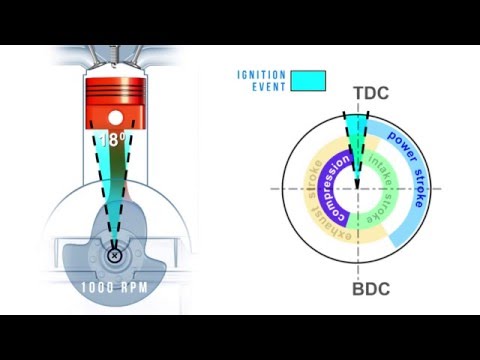

IGNITION TIMING SIMPLIFIED | The secrets of spark tuning revealed

Apache Spark in 60 Seconds

Advancing Spark - DLT Updates & Enhanced Autoscaling

SPARC Additive Manufacturing workshop - Advanced Ceramics for Sustainability - IIT Madras

Advancing Spark - Autoloader Resource Management

Advancing Spark - Engineering behind Featurestore

Advancing Spark - Self-Paced Spark Training Now Available!

Advancing Spark - How to pass the Spark 3.0 accreditation!

5 Ways to Ruin Spark Advance

Advancing Spark - Databricks Delta Change Feed

Advancing Spark - Data + AI Summit 2022 Day 1 Recap

Advancing Spark - Getting Started with Ganglia in Databricks

Advancing Spark - Data Lakehouse Star Schemas with Dynamic Partition Pruning!

Advancing Spark - Dynamic Data Decryption

Setting Ignition Timing Video - Advance Auto Parts

Apache Spark Core—Deep Dive—Proper Optimization Daniel Tomes Databricks

Advancing Spark - Configuring Azure Databricks Spot VM Clusters

Learn Apache Spark in 10 Minutes | Step by Step Guide

Spark Timing & Dwell Control Training Module Trailer
