filmov.tv

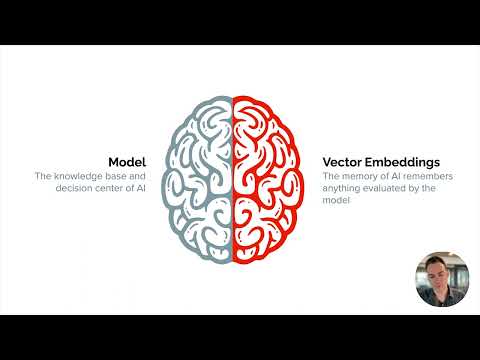

Embeddings: What they are and why they matter (0:38:38)

A Complete Overview of Word Embeddings (0:17:17)

The Biggest Misconception about Embeddings (0:04:43)

Embeddings (0:01:22)

Vector Databases simply explained! (Embeddings & Indexes) (0:04:23)

Word Embedding and Word2Vec, Clearly Explained!!! (0:16:12)

Vectoring Words (Word Embeddings) - Computerphile (0:16:56)

What Are Word and Sentence Embeddings? (0:08:24)

Claris Community Live 7: Make the impossible easy with AI and Claris FileMaker 2024 (0:59:50)

A Beginner's Guide to Vector Embeddings (0:08:29)

What are word embeddings? (0:01:00)

Word Embeddings || Embedding Layers || Quick Explained (0:02:09)

Word Embeddings in 60 Seconds for NLP AI & ChatGPT (0:01:09)

Text embeddings & semantic search (0:03:30)

Word Embeddings - EXPLAINED! (0:10:06)

OpenAI Embeddings and Vector Databases Crash Course (0:18:41)

What are Text Embeddings? (Word Embedding Explained)) (0:08:56)

The Unsung Hero of AI: Vector Embeddings (0:00:57)

Whats a Vector Embedding??? (0:00:37)

Word Embeddings (0:14:28)

Understanding embeddings in one minute #GenerativeAI (0:00:59)

What Are Vector Embeddings? 🤷♂️ #ai #aidevelopment #chatgpt #webdev #webdevelopment (0:01:00)

What are AI vector embeddings? (0:05:59)

Understanding Word Embedding|What is Word Embedding|Word Embedding in Natural language processing (0:07:09)