Understanding Java Base64 Encoding and Avoiding Data Loss

Discover how to handle Java Base64 encoding properly, ensuring no data is lost during byte-to-string conversions. Learn best practices for encoding and decoding binary data in Java.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Java Base64 encoding loss of data

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

Understanding Java Base64 Encoding and Avoiding Data Loss

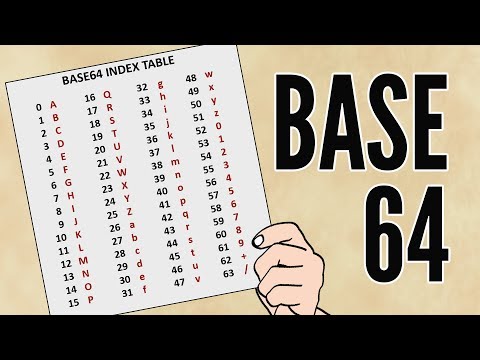

Base64 encoding is a widely used method for converting binary data into an ASCII string, which is particularly useful for transmitting data over channels designed to carry text. However, developers often ask whether data can be lost when encoding and then decoding it in Java.

The specific question is whether the original binary data is guaranteed to survive the Base64 encoding and decoding process intact. Base64 itself is lossless: decoding always returns exactly the bytes that were encoded. The risk lies in the extra step of converting the encoded bytes to a String and back, so let's break down that scenario.

The Question at Hand

In the question's code, a Certificate object is encoded to a String using Base64 and later decoded back into a byte array:

[[See Video to Reveal this Text or Code Snippet]]

The concern is whether data will remain equal to newData after these transformations.
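Base64 on its own never loses data. The minimal sketch below is not the question's code; the stand-in byte array and the class name Base64Lossless are assumptions. It encodes and decodes every possible byte value with no String step in between, then compares the result using Arrays.equals. That method checks contents, whereas == on two arrays only compares references.

```java
import java.util.Arrays;
import java.util.Base64;

class Base64Lossless {

    /** Encodes and immediately decodes, with no String conversion in between. */
    static byte[] roundTrip(byte[] data) {
        byte[] encoded = Base64.getEncoder().encode(data);
        return Base64.getDecoder().decode(encoded);
    }

    public static void main(String[] args) {
        // Hypothetical stand-in for the certificate bytes: every possible byte value.
        byte[] data = new byte[256];
        for (int i = 0; i < data.length; i++) {
            data[i] = (byte) i;
        }
        byte[] newData = roundTrip(data);

        // Arrays.equals compares contents; data == newData compares references.
        System.out.println(Arrays.equals(data, newData)); // true
        System.out.println(data == newData);              // false
    }
}
```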

The Solution: Avoiding Data Loss in Encoding

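1. Understanding the Default Encoding

When no charset is given, new String(byte[]) and String.getBytes() use the platform's default charset. The round trip is therefore only as safe as that default, which can differ from one machine or JVM configuration to the next.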
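The small sketch below shows where the default comes in. The class and method names are hypothetical. Each charset-less call behaves like its counterpart with Charset.defaultCharset() passed explicitly, and the charset the program prints will vary by JVM and platform.

```java
import java.nio.charset.Charset;
import java.util.Arrays;
import java.util.Base64;

class DefaultCharsetDemo {

    // Behaves like new String(bytes, Charset.defaultCharset()).
    static String implicitToString(byte[] bytes) {
        return new String(bytes);
    }

    // Behaves like text.getBytes(Charset.defaultCharset()).
    static byte[] implicitToBytes(String text) {
        return text.getBytes();
    }

    public static void main(String[] args) {
        // The value printed here varies between machines and Java versions.
        System.out.println("Default charset: " + Charset.defaultCharset());

        byte[] base64Bytes = Base64.getEncoder().encode(new byte[] {(byte) 0xCA, (byte) 0xFE});
        String text = implicitToString(base64Bytes);

        // Both forms use the same default, so they agree on this machine.
        System.out.println(text.equals(new String(base64Bytes, Charset.defaultCharset())));
        System.out.println(Arrays.equals(implicitToBytes(text),
                text.getBytes(Charset.defaultCharset())));
    }
}
```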

2. ASCII Compatibility in Base64 Output

Fortunately, Base64 output consists solely of ASCII characters. On platforms such as Android, Mac OS X, and most Linux distributions, the default charset is typically UTF-8, which is ASCII-compatible. Windows has traditionally used a locale-dependent code page instead. The common code pages also agree with ASCII, but code that relies on the default still behaves differently from machine to machine, and a default that is not ASCII-compatible would corrupt the text. Since Java 18 (JEP 400), the default charset is UTF-8 on all platforms unless it is explicitly overridden.
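The sketch below illustrates why ASCII compatibility is what matters. It converts Base64 bytes to a String and back with a single charset, which is what the charset-less calls would do if that charset were the platform default. UTF-16 appears only as an example of a charset that is not ASCII-compatible, not as a realistic default. The class and method names are hypothetical.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Base64;

class CharsetCompatibility {

    /** Converts Base64 bytes to a String and back, using one charset both ways. */
    static boolean survivesRoundTrip(byte[] base64Bytes, Charset charset) {
        String text = new String(base64Bytes, charset);
        return Arrays.equals(base64Bytes, text.getBytes(charset));
    }

    public static void main(String[] args) {
        byte[] base64Bytes = Base64.getEncoder().encode(new byte[] {1, 2, 3});

        // ASCII-compatible charsets map each Base64 byte to one character and back.
        for (Charset cs : new Charset[] {StandardCharsets.US_ASCII,
                StandardCharsets.ISO_8859_1, StandardCharsets.UTF_8}) {
            System.out.println(cs + ": " + survivesRoundTrip(base64Bytes, cs)); // true
        }

        // UTF-16 pairs bytes into 16-bit units and writes a byte-order mark when
        // encoding, so the bytes that come back differ from the originals.
        System.out.println(StandardCharsets.UTF_16 + ": "
                + survivesRoundTrip(base64Bytes, StandardCharsets.UTF_16)); // false
    }
}
```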

3. Using Explicit Character Encodings

To make the round trip robust and independent of the platform, specify the character encoding explicitly whenever you convert between bytes and a String, in both directions. The recommended choice is StandardCharsets.US_ASCII:

[[See Video to Reveal this Text or Code Snippet]]
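Below is a minimal sketch of this approach. It is not the snippet from the video, and the class and method names are illustrative. It sits alongside the convenience methods Base64.Encoder.encodeToString and Base64.Decoder.decode(String), which the JDK documents as performing the byte/String conversion internally with ISO-8859-1. Both routes produce the same text for Base64 output.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Base64;

class ExplicitCharsetBase64 {

    // Base64-encode, then turn the ASCII bytes into a String with a named charset.
    static String encodeExplicit(byte[] data) {
        return new String(Base64.getEncoder().encode(data), StandardCharsets.US_ASCII);
    }

    // Turn the String back into ASCII bytes with the same charset, then decode.
    static byte[] decodeExplicit(String text) {
        return Base64.getDecoder().decode(text.getBytes(StandardCharsets.US_ASCII));
    }

    public static void main(String[] args) {
        byte[] data = {(byte) 0x00, (byte) 0x80, (byte) 0xFF, 0x41};

        String explicit = encodeExplicit(data);
        // Convenience API: the String conversion happens inside the JDK.
        String convenient = Base64.getEncoder().encodeToString(data);

        System.out.println(explicit.equals(convenient));                               // true
        System.out.println(Arrays.equals(data, decodeExplicit(convenient)));           // true
        System.out.println(Arrays.equals(data, Base64.getDecoder().decode(explicit))); // true
    }
}
```

Either route removes the dependence on the platform default. The explicit form simply makes the charset visible at the call site.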

Why Choose US_ASCII?

Correctness: US_ASCII covers every character that Base64 encoding can produce.

Compatibility: Because the charset is named explicitly, the conversion no longer depends on the platform default, so it behaves identically across platforms and environments.
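US_ASCII can also serve as a strict check on the conversion. new String(bytes, StandardCharsets.US_ASCII) silently replaces any byte above 0x7F with U+FFFD. A CharsetDecoder obtained from newDecoder(), however, reports malformed input by default, so corrupted Base64 text fails loudly instead. The sketch below assumes you want that fail-fast behaviour, and the class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

class StrictAsciiDecoding {

    /** Converts bytes to a String, throwing on any byte outside 0x00-0x7F. */
    static String toAsciiStrict(byte[] bytes) throws CharacterCodingException {
        // A fresh decoder uses CodingErrorAction.REPORT, so bad input throws.
        return StandardCharsets.US_ASCII.newDecoder()
                .decode(ByteBuffer.wrap(bytes))
                .toString();
    }

    public static void main(String[] args) {
        byte[] base64Bytes = Base64.getEncoder().encode(new byte[] {1, 2, 3});
        try {
            System.out.println(toAsciiStrict(base64Bytes)); // prints "AQID"
            toAsciiStrict(new byte[] {'A', 'Q', (byte) 0xC3, 'D'});
            System.out.println("not reached");
        } catch (CharacterCodingException e) {
            // 0xC3 is outside the ASCII range, so the strict decoder refuses it.
            System.out.println("rejected: " + e);
        }
    }
}
```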

4. Other Encoding Options

While US_ASCII is the most precise choice for Base64 output, ISO_8859_1 and UTF_8 also work, because both encode the ASCII range byte-for-byte identically to US_ASCII. The difference is that they accept many characters that valid Base64 text never contains:

ISO_8859_1: Covers Western European characters in addition to the ASCII range.

UTF_8: Can represent characters from every language, yet still encodes each ASCII character as a single byte, so Base64 text is unaffected.
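The claim that these three charsets are interchangeable for Base64 text can be checked directly. The sketch below encodes the standard and URL-safe Base64 alphabets plus the '=' padding character (as defined in RFC 4648) with each charset and compares the resulting bytes. The class and method names are hypothetical.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

class Base64AlphabetCharsets {

    // Standard alphabet, URL-safe '-' and '_', and '=' padding (RFC 4648).
    static final String ALPHABET =
            "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/-_=";

    /** True if every charset turns the alphabet into exactly the same bytes. */
    static boolean sameBytes(Charset... charsets) {
        byte[] reference = ALPHABET.getBytes(charsets[0]);
        for (Charset cs : charsets) {
            if (!Arrays.equals(reference, ALPHABET.getBytes(cs))) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(sameBytes(StandardCharsets.US_ASCII,
                StandardCharsets.ISO_8859_1, StandardCharsets.UTF_8)); // true
        // UTF-8 still uses exactly one byte per Base64 character.
        System.out.println(ALPHABET.getBytes(StandardCharsets.UTF_8).length
                == ALPHABET.length()); // true
    }
}
```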

Conclusion

When you're dealing with Base64 encoding and decoding in Java, the Base64 step itself never loses data; the risk lies in converting between bytes and Strings without naming a charset. Always specify the character set explicitly, with StandardCharsets.US_ASCII being the ideal choice, so that your data remains intact as it moves between byte arrays and strings.

By adhering to these best practices, you can confidently handle binary data in your Java applications, secure in the knowledge that your data will be accurately preserved through Base64 transformations.
