Optimizing FastAPI for Concurrent Users when Running Hugging Face ML Models


To serve multiple concurrent users of a FastAPI endpoint that runs a Hugging Face model, you must start the FastAPI app with several workers. This ensures that one user's request is not blocked while another request is still being processed. I show and describe this in the video.
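As a rough illustration of starting the app with several workers, here is a minimal sketch that builds a Uvicorn launch command using its `--workers` flag. The module path `main:app`, the helper names, and the "one worker per CPU core" default are assumptions made for this example, not details taken from the video.

```python
import os
import shlex
import sys


def default_worker_count() -> int:
    """One worker per CPU core: a common starting point, assumed here for illustration."""
    return max(1, os.cpu_count() or 1)


def build_uvicorn_command(app_path: str, workers: int,
                          host: str = "0.0.0.0", port: int = 8000) -> list[str]:
    """Return the argument list that starts Uvicorn with several worker processes.

    app_path is the "module:attribute" import string of the FastAPI app,
    for example "main:app" (a hypothetical name).
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return [
        sys.executable, "-m", "uvicorn", app_path,
        "--host", host,
        "--port", str(port),
        "--workers", str(workers),  # each worker is a separate process
    ]


if __name__ == "__main__":
    # "main:app" is a placeholder; replace it with the real module and app object.
    command = build_uvicorn_command("main:app", workers=default_worker_count())
    # Print the command so it can be pasted into a shell or handed to subprocess.run.
    print(shlex.join(command))
```

Each worker is its own process, so each one loads its own copy of the model. Memory use therefore grows with the worker count, and on a machine with limited RAM or a single GPU a smaller number than the CPU count may be the practical choice.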

Sparrow - data extraction from documents with ML:

0:00 Introduction

0:30 Concurrency

2:50 Problem Example

4:10 Code and Solution

6:10 Summary

CONNECT:

- Subscribe to this YouTube channel

#python #fastapi #machinelearning


Optimizing FastAPI for Concurrent Users when Running Hugging Face ML Models

How to Make 2500 HTTP Requests in 2 Seconds with Async & Await

Performance tips by the FastAPI Expert — Marcelo Trylesinski

How FastAPI Handles Requests Behind the Scenes

Optimizing ML Model Loading Time Using LRU Cache in FastAPI 📈

Async in practice: how to achieve concurrency in FastAPI (and what to avoid doing!)

How I scaled a website to 10 million users (web-servers & databases, high load, and performance)

How REST APIs support upload of huge data and long running processes | Asynchronous REST API

Concurrent Request Handling comparison: Express Vs Fast API Vs Play Framework Vs Go Gin

Cache Systems Every Developer Should Know

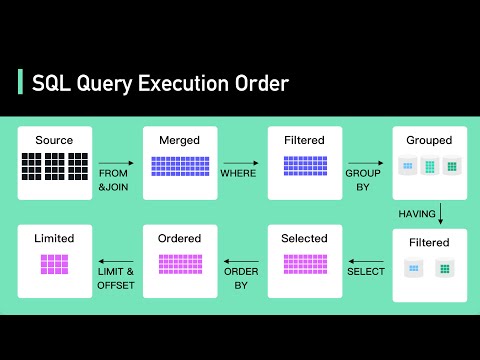

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

Async is used to optimize the execution of independent python function #shorts

FastAPI: Hitting the Performance Jackpot - Maciej Marzęta

PYTHON : How to do multiprocessing in FastAPI

How to make your Node.js API 5x faster!

Massively Speed Up Requests with HTTPX in Python

FastAPI with Docker in 1 Minute ⚡

Why do we need middleware in FASTapi?

RabbitMQ in 100 Seconds

How Much Memory for 1,000,000 Threads in 7 Languages | Go, Rust, C#, Elixir, Java, Node, Python

Want Faster HTTP Requests? Use A Session with Python!

Why node.js is the wrong choice for APIs (and what to use instead)

FastAPI Background Tasks for Non-Blocking Endpoints

Requests vs HTTPX vs Aiohttp | Which One to Pick?
