Accelerate Transformer inference on GPU with Optimum and Better Transformer


In this video, I show you how to accelerate Transformer inference with Optimum, an open-source library by Hugging Face, and Better Transformer, a PyTorch extension available since PyTorch 1.12.

Using an AWS instance equipped with an NVIDIA V100 GPU, I start from two models that I previously fine-tuned: a DistilBERT model for text classification and a Vision Transformer model for image classification. I first benchmark the original models, then I use Optimum and Better Transformer to optimize them with a single line of code, and I benchmark them again. This simple process delivers a 20-30% speedup with no accuracy drop!
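The benchmark-optimize-benchmark loop described above can be sketched roughly as follows. This is a minimal illustration, not the code from the video: the helper names (`benchmark`, `latency_reduction_percent`, `run_comparison`), the warmup and run counts, and the choice to report speedup as a reduction in mean latency are all assumptions. The single optimization line uses `BetterTransformer.transform` from `optimum.bettertransformer`; its availability may depend on the Optimum and Transformers versions you have installed.

```python
import statistics
import time


def benchmark(predict, payload, warmup=10, runs=100):
    """Return (mean, p95) latency in milliseconds of predict(payload)."""
    # Warm-up calls are not timed, so one-off setup costs do not skew the result.
    for _ in range(warmup):
        predict(payload)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(payload)
        latencies.append((time.perf_counter() - start) * 1000)
    latencies.sort()
    p95 = latencies[min(len(latencies) - 1, int(0.95 * len(latencies)))]
    return statistics.mean(latencies), p95


def latency_reduction_percent(baseline_ms, optimized_ms):
    """Assumed definition of speedup: relative drop in mean latency."""
    return (baseline_ms - optimized_ms) / baseline_ms * 100


def run_comparison(model_id, text, device=0):
    """Benchmark a text-classification pipeline before and after optimization.

    Heavy imports are kept inside the function so the helpers above
    can be used without Transformers or Optimum installed.
    """
    from optimum.bettertransformer import BetterTransformer
    from transformers import pipeline

    pipe = pipeline("text-classification", model=model_id, device=device)
    baseline_ms, _ = benchmark(pipe, text)

    # The "single line of code": swap in the Better Transformer version.
    pipe.model = BetterTransformer.transform(pipe.model)
    optimized_ms, _ = benchmark(pipe, text)

    return baseline_ms, optimized_ms, latency_reduction_percent(baseline_ms, optimized_ms)
```

Calling `run_comparison` with the identifier of your own fine-tuned DistilBERT checkpoint and a sample sentence would return the two mean latencies and the percentage difference. The numbers will vary with the GPU, the batch size, and the sequence length, so they should not be expected to match the figures quoted above.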

⭐️⭐️⭐️ Don't forget to subscribe to be notified of future videos ⭐️⭐️⭐️


Accelerate Big Model Inference: How Does it Work?

Supercharge your PyTorch training loop with Accelerate

How to run Large AI Models from Hugging Face on Single GPU without OOM

Accelerate Transformer inference on CPU with Optimum and ONNX

Nvidia CUDA in 100 Seconds

Handling Heavy-tailed Input of Transformer Inference on GPUs

'High-Performance Training and Inference on GPUs for NLP Models' - Lei Li

Better Transformer: Accelerating Transformer Inference in PyTorch at PyTorch Conference 2022

Hugging Face Infinity - GPU Walkthrough

Accelerate and Autoscale Deep Learning Inference on GPUs with KFServing - Dan Sun

How to setup NVIDIA GPU for PyTorch on Windows 10/11

Accelerate Transformer inference on CPU with Optimum and Intel OpenVINO

Accelerating Stable Diffusion Inference on Intel CPUs with Hugging Face (part 1) 🚀 🚀 🚀

Hardware acceleration for on-device Machine Learning

PyTorch 2.0 Q&A: Optimizing Transformers for Inference

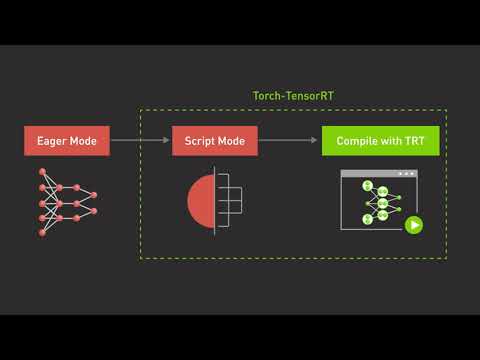

Getting Started with NVIDIA Torch-TensorRT

Supercharge your PyTorch training loop with 🤗 Accelerate

Run 70Bn Llama 3 Inference on a Single 4GB GPU

How to Load Large Hugging Face Models on Low-End Hardware | CoLab | HF | Karndeep Singh

Nvidia H100 GPU Explained in 60 Seconds | CUDA | Tensor | HPC | HBM3 #new #ai #technology #shorts

Introducing Accelerate & PEFT to Democratize LLM:Training & Inference LLM With Less Hardware

Herbie Bradley – EleutherAI – Speeding up inference of LLMs with Triton and FasterTransformer

Pipeline parallel inference with Hugging Face Accelerate
