filmov

tv

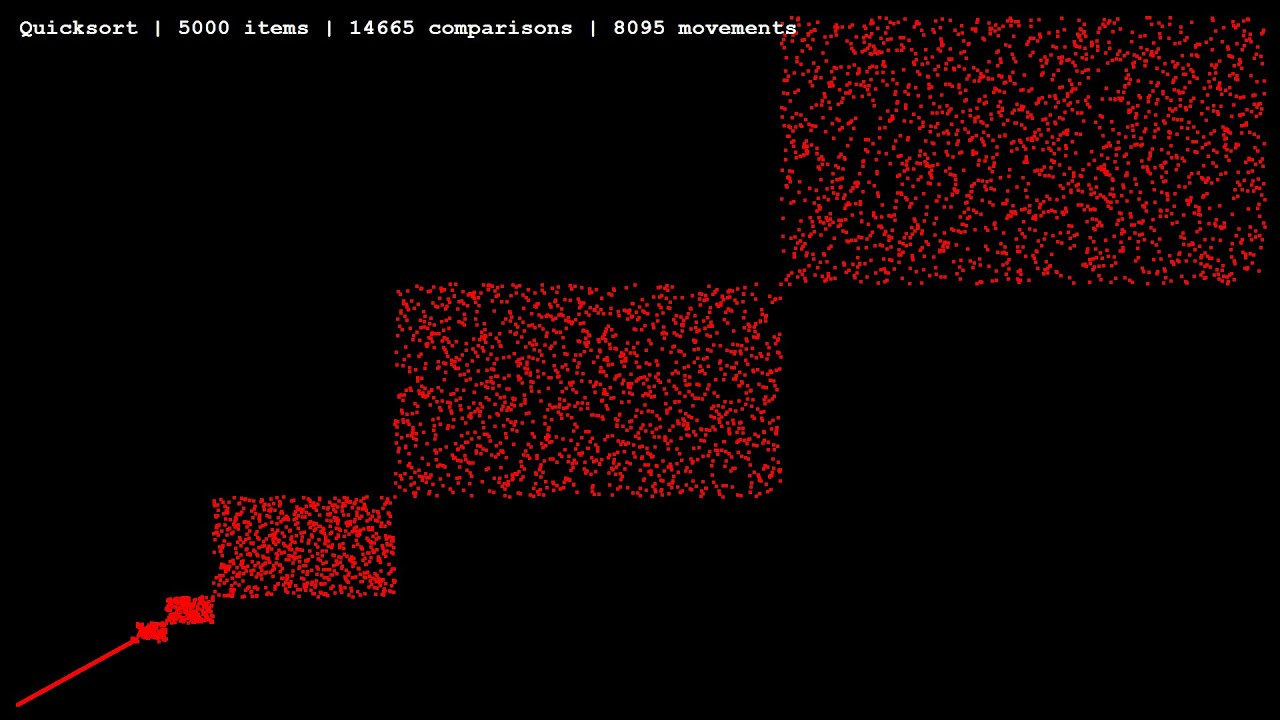

Sorting Algorithms: Quicksort

Developed in 1960 by Tony Hoare, Quicksort is a divide-and-conquer sorting algorithm. It is shown here sorting arrays of 25, 100, and 5000 elements.
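
For readers who want to see the divide-and-conquer idea in code, here is a minimal Python sketch. It is not necessarily the variant animated in the video: it assumes the Lomuto partition scheme with the last element as pivot, and the names `partition` and `quicksort` are chosen only for illustration.

```python
def partition(a, lo, hi):
    """Lomuto partition (an assumed scheme): use a[hi] as the pivot and
    move every smaller element to its left. Returns the pivot's final index."""
    pivot = a[hi]
    i = lo  # next slot for an element smaller than the pivot
    for j in range(lo, hi):
        if a[j] < pivot:
            a[i], a[j] = a[j], a[i]
            i += 1
    a[i], a[hi] = a[hi], a[i]  # place the pivot between the two parts
    return i


def quicksort(a, lo=0, hi=None):
    """Sort the list `a` in place between indices lo and hi (inclusive)."""
    if hi is None:
        hi = len(a) - 1
    if lo < hi:
        p = partition(a, lo, hi)   # divide around the pivot
        quicksort(a, lo, p - 1)    # conquer the left part
        quicksort(a, p + 1, hi)    # conquer the right part


if __name__ == "__main__":
    import random

    # Same array sizes as mentioned in the description.
    for n in (25, 100, 5000):
        data = [random.randint(0, 10_000) for _ in range(n)]
        expected = sorted(data)
        quicksort(data)
        assert data == expected
```

With a last-element pivot, already-sorted input leads to quadratic time and deep recursion; choosing a random or median-of-three pivot is a common way to avoid that.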

"CGI Snake" written & performed by Chris Zabriskie

Quick sort in 4 minutes

Quick Sort Algorithm

Learn Quick Sort in 13 minutes ⚡

Quick Sort Algorithm Explained!

Quicksort Algorithmus / Quick Sort Sortierverfahren mit Beispiel (deutsch)

2.8.1 QuickSort Algorithm

Quick Sort - Computerphile

Quicksort Sort Algorithm in Java - Full Tutorial With Source

Sorting Algorithms | By Gurmeet Singh | Intuition | Algorithm #sortingAlgorithms #fastforwardcoders

7.6 Quick Sort in Data Structure | Sorting Algorithm | DSA Full Course

Quick Sort (LR pointers)

Quicksort: Partitioning an array

Quick Sort #animation

L-3.1: How Quick Sort Works | Performance of Quick Sort with Example | Divide and Conquer

Quicksort vs Mergesort in 35 Seconds

QUICK SORT | Sorting Algorithms | DSA | GeeksforGeeks

Quicksort

Quicksort Algorithm: A Step-by-Step Visualization

Quicksort algorithm

The Quicksort Sorting Algorithm: Pick A Pivot, Partition, & Recurse

Algorithms: Quicksort

Visualization of Quick sort (HD)

Quick Sort | Animation | Coddict

15 Sorting Algorithms in 6 Minutes
