filmov.tv

Neural networks [5.4] : Restricted Boltzmann machine - contrastive divergence


Neural networks [5.1] : Restricted Boltzmann machine - definition

Restricted Boltzmann Machine | Neural Network Tutorial | Deep Learning Tutorial | Edureka

Neural networks [5.3] : Restricted Boltzmann machine - free energy

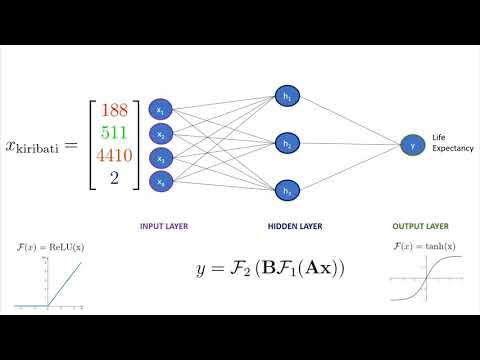

But what is a neural network? | Chapter 1, Deep learning

Restricted Boltzmann Machines in 60 seconds!

Introduction to Boltzmann Machines

[4/5] Rémi Monasson (2018) Unsupervised neural networks: from theory to systems biology

Deep Neural Network

Neural networks [5.5] : Restricted Boltzmann machine - contrastive divergence (parameter update)

An Old Problem - Ep. 5 (Deep Learning SIMPLIFIED)

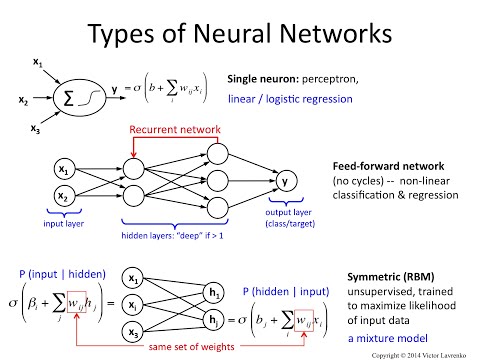

Neural Networks 5: feedforward, recurrent and RBM

Deep Neural Network (DNN) | Deep Learning

The Boltzmann Machine – The Most Important Energy-Based Neural Network #shorts

Restricted Boltzmann Machines (RBM) - A friendly introduction

Deep Learning Part - II (CS7015): Lec 18.3 Restricted Boltzmann Machines

Deep Neural Network Introduction

NEVER buy from the Dark Web.. #shorts

Feed Forward Network In Artificial Neural Network Explained In Hindi

What is a Neural Network | Neural Networks Explained in 7 Minutes | Edureka

Lecture 15 | (4/5) Recurrent Neural Networks

Applied Deep Learning 2022 - Lecture 4 - Recurrent Neural Networks

Neural networks [9.10] : Computer vision - convolutional RBM

Attention mechanism: Overview

Gradient descent, how neural networks learn | Chapter 2, Deep learning

Комментарии

![Neural networks [5.1]](https://i.ytimg.com/vi/p4Vh_zMw-HQ/hqdefault.jpg) 0:12:17

0:12:17

0:12:12

0:12:12

![Neural networks [5.3]](https://i.ytimg.com/vi/e0Ts_7Y6hZU/hqdefault.jpg) 0:12:54

0:12:54

0:18:40

0:18:40

0:01:07

0:01:07

0:08:56

0:08:56

![[4/5] Rémi Monasson](https://i.ytimg.com/vi/mAc7zP64z2o/hqdefault.jpg) 1:42:16

1:42:16

0:01:58

0:01:58

![Neural networks [5.5]](https://i.ytimg.com/vi/wMb7cads0go/hqdefault.jpg) 0:11:10

0:11:10

0:05:25

0:05:25

0:04:56

0:04:56

0:05:32

0:05:32

0:00:58

0:00:58

0:36:58

0:36:58

0:16:10

0:16:10

0:01:56

0:01:56

0:00:46

0:00:46

0:03:54

0:03:54

0:07:34

0:07:34

1:20:43

1:20:43

1:05:53

1:05:53

![Neural networks [9.10]](https://i.ytimg.com/vi/y0SISi_T6s8/hqdefault.jpg) 0:10:46

0:10:46

0:05:34

0:05:34

0:20:33

0:20:33