filmov

tv

Boost YOLO Inference Speed and reduce Memory Footprint using ONNX-Runtime | Part-1

Показать описание

In this stream we would convert Ultralytics YOLO models from their standard Pytorch (.pt) format to ONNX format to improve their infrerence speed and reduce their memory footprint by running them through onnx-runtime. Our goal would be to get the predictions to exactly match in both compute sessions and also benchmark the improvements. For our first video of the series we would be working with OBB (Oriented Bouding Box) models.

Boost YOLO Inference Speed and reduce Memory Footprint using ONNX-Runtime | Part-2

Boost YOLO Inference Speed and reduce Memory Footprint using ONNX-Runtime | Part-1

Boost YOLO Inference Speed and reduce Memory Footprint using ONNX-Runtime | Part-2 (Continued)

Realtime #YOLOv8 inference from an iPad 🔥

Speed Up YOLO Object Detection by 4x with Python - here is how

How to Use YOLO12 for Object Detection with the Ultralytics Package | Is YOLO12 Fast or Slow? 🚀

How to Improve YOLOv8 Accuracy and Speed 🚀🎯

Fastest YOLOv5 CPU Inference with Sparsity and DeepSparse with Mark Kurtz

Speed up your Machine Learning Models with ONNX

Optimize Your AI - Quantization Explained

How To Export and Optimize an Ultralytics YOLOv8 Model for Inference with OpenVINO | Episode 9

Speed Estimation & Vehicle Tracking | Computer Vision | Open Source

Performance Metrics Ultralytics YOLOv8 | MAP, F1 Score, Precision, IOU & Accuracy | Episode 25

Spoiller: YOLOv8 Accuracy +21mAP / Speed Increases 4x

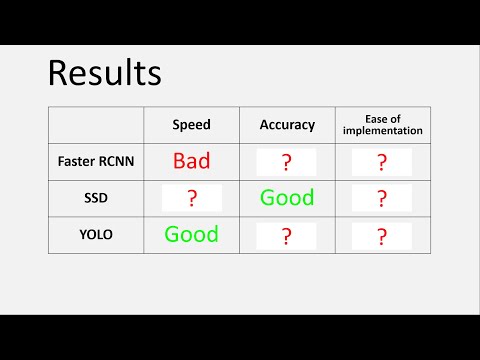

Object Detection best model / best algorithm in 2023 | YOLO vs SSD vs Faster-RCNN comparison Python

YOLO-NAS: Introducing One of The Most Efficient Object Detection Algorithms

How To Export Custom Trained Ultralytics YOLOv8 Model and Run Live Inference on Webcam 💨

Nvidia CUDA in 100 Seconds

DeepSparse - Enabling GPU Level Inference on Your CPU

Crazy Fast YOLO11 Inference with Deepstream and TensorRT on NVIDIA Jetson Orin

Ultralytics YOLO11 on NVIDIA Jetson Orin NX with DeepStream 🎉

How To Increase Inference Performance with TensorFlow-TensorRT

How to Achieve the Fastest CPU Inference Performance for Object Detection YOLO Models

Increase YOLOv4 object detection speed on GPU with TensorRT

Комментарии

0:54:37

0:54:37

2:04:22

2:04:22

1:24:29

1:24:29

0:00:12

0:00:12

0:16:31

0:16:31

0:10:31

0:10:31

0:02:26

0:02:26

0:19:15

0:19:15

0:00:33

0:00:33

0:12:10

0:12:10

0:07:28

0:07:28

0:24:33

0:24:33

0:09:18

0:09:18

0:01:36

0:01:36

0:05:07

0:05:07

0:06:18

0:06:18

0:00:17

0:00:17

0:03:13

0:03:13

0:08:56

0:08:56

0:26:50

0:26:50

0:00:58

0:00:58

0:06:18

0:06:18

0:59:10

0:59:10

0:25:52

0:25:52