filmov

tv

Spark [Hash Partition] Explained

Показать описание

Spark [Hash Partition] Explained {தமிழ்}

Video Playlist

-----------------------

YouTube channel link

Website

Technology in Tamil & English

#bigdata #hadoop #spark #apachehadoop #whatisbigdata #bigdataintroduction #bigdataonline #bigdataintamil #bigdatatamil #hadoop #hadoopframework #hive #hbase #sqoop #mapreduce #hdfs #hadoopecosystem #spark #bigdata #apachespark #hadoop #sparkmemoryconfig #executormemory #drivermemory #sparkcores #sparkexecutors #sparkmemory #sparkdeploy #sparksubmit #sparkyarn #sparklense #sparkprofiling #sparkqubole #sparkhashpartition #hashpartition

Video Playlist

-----------------------

YouTube channel link

Website

Technology in Tamil & English

#bigdata #hadoop #spark #apachehadoop #whatisbigdata #bigdataintroduction #bigdataonline #bigdataintamil #bigdatatamil #hadoop #hadoopframework #hive #hbase #sqoop #mapreduce #hdfs #hadoopecosystem #spark #bigdata #apachespark #hadoop #sparkmemoryconfig #executormemory #drivermemory #sparkcores #sparkexecutors #sparkmemory #sparkdeploy #sparksubmit #sparkyarn #sparklense #sparkprofiling #sparkqubole #sparkhashpartition #hashpartition

Spark [Hash Partition] Explained

Hash Partitioning vs Range Partitioning | Spark Interview questions

Spark Partition | Hash Partitioner | Interview Question

Spark Basics | Partitions

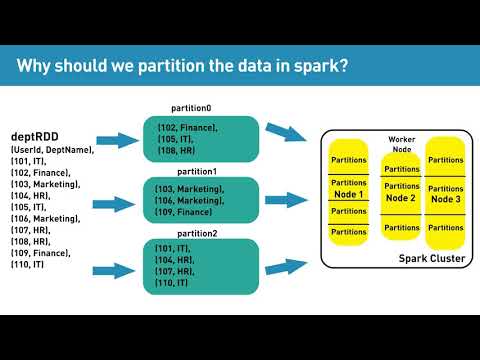

Why should we partition the data in spark?

Partition vs bucketing | Spark and Hive Interview Question

Hash Partitioning - DataStage

Spark Basics | Shuffling

#5 Spark Types of Partitioner | Range | Hash | Round Robin | Single in English

Spark Shuffle Hash Join: Spark SQL interview question

Spark Join and shuffle | Understanding the Internals of Spark Join | How Spark Shuffle works

Hash Partition in Spark with real world example code June 2023 interview

How to Screw up your Repartitioning! - Spark Partitioning (Part 11)

coalesce vs repartition vs partitionBy in spark | Interview question Explained

How do nested loop, hash, and merge joins work? Databases for Developers Performance #7

Partitioning and bucketing in Spark | Lec-9 | Practical video

12. Distributions(Hash, Round Robbin & Replicate) in Azure Synapse Analytics

Understanding how to Optimize PySpark Job | Cache | Broadcast Join | Shuffle Hash Join #interview

Spark [Custom Partition] Implementation

Apache Spark - HashPartitioner, RangePartitioner, CustomPartition, Accumulator, Broadcast Variables

Oracle Tutorial - Hash Partition

Spark Join | Sort vs Shuffle | Spark Interview Question | Lec-13

Databases: MySQL Range partitioning vs Hash partitioning

Dynamic Partition Pruning | Spark Performance Tuning

Комментарии

![Spark [Hash Partition]](https://i.ytimg.com/vi/KbaLrFgGbNw/hqdefault.jpg) 0:17:37

0:17:37

0:04:25

0:04:25

0:14:43

0:14:43

0:05:13

0:05:13

0:03:43

0:03:43

0:09:15

0:09:15

0:02:59

0:02:59

0:05:46

0:05:46

0:04:19

0:04:19

0:03:40

0:03:40

0:09:15

0:09:15

0:02:02

0:02:02

0:25:25

0:25:25

0:08:02

0:08:02

0:15:55

0:15:55

0:27:52

0:27:52

0:08:57

0:08:57

0:00:48

0:00:48

![Spark [Custom Partition]](https://i.ytimg.com/vi/KQtgwB6Djqs/hqdefault.jpg) 0:07:39

0:07:39

0:57:27

0:57:27

0:09:40

0:09:40

0:19:42

0:19:42

0:01:45

0:01:45

0:06:32

0:06:32