
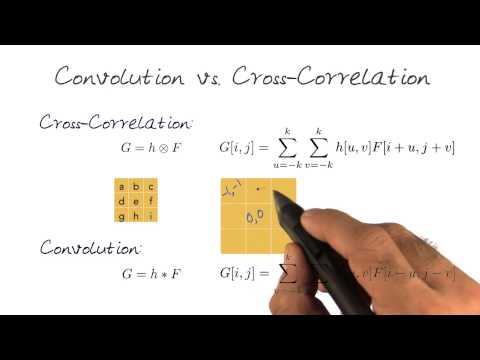

But what is a convolution?


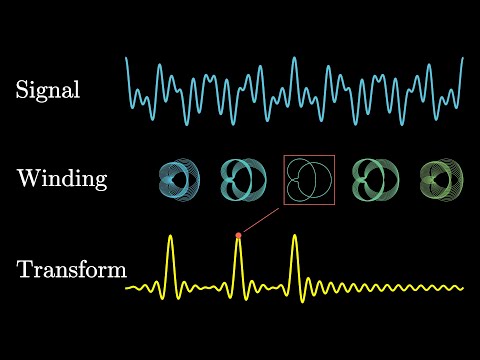

Discrete convolutions, from probability to image processing and FFTs.
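To make this summary concrete, here is a minimal Python sketch of the direct discrete convolution, (a * b)[n] = Σ_i a[i]·b[n − i]. It is not code from the video. The function name `convolve` and the examples are illustrative assumptions, chosen to echo three topics from the timestamps: the sum of two dice, a moving average, and polynomial multiplication.

```python
from fractions import Fraction


def convolve(a, b):
    """Direct discrete convolution: (a * b)[n] = sum over i of a[i] * b[n - i].

    Every entry of a is multiplied by every entry of b, so two length-N
    inputs cost N * N multiplications.
    """
    if not a or not b:
        return []
    result = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            result[i + j] += x * y  # this term contributes to index n = i + j
    return result


# Adding two random variables: the sum of two fair six-sided dice.
die = [Fraction(1, 6)] * 6      # P(1), ..., P(6)
two_dice = convolve(die, die)   # P(2), ..., P(12)
print(two_dice[5])              # P(sum == 7) -> 1/6

# Moving average: convolve with a uniform kernel of width 3.
signal = [1, 2, 3, 4, 5]
kernel = [Fraction(1, 3)] * 3
smoothed = convolve(signal, kernel)  # entries 2..4 are the full-window averages 2, 3, 4

# Polynomial multiplication: (1 + 2x)(3 + 4x) = 3 + 10x + 8x^2.
print(convolve([1, 2], [3, 4]))  # -> [3, 10, 8]
```

The nested loop makes the cost of the direct method explicit: every entry of `a` meets every entry of `b` exactly once.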

An equally valuable form of support is to simply share the videos.

Other videos I referenced

Live lecture on image convolutions for the MIT Julia lab

Lecture on Discrete Fourier Transforms

Reducible video on FFTs

Veritasium video on FFTs

A small correction regarding the integer multiplication algorithm mentioned at the end: a “straightforward” application of the FFT gives a runtime of O(n log(n) log(log(n))). That log(log(n)) factor is tiny, but it was only recently, in 2019, that Harvey and van der Hoeven found an algorithm that removes it.
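The FFT speed-up behind this correction works by computing the convolution of digit sequences in the frequency domain. Below is a minimal sketch, assuming plain floating-point complex arithmetic and base-10 digits (both illustrative choices, not code from the video). It pads both digit lists to a power of two, transforms them, multiplies pointwise, transforms back, and then propagates carries. This is the simple textbook approach, not the exact algorithms behind the runtimes quoted above, and floating-point rounding limits it to moderately sized inputs. The names `fft`, `fft_convolve` and `multiply` are hypothetical.

```python
import cmath


def fft(values, invert=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(values) must be a power of two.

    With invert=True this is the inverse transform *without* the 1/n factor.
    """
    n = len(values)
    if n == 1:
        return list(values)
    even = fft(values[0::2], invert)
    odd = fft(values[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def fft_convolve(a, b):
    """Convolve two integer sequences via pointwise products of their FFTs."""
    size = len(a) + len(b) - 1
    n = 1
    while n < size:  # zero-pad to a power of two that fits the full result
        n *= 2
    fa = fft([complex(x) for x in a] + [0j] * (n - len(a)))
    fb = fft([complex(x) for x in b] + [0j] * (n - len(b)))
    raw = fft([x * y for x, y in zip(fa, fb)], invert=True)
    # Finish the inverse transform (divide by n) and round off float error.
    return [round(v.real / n) for v in raw[:size]]


def multiply(x, y):
    """Multiply non-negative integers by convolving their base-10 digits."""
    a = [int(d) for d in reversed(str(x))]  # least significant digit first
    b = [int(d) for d in reversed(str(y))]
    digits, carry = [], 0
    for c in fft_convolve(a, b):  # coefficients may exceed 9, so carry them
        carry += c
        digits.append(carry % 10)
        carry //= 10
    while carry:
        digits.append(carry % 10)
        carry //= 10
    return int("".join(str(d) for d in reversed(digits)))


print(multiply(1234, 5678))  # -> 7006652
```

Each FFT here takes O(N log N) floating-point operations for N digits. The schoolbook method, by contrast, needs N^2 digit multiplications.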

Another small correction at 17:00: I describe O(N^2) as meaning "the number of operations needed scales with N^2". However, this is technically what Θ(N^2) would mean. O(N^2) means that the number of operations needed is at most a constant times N^2; in particular, it includes algorithms whose runtimes don't actually have any N^2 term but are bounded by it. The distinction doesn't matter in this case, since there is an explicit N^2 term.
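For reference, here are the standard definitions behind this correction, written for a cost function f(N) and a comparison function g(N). These symbols are introduced here for illustration.

$$
f(N) = O(g(N)) \iff \exists\, c > 0,\ N_0 \ \text{such that}\ f(N) \le c\, g(N) \ \text{for all}\ N \ge N_0
$$

$$
f(N) = \Theta(g(N)) \iff \exists\, c_1, c_2 > 0,\ N_0 \ \text{such that}\ c_1\, g(N) \le f(N) \le c_2\, g(N) \ \text{for all}\ N \ge N_0
$$

For example, f(N) = N log N is O(N^2) but not Θ(N^2). A cost of exactly N^2 multiplications, as in direct convolution of two length-N lists, is both.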

Thanks to these viewers for their contributions to translations

Hebrew: Omer Tuchfeld

Italian: Emanuele Vezzoli

Vietnamese: lkhphuc

--------

These animations are largely made using a custom Python library, manim. See the FAQ comments here:

You can find code for specific videos and projects here:

Music by Vincent Rubinetti.

Download the music on Bandcamp:

Stream the music on Spotify:

Timestamps

0:00 - Where do convolutions show up?

2:07 - Add two random variables

6:28 - A simple example

7:25 - Moving averages

8:32 - Image processing

13:42 - Measuring runtime

14:40 - Polynomial multiplication

18:10 - Speeding up with FFTs

21:22 - Concluding thoughts

------------------

Various social media stuffs:
