filmov.tv

Did Shader Model 3.0 Help the 6800 Ultra?


In this video we put Shader Model 3.0 support on the GeForce 6800 Ultra to the test and see whether it really made NVIDIA's high-end 2004 offerings more "future-proof".

How to fix "The game requires Shader Model 3.0"

This machine's GPU does not support Shader Model 3: fix for all games, 100% working

How to fix Farming Simulator 22 "Couldn't Init 3D System" Shader Model 3.0 is require...

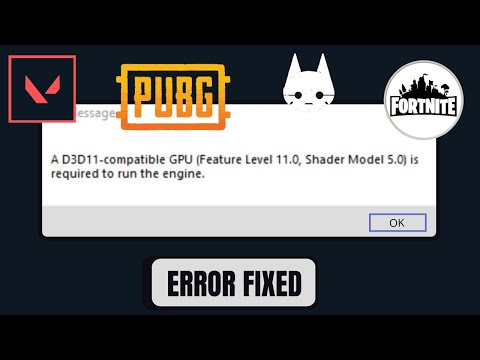

How to fix Fortnite "D3D11-compatible GPU (Feature Level 11.0, Shader Model 5.0)" error

Farming Simulator 2019 3D system Shader Model 3.0 error fix (TR)

"A D3D11-compatible GPU (Feature Level 11.0, Shader Model 5.0) is required to run the engine": Valorant

A D3D11-compatible GPU (Feature Level 11.0, Shader Model 5.0) is required to run the engine - Fix ✅...

Can someone tell me why the shader is not working? #shorts #minecraft #subscribe

How to fix GTA 4 fatal error: Shader Model 3.0 or higher required

Fix fatal error in GTA 4: Shader Model 3.0 or higher is required

How to fix the Shader Model 3.0 error in FS 17 (15, 19)

How to fix the Shader Model 3.0 error

FIXED: A D3D11 compatible GPU (feature level 11.0 shader model 5.0) is required to run the engine

Tesla Model 3 Crash Test - BeamNG.drive

How to fix the "Shader Model 3" error in Farming 2017

Run Oblivion on an Intel HD GPU with Shader 3.0 and HDR (Mac/Windows)

3 Hours vs. 3 Years of Blender

How to get rid of the Shader 3.0 error! GTA 4, Paladins, Warface, etc.

Realistic Blender Car Animation CGI #blender3d #blenderrender #caranimation

"A D3D11-compatible GPU (Feature Level 11, Shader Model 5.0) is required to run the engine" fix ✅ #howto...


Bully "Requires a 32-bit display and vertex and pixel Shader Model 3.0 support": finally fixed

HLSL Shader Model 6.6 | Greg Roth | Game Stack Live '21

How to fix "A D3D11-compatible GPU (Feature Level 11.0, Shader Model 5.0) is required to run the engine"
