'A Random Variable is NOT Random and NOT a Variable'

What is a random variable? Why do some people say "it's not random and it's not a variable"?

What is "expected value"? What is the difference between a random variable and a probability distribution? Includes an example that can be solved two ways: either with probability distributions or purely with random variables.

Chapters:

0:00 Are random variables random?

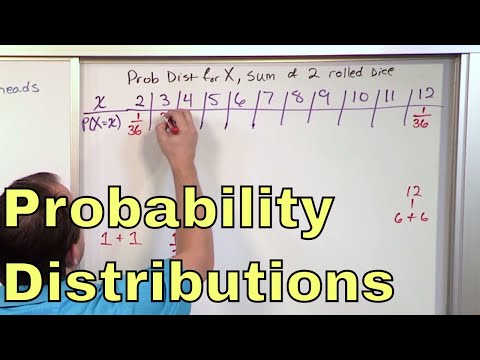

0:55 Example sum of two dice

2:40 A random variable is a collection of events

5:52 A random variable is a FUNCTION

8:49 Level sets of the function are events

10:12 How to use it as a variable

11:50 Definition of Expected Value

13:30 Linearity of Expectation

14:30 Probability Distribution vs A Random Variable

18:10 Two different formulas for the expected value

21:44 Expected value of binomial random variable example with two solutions

23:00 Solution 1 Probability Distribution Solution

25:34 Solution 2 Random Variables Only Solution
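
The ideas named in the chapters can be sketched in a few lines of Python. This is only an illustration, not the video's own worked solution: the names `omega`, `X`, `level_set`, `pmf`, and the two `binomial_mean_*` helpers are hypothetical, and the binomial parameters `n = 10`, `p = 1/3` are assumed for the demo. The random variable is an ordinary function on the sample space, its level sets are events, and the expected value can be computed either outcome by outcome or from the probability distribution.

```python
from fractions import Fraction
from itertools import product
from math import comb

# Sample space: the 36 ordered outcomes of two fair dice, each with probability 1/36.
omega = list(product(range(1, 7), repeat=2))
prob = {w: Fraction(1, 36) for w in omega}

def X(w):
    """The random variable: a plain function from outcomes to numbers (sum of the dice)."""
    return w[0] + w[1]

def level_set(value):
    """The event {X = value}: every outcome that X maps to `value`."""
    return [w for w in omega if X(w) == value]

def pmf(value):
    """Probability distribution of X: the probability of each level set."""
    return sum(prob[w] for w in level_set(value))

# Formula 1: sum over outcomes in the sample space (uses X directly).
e_over_outcomes = sum(X(w) * prob[w] for w in omega)

# Formula 2: sum over values (uses only the probability distribution).
e_over_values = sum(v * pmf(v) for v in range(2, 13))

def binomial_mean_by_distribution(n, p):
    """Distribution route: sum of k * P(X = k) with the binomial pmf."""
    return sum(k * comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1))

def binomial_mean_by_linearity(n, p):
    """Random-variable route: X is a sum of n indicators, each with expectation p."""
    return sum(p for _ in range(n))

# Assumed demo parameters, not taken from the video.
n, p = 10, Fraction(1, 3)
mean_dist = binomial_mean_by_distribution(n, p)
mean_lin = binomial_mean_by_linearity(n, p)
```

Using `Fraction` keeps every probability exact, so the two formulas for the expected value agree exactly rather than only up to floating-point error.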

Why “probability of 0” does not mean “impossible” | Probabilities of probabilities, part 2

Poisson or Not? (When does a random variable have a Poisson distribution?)

02 - Random Variables and Discrete Probability Distributions

is an estimator a random variable why or why not

Random Variables and Probability Distributions

What is Random variable? Explain Random variable, Define Random variable, Meaning of Random variable

Which of the following is not a discrete random variable?

What is a Markov Process #probability #stochasticprocesses #markov

Which is not a discrete random variable?

Which is not a discrete random variable?

A random variable may be discrete or continuous, but not both.

Definition of Random Variable

A random variable may be discrete or continuous, but not both.

MA3355 | MA3391 | MA3303 |Probability and Random Variables | Problem 1|Binomial Distribution | Tamil

What is a Probability Density Function (pdf)? ('by far the best and easy to understand explanat...

Q-Q plot:How to test if a random variable is normally distributed or not?

Discrete Random Variable : How to find probability from a CDF.

Which is a not a discrete random variable?

Understanding 'Random Variable': An English Learning Guide

The sample mean is not a random variable when the population parameters are known.

PROBABILITY MASS FUNCTION (PMF) || PROBABILITY AND STATISTICS

Probability? It’s all made up

(Statistics Basics) Lecture 2: Random Variable and its Probability Distribution
