filmov

tv

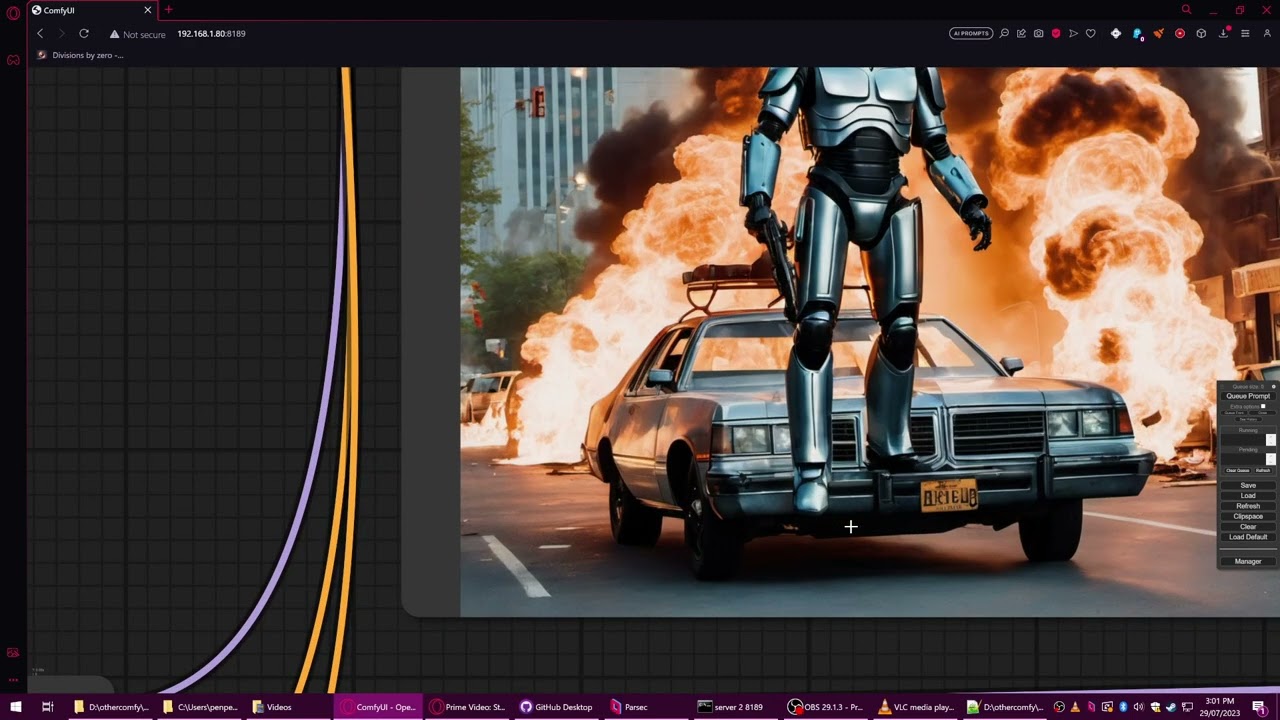

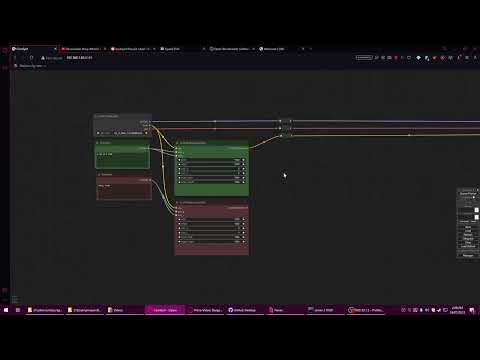

ComfyUI SDXL Basic Setup Part 3

A barebones, basic way of setting up SDXL in ComfyUI.

Requires:

ComfyUI Manager (the easiest way to find custom nodes)

WAS suite

Derfuu's Math Nodes

Quality of life suite

Related videos:

ComfyUI SDXL Basic Setup Part 1

ComfyUI SDXL Basic Setup Part 2

SDXL ComfyUI Stability Workflow - What I use internally at Stability for my AI Art

ComfyUI SDXL Basic Setup Part 4 - upgrading your workflow

Run SDXL Locally With ComfyUI (2024 Stable Diffusion Guide)

ComfyUI 01- Install and run SDXL is Super Easy

Beginner's Guide to Stable Diffusion and SDXL with COMFYUI

ComfyUI - Change Character Outfit with Magic Clothing

How to Install ComfyUI in 2023 - Ideal for SDXL!

ComfyUI SDXL Advanced Setup Part 5 - Adv dual sampler

L1: Using ComfyUI, EASY basics - Comfy Academy

ComfyUI Tutorial - How to Install ComfyUI on Windows, RunPod & Google Colab | Stable Diffusion S...

ComfyUI for Stable Diffusion Tutorial (Basics, SDXL & Refiner Workflows)

ComfyUI - Getting Started : Episode 1 - Better than AUTO1111 for Stable Diffusion AI Art generation

How to install and use ComfyUI - Stable Diffusion.

ComfyUI : NEW Official ControlNet Models are released! Here is my tutorial on how to use them.

ComfyUI - Getting Started : Episode 2 - Custom Nodes Everyone Should Have

ComfyUI Workflow Creation Essentials For Beginners

SDXL ComfyUI img2img - A simple workflow for image 2 image (img2img) with the SDXL diffusion model

How to Install SDXL Base 0.9 and Run with ComfyUi Easy Steps | Beginners Guide.

How To Install ComfyUI and Run SDXL on Low GPUs

The Easiest ComfyUI Workflow With Efficiency Nodes

NEW SDXL ComfyUI controlnet Installation and Workflow models
