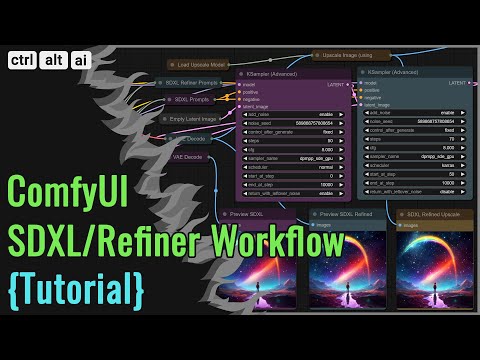

SDXL ComfyUI Stability Workflow - What I use internally at Stability for my AI Art

We will start with a basic workflow, extend it with a refinement pass, and then add one more special twist I am sure you will enjoy. #stablediffusion #sdxl #comfyui

Grab the SDXL model from here (OFFICIAL): (bonus LoRA also here)

The refiner is also available here (OFFICIAL):

Additional VAE (only needed if you do not plan to use the built-in version):

Run SDXL Locally With ComfyUI (2024 Stable Diffusion Guide)

SDXL 1.0 ComfyUI Most Powerful Workflow With All-In-One Features For Free (AI Tutorial)

ComfyUI for Stable Diffusion Tutorial (Basics, SDXL & Refiner Workflows)

Beginner's Guide to Stable Diffusion and SDXL with COMFYUI

SDXL Best Workflow in ComfyUI

Improve Speed and Performance in ComfyUI and Stable Diffusion with LOWVRAM and Workflow Management

SDXL Base + Refiner workflow using ComfyUI | AI art generator

Blazing Fast AI Generations with SDXL Turbo + Local Live painting

Stable Diffusion is FINISHED! How to Run Flux.1 on ComfyUI

SDXL ComfyUI img2img - A simple workflow for image 2 image (img2img) with the SDXL diffusion model

ComfyUI 02 - SDXL Refiner Workflow

ComfyUI : NEW Official ControlNet Models are released! Here is my tutorial on how to use them.

0 to 100 | Stable Diffusion SDXL & ComfyUI - An In-Depth Workflow Tutorial from Scratch

How To Install ComfyUI and Run SDXL on Low GPUs

SDXL - BEST Build + Upscaler + Steps Guide

New Model! SDXL Turbo - 1 Step Real Time Stable Diffusion in ComfyUI

ControlNet Tutorial for ComfyUI.

NEW SDXL ComfyUI controlnet Installation and Workflow models

ComfyUI : Ultimate Upscaler - Upscale any image from Stable Diffusion, MidJourney, or photo!

SDXL ControlNet Tutorial for ComfyUI plus FREE Workflows!

ComfyUI - SUPER FAST Images in 4 steps or 0.7 seconds! On ANY stable diffusion model or LoRA

How To Use ComfyUI img2img Workflow With SDXL 1.0 In Google Colab(AI Tutorial)

Stable Diffusion AnimateDiff For SDXL Released Beta! Here Is What You Need (Tutorial Guide)
