filmov

tv

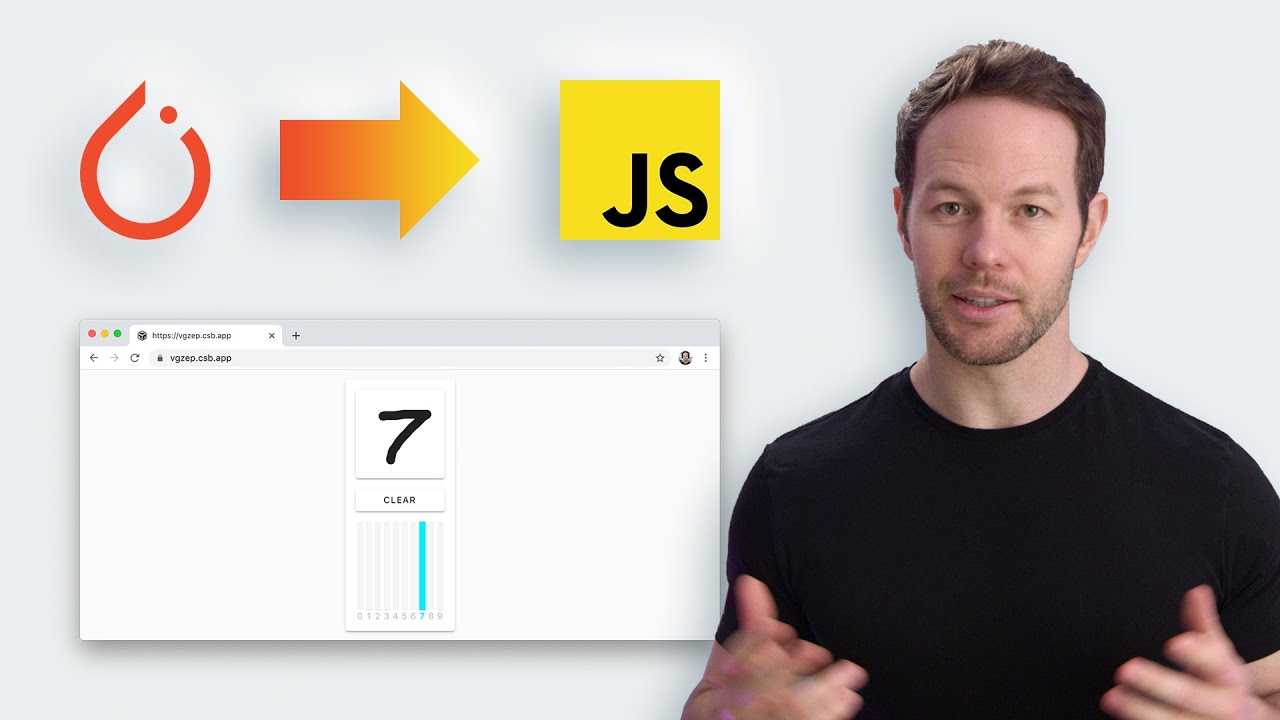

How to Run PyTorch Models in the Browser With ONNX.js

Run PyTorch models in the browser with JavaScript by first converting your PyTorch model into the ONNX format and then loading that ONNX model into your website or app using ONNX.js. In this video, I take you through this process by building a handwritten digit recognizer that runs in the browser.
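On the browser side, most of the glue code around ONNX.js does two things. It turns the user's drawing into the model's input, and it turns the model's output back into a digit. Below is a minimal TypeScript sketch of those two steps. It assumes an MNIST-style model that takes a `[1, 1, 28, 28]` float32 input and returns 10 class scores. The names `rgbaToInput` and `argmax` are hypothetical, and the preprocessing shown (channel average scaled to [0, 1]) must be changed to match whatever normalization your model was actually trained with.

```typescript
// Hypothetical helpers for a browser digit recognizer (not the video's code).
// Assumption: the exported ONNX model expects a [1, 1, 28, 28] float32 tensor
// (MNIST-style grayscale) and outputs 10 scores, one per digit.

const SIZE = 28; // assumed input width and height in pixels

/**
 * Converts RGBA pixel data (for example, from getImageData on a canvas that is
 * already scaled to 28x28) into a flat Float32Array in NCHW order.
 * Assumes light strokes on a dark background, as in MNIST; invert otherwise.
 * Each pixel becomes the mean of its R, G, B channels scaled to [0, 1];
 * the alpha channel is ignored.
 */
function rgbaToInput(rgba: Uint8ClampedArray): Float32Array {
  if (rgba.length !== SIZE * SIZE * 4) {
    throw new Error(`expected ${SIZE * SIZE * 4} bytes, got ${rgba.length}`);
  }
  const input = new Float32Array(SIZE * SIZE);
  for (let i = 0; i < SIZE * SIZE; i++) {
    const r = rgba[i * 4];
    const g = rgba[i * 4 + 1];
    const b = rgba[i * 4 + 2];
    input[i] = (r + g + b) / 3 / 255;
  }
  return input;
}

/** Returns the index of the largest score, i.e. the predicted digit. */
function argmax(scores: ArrayLike<number>): number {
  if (scores.length === 0) {
    throw new Error("scores must not be empty");
  }
  let best = 0;
  for (let i = 1; i < scores.length; i++) {
    if (scores[i] > scores[best]) {
      best = i;
    }
  }
  return best;
}
```

In a page, the array from `ctx.getImageData(0, 0, 28, 28).data` would go through `rgbaToInput`, typically be wrapped as `new onnx.Tensor(input, "float32", [1, 1, 28, 28])`, and be passed to `session.run([tensor])` on an ONNX.js `InferenceSession` loaded with `loadModel`; `argmax` would then be applied to the first output tensor's `data`. The ONNX.js calls are kept out of the block so it runs without the library or a model file.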

Live demo:

The demo sandbox code:

The GitHub repo:

I opened these issues for the bugs mentioned in the video if you want to track their status:

The benefits of running a model in the browser:

• Faster inference with smaller models (no network round trip to a server).

• Easy to host and scale (only static files).

• Offline support.

• User privacy (can keep the data on the device).

The benefits of using a backend server:

• Faster load times (users don't have to download the model).

• Faster, more consistent inference times with larger models (the server can take advantage of GPUs or other accelerators).

• Model privacy (you don't have to ship your model to users if you want to keep it private).

Join our Discord community:

Connect with me:

🎵 Kazukii - Return