MAMBA AI (S6): Better than Transformers?

MAMBA (S6) is a simplified neural network architecture for sequence modelling built on selective state space models (SSMs). It is designed to be a more efficient and powerful alternative to Transformer models (such as current LLMs and VLMs), particularly for long sequences: its compute grows linearly with sequence length, whereas Transformer self-attention grows quadratically. It is an evolution of the earlier S4 models.
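
To make the underlying recurrence concrete, here is a minimal sketch of a time-invariant (S4-style) state space model in pure Python. It assumes a single input channel, a diagonal state matrix A = diag(a) and zero-order-hold discretization; the function names and parameter values are hypothetical illustrations, not the S4 or Mamba code. The same discretized parameters are reused at every step, and the single pass over the tokens costs O(L·N) for sequence length L and state size N.

```python
import math
from typing import List, Sequence, Tuple


def discretize_zoh(a: Sequence[float], b: Sequence[float],
                   delta: float) -> Tuple[List[float], List[float]]:
    # Zero-order hold for a diagonal A = diag(a) (each a_n < 0 for stability):
    #   abar_n = exp(delta * a_n),  bbar_n = (exp(delta * a_n) - 1) / a_n * b_n
    abar = [math.exp(delta * an) for an in a]
    bbar = [(math.exp(delta * an) - 1.0) / an * bn for an, bn in zip(a, b)]
    return abar, bbar


def lti_ssm_scan(x: Sequence[float], a: Sequence[float], b: Sequence[float],
                 c: Sequence[float], delta: float) -> List[float]:
    # Time-invariant SSM: the same abar, bbar, c are used at every step.
    #   h_t = abar * h_{t-1} + bbar * x_t,   y_t = sum_n c_n * h_t[n]
    abar, bbar = discretize_zoh(a, b, delta)
    h = [0.0] * len(a)
    ys = []
    for xt in x:  # one pass over the sequence: O(L * N) work
        h = [ab * hn + bb * xt for ab, hn, bb in zip(abar, h, bbar)]
        ys.append(sum(cn * hn for cn, hn in zip(c, h)))
    return ys


if __name__ == "__main__":
    # Hypothetical toy parameters: state size N = 2, one input channel.
    impulse = [1.0, 0.0, 0.0, 0.0]
    print(lti_ssm_scan(impulse, a=[-1.0, -2.0], b=[1.0, 1.0],
                       c=[0.5, -0.25], delta=0.1))
```

Because nothing in this loop depends on the input values, the model treats every token the same way; that fixed behaviour is what the selective version changes.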

By making the SSM parameters input-dependent (in Mamba, the step size Δ and the matrices B and C are computed from each input token), MAMBA can selectively focus on relevant information in a sequence, enhancing its modelling capability.
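
The selection mechanism can be sketched in the same style. In this toy version (a hypothetical parameterization with one input channel and a diagonal A; `selective_scan`, `w_delta`, `b_delta`, `w_b` and `w_c` are illustrative names, not a library API), the step size delta_t goes through a softplus and delta_t, B_t and C_t are all recomputed from the current input x_t. It uses exp(delta_t·a) for the state transition and the simpler delta_t·B_t for the input term. It is a plain sequential loop, not the hardware-aware parallel scan used in practice.

```python
import math
from typing import List, Sequence


def softplus(z: float) -> float:
    # Numerically stable log(1 + exp(z)); keeps the step size delta_t positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(x: Sequence[float], a: Sequence[float],
                   w_delta: float, b_delta: float,
                   w_b: Sequence[float], w_c: Sequence[float]) -> List[float]:
    # Toy selective SSM: delta_t, B_t and C_t are recomputed from x_t at every
    # step (hypothetical linear projections w_delta, b_delta, w_b, w_c).
    # A stays fixed; the transition exp(delta_t * a) depends on x_t via delta_t.
    h = [0.0] * len(a)
    ys = []
    for xt in x:
        delta = softplus(w_delta * xt + b_delta)  # input-dependent step size
        b_t = [wb * xt for wb in w_b]             # input-dependent B_t
        c_t = [wc * xt for wc in w_c]             # input-dependent C_t
        # State transition exp(delta * a); input term uses the simpler delta * B_t.
        h = [math.exp(delta * an) * hn + delta * bn * xt
             for an, hn, bn in zip(a, h, b_t)]
        ys.append(sum(cn * hn for cn, hn in zip(c_t, h)))
    return ys


if __name__ == "__main__":
    # Hypothetical parameters: state size N = 2, one input channel.
    print(selective_scan([2.0, 0.0, 1.0], a=[-1.0, -0.5],
                         w_delta=1.5, b_delta=-1.0,
                         w_b=[1.0, 0.5], w_c=[0.3, 0.7]))
```

A small delta_t keeps exp(delta_t·a) close to 1, so the old state is mostly carried over and the current token barely enters it; a large delta_t decays the old state and writes more of the current token. That per-token choice is what "selectively focus" refers to.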

Does it have the potential to disrupt the Transformer architecture on which almost all current AI systems are based?

#aieducation

#insights

#newtechnology

Mamba Might Just Make LLMs 1000x Cheaper...

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained)

The Largest Mamba LLM Experiment Just Dropped

Mamba vs. Transformers: The Future of LLMs? | Paper Overview & Google Colab Code & Mamba Cha...

BEYOND MAMBA AI (S6): Vector FIELDS

Mamba, SSMs & S4s Explained in 16 Minutes

Mamba - a replacement for Transformers?

Deep dive into how Mamba works - Linear-Time Sequence Modeling with SSMs - Arxiv Dives

JAMBA MoE: Open Source MAMBA w/ Transformer: CODE

How to Fine-Tune Mamba on Your Data

MAMBA LLM for Personalized Medicine?

Mamba architecture intuition | Shawn's ML Notes

Mamba-Palooza: 90 Days of Mamba-Inspired Research with Jason Meaux: Part 1

Finally, long-term memory for AI: MAMBA, SSM, S4, S6 & Transformers

MambaByte: Token-Free Language Modeling

Mamba sequence model - part 1

Webinar on Mamba vs Transformer

Mamba part 2 - Can it replace Transformers?

Mamba part 4 - System Details and Implementation

DJI T40 - I just wants to land, let me land #t40 #dji

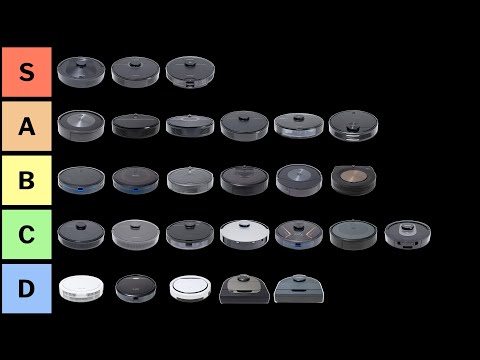

The Best Robot Vacuum Tier List

Back to FreeFire🔥with Samsung Galaxy S23 Ultra❤️

The State Space Model Revolution, with Albert Gu
