filmov.tv

Not enough data for deep learning? Try this with your #Python code #shorts

Data augmentation can be a quick way to generate extra training data for deep learning when your dataset is too small.
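The video's own code isn't included in this description, so here is a minimal sketch of the idea using only the Python standard library. Everything in it is an assumption made for illustration: the helper names `augment` and `augment_dataset`, the toy 4x4 grayscale "images", and the choice of transforms (a random horizontal flip and a small brightness shift). Treat it as one possible way to do it, not the method shown in the video.

```python
import random


def augment(image, rng):
    """Return a randomly altered copy of a grayscale image.

    `image` is a list of rows, each a list of floats in [0, 1].
    """
    # Random horizontal flip: mirror each row with probability 0.5.
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]
    else:
        image = [row[:] for row in image]
    # Small random brightness shift, clipped back into [0, 1].
    delta = rng.uniform(-0.1, 0.1)
    return [[min(1.0, max(0.0, px + delta)) for px in row] for row in image]


def augment_dataset(images, labels, copies, seed=0):
    """Keep the originals and add `copies` augmented versions of each image."""
    rng = random.Random(seed)
    out_images, out_labels = list(images), list(labels)
    for _ in range(copies):
        for img, lbl in zip(images, labels):
            out_images.append(augment(img, rng))
            # These transforms do not change what the image shows,
            # so the augmented copy keeps the original label.
            out_labels.append(lbl)
    return out_images, out_labels


# Hypothetical toy data: ten 4x4 "images" with alternating labels.
data_rng = random.Random(42)
images = [[[data_rng.random() for _ in range(4)] for _ in range(4)]
          for _ in range(10)]
labels = [i % 2 for i in range(10)]

aug_images, aug_labels = augment_dataset(images, labels, copies=3)
print(len(images), "->", len(aug_images))  # 10 -> 40
```

In a real project you would more likely work with arrays and the augmentation utilities of your deep learning framework, but the principle is the same: apply label-preserving transforms to the samples you already have so the model sees more varied examples.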

Oh, and don't forget to connect with me!

Happy coding!

Nick

P.S. Let me know how you go and drop a comment if you need a hand!

Deep Learning With Just a Little Data

NLP Deep Dive: How much data do you ACTUALLY need for an NLP project?

Starting deep learning projects without enough labelled data|Sergei Smirnov| DSC Europe 2022

Tabular Data: Deep Learning is Not All You Need, Dr. Ravid Shwartz-Ziv

A2 8 Ateret Anaby Tavor, Do not have enough data? Deep learning for the rescue!

Kagi: Is Paying for a Search Engine Worth It?

CuttingEEG2021 Alexandre Gramfort. Boosting EEG data analysis with deep learning.

A Resource Efficient Distributed Deep Learning Method without Sensitive Data Sharing | MIT

19FORCE Colwell Quantifying Data Needs for Deep Feed forward Neural Network Application in Reservoir

Sam Altman Stunned As Company 'LEAKS' GPT-5 Details Early...

Data Augmentation (Deep Learning vs Machine Learning) | A Short Guide

If you're struggling to learn to code, you must watch this

Training data for deep learning: what is needed? - Ben Glocker

Codeless Deep Learning for Sequential Data

Pytorch Data Augmentation for CNNs: Pytorch Deep Learning Tutorial

Break into Deep Learning for Image Data without Code

Ben Nachman: Extracting the most from collider data with deep learning

Why Scale Images in Deep Learning | Data Science Interview Questions | Machine Learning

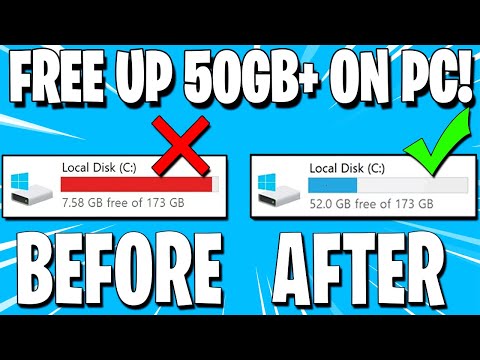

How to FREE Up Disk Space on Windows 10, 8 or 7! 🖥️ More than 50GB+!

The Importance Of Training Data In Deep Learning

MACHINE LEARNING algorithms a junior data scientist should learn (Deep Learning is not on the list)

AI BOMBSHELLS: Tesla Q3 AI Deep Dive

Deep Reinforcement Learning with Real-World Data
