Open Problems in Mechanistic Interpretability: A Whirlwind Tour

A Google TechTalk, presented by Neel Nanda, 2023/06/20

Google Algorithms Seminar - ABSTRACT: Mechanistic Interpretability is the study of reverse engineering the learned algorithms in a trained neural network, in the hopes of applying this understanding to make powerful systems safer and more steerable. In this talk Neel will give an overview of the field, summarise some key works, and outline what he sees as the most promising areas of future work and open problems. This will touch on techniques in causal abstraction and mediation analysis, understanding superposition and distributed representations, model editing, and studying individual circuits and neurons.

About the Speaker: Neel works on the mechanistic interpretability team at Google DeepMind. He previously worked with Chris Olah at Anthropic on the transformer circuits agenda, and has done independent work on reverse-engineering modular addition and using this to understand grokking.

Open Problems in Mechanistic Interpretability: A Whirlwind Tour

Open Problems in Mechanistic Interpretability: A Whirlwind Tour | Neel Nanda | EAGxVirtual 2023

Concrete Open Problems in Mechanistic Interpretability: Neel Nanda at SERI MATS

Concrete open problems in mechanistic interpretability | Neel Nanda | EAG London 23

Neel Nanda on Avoiding an AI Catastrophe with Mechanistic Interpretability

Neel Nanda: Mechanistic Interpretability & Mathematics

Neel Nanda on mechanistic interpretability #artificialintelligence #gpt

Mechanistic Interpretability - Stella Biderman | Stanford MLSys #70

A Walkthrough of Progress Measures for Grokking via Mechanistic Interpretability: Why? (Part 3/3)

A Walkthrough of Progress Measures for Grokking via Mechanistic Interpretability: How? (Part 2/3)

What is mechanistic interpretability? Neel Nanda explains.

The Surprising World of Mechanistic Interpretability Unlocking AI Secrets

What Do Neural Networks Really Learn? Exploring the Brain of an AI Model

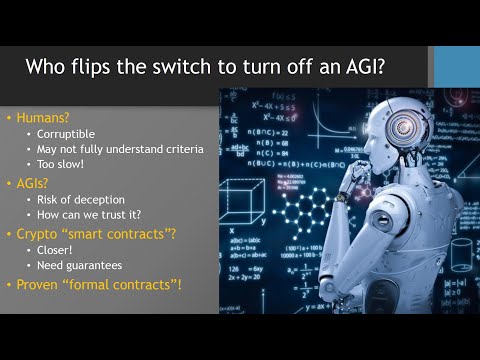

Provably Safe AGI - MIT Mechanistic Interpretability Conference - May 7, 2023

Max Tegmark to Keeping AI under control through mechanistic interpretability

Mechanistic Interpretability: The Key to Truly Transparent AI Models

Will thermodynamics be useful in mechanistic Interpretability?

0L - Theory [rough early thoughts]

Mechanistic Interpretability — The Most Accessible Way to Save the World?

Unleashing the Power of Physicists Keeping AI in Check with Mechanistic Interpretability

Interpretability Hackathon 2.0 Keynote - Neel Nanda

Chris Olah - Looking Inside Neural Networks with Mechanistic Interpretability

Demystifying LLMs with Mechanistic Interpretability Researcher Arthur Conmy

A Whirlwind Tour of Mechanistic Interpretability - Neel Nanda

Комментарии

0:55:27

0:55:27

0:51:03

0:51:03

1:26:48

1:26:48

0:52:40

0:52:40

1:01:40

1:01:40

0:56:33

0:56:33

0:00:59

0:00:59

0:55:27

0:55:27

0:46:08

0:46:08

0:40:08

0:40:08

0:03:13

0:03:13

0:00:21

0:00:21

0:17:35

0:17:35

0:15:30

0:15:30

0:00:59

0:00:59

0:07:43

0:07:43

0:01:01

0:01:01

0:03:02

0:03:02

0:20:48

0:20:48

0:00:27

0:00:27

1:07:55

1:07:55

0:40:59

0:40:59

2:13:07

2:13:07

1:44:38

1:44:38