LLM Chat App in Python w/ Ollama-py and Streamlit

In this video I walk through the new Ollama Python library and use it to build a chat app with a UI powered by Streamlit. After reviewing some important methods from this library, I touch on Python generators as we construct our chat app, step by step.
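
For readers who have not met generators yet, here is a minimal sketch of the idea in plain Python. The function `fake_token_stream` is a hypothetical stand-in for a model's streamed output, not something taken from the video or from the Ollama library; it only shows that a generator hands back one piece at a time and does no work until asked.

```python
from typing import Iterator


def fake_token_stream(text: str) -> Iterator[str]:
    """Yield `text` one whitespace-separated piece at a time.

    Hypothetical helper: it imitates a model that streams its answer
    instead of returning it all at once.
    """
    for word in text.split():
        yield word + " "


stream = fake_token_stream("Hello from a generator")

# next() runs the function body only up to the first `yield`.
first = next(stream)

# Iterating over the generator consumes whatever pieces are left.
rest = "".join(stream)

print(repr(first))
print(repr(rest))
```

Because each piece is produced on demand, a UI can show the first words of a reply while the rest is still being generated.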

Links:

Timestamps:

00:00 - Intro

00:26 - Why not use the CLI?

01:17 - Looking at the ollama-py library

02:26 - Setting up Python environment

04:05 - Reviewing Ollama functions

04:14 - list()

04:52 - show()

05:44 - chat()

06:55 - Looking at Streamlit

07:59 - Start writing our app

08:51 - App: user input

11:16 - App: message history

13:09 - App: adding ollama response

15:00 - App: choosing a model

17:07 - Introducing generators

18:52 - App: streaming responses

21:22 - App: review

22:10 - Where to find the code

22:27 - Thank you for 2k
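
The message-history and streaming steps listed above can be sketched roughly as follows. This is not the code from the video: `stream_reply`, `fake_chat`, and the model name `demo-model` are hypothetical names introduced for illustration. The sketch assumes the chat function accepts `model`, `messages`, and `stream=True` and yields chunks whose text sits under `chunk["message"]["content"]`, which is the shape the Ollama Python library documents for streamed chat responses. A fake chat function is injected so the example runs without a local Ollama server.

```python
from typing import Callable, Dict, Iterator, List

Message = Dict[str, str]


def stream_reply(chat_fn: Callable[..., Iterator[dict]],
                 model: str,
                 history: List[Message]) -> Iterator[str]:
    """Yield the text of each streamed chunk as it arrives.

    `chat_fn` is assumed to behave like a streaming chat call:
    it takes model/messages/stream and yields dict-like chunks.
    """
    for chunk in chat_fn(model=model, messages=history, stream=True):
        yield chunk["message"]["content"]


def fake_chat(model: str, messages: List[Message], stream: bool) -> Iterator[dict]:
    """Hypothetical stand-in for a real chat call: echoes the last message in two chunks."""
    last = messages[-1]["content"]
    yield {"message": {"role": "assistant", "content": "You said: "}}
    yield {"message": {"role": "assistant", "content": last}}


# The history is a list of role/content dicts, oldest message first.
history: List[Message] = [{"role": "user", "content": "hi"}]

# Join the streamed pieces, then store the full reply so the next turn sees it.
reply = "".join(stream_reply(fake_chat, "demo-model", history))
history.append({"role": "assistant", "content": reply})

print(reply)
```

With the real library installed, `ollama.chat` could be passed in place of `fake_chat`. In a Streamlit script, the history would typically live in `st.session_state` so it survives reruns, and the generator could be handed to `st.write_stream` (assuming a Streamlit version that provides it) to render the chunks as they arrive.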

How to use the Llama 2 LLM in Python

Chat with Multiple PDFs | LangChain App Tutorial in Python (Free LLMs and Embeddings)

End To End LLM Conversational Q&A Chatbot With Deployment

Build Python LLM apps in minutes Using Chainlit ⚡️

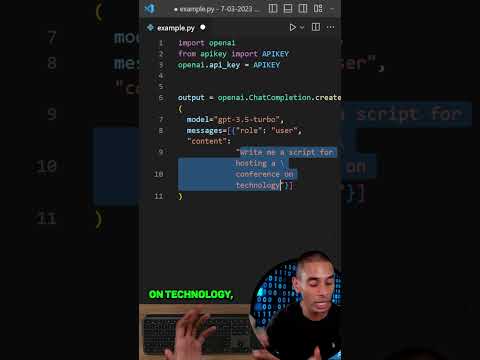

ChatGPT in Python for Beginners - Build A Chatbot

Create a LOCAL Python AI Chatbot In Minutes Using Ollama

Chainlit CrashCourse - Build LLM ChatBot with Chainlit and Python & GPT

LLM Function Calling (Tool Use) with Llama 3 | Tool Choice, Argument Mapping, Groq Llama 3 Tool Use

Build Chat Apps with Streamlit Chat Elements and GPT4All (LLM Apps)

Build LLM app with Streamlit and Ollama Python

Using ChatGPT with YOUR OWN Data. This is magical. (LangChain OpenAI API)

Build & Integrate your own custom chatbot to a website (Python & JavaScript)

Intelligent AI Chatbot in Python

2-Langchain Series-Building Chatbot Using Paid And Open Source LLM's using Langchain And Ollama

Build the fastest AI Chatbot using Groq Chat: Insane LLM Speed 🔥

How To Use Llama LLM in Python Locally

Langchain: PDF Chat App (GUI) | ChatGPT for Your PDF FILES | Step-by-Step Tutorial

Langchain PDF App (GUI) | Create a ChatGPT For Your PDF in Python

Build your own LLM chatbot from scratch | End to End Gen AI | End to End LLM | Mistrak 7B LLM

Building a LLM Chatbot using Google's Gemini Pro with Streamlit Python | Gen AI #geminipro #cha...

Build a multi-LLM chat application with Azure Container Apps

How to use the ChatGPT API with Python!!

Chat with a CSV | LangChain Agents Tutorial (Beginners)
