filmov

tv

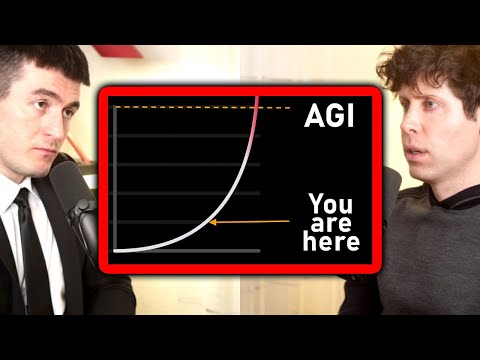

AGI (General AI) - the Last Invention & Sooner Than Expected?

Hello Beyonders!

AGI has gained mainstream attention in recent years thanks to advances in AI. The concept has its supporters, critics, and skeptics. We cover the basic landscape of AGI and what its trajectory may be.

Looking forward to your comments.

Chapters:

00:00 Introduction

01:24 Supervision of AGI

03:10 The Advocates

03:40 Project Attempts

06:07 Singularity Skeptics

Follow us 😊

#AGI #ArtificialGeneralIntelligence #SuperAI #GeneralAI

Artificial General Intelligence (AGI) Simply Explained

The Exciting, Perilous Journey Toward AGI | Ilya Sutskever | TED

OpenAI CEO: When will AGI arrive? | Sam Altman and Lex Fridman

8 Use Cases for Artificial General Intelligence (AGI)

How Sam Altman Defines AGI

AGI (General AI) - the Last Invention & Sooner Than Expected?

AI Won't Be AGI, Until It Can At Least Do This (plus 6 key ways LLMs are being upgraded)

What Will Happen When We Reach The Level of AGI? (7 Shocking Things)

Do you know about advanced AI #ai #science

Is the Intelligence-Explosion Near? A Reality Check.

We’re Close to AGI | Artificial General Intelligence DOCUMENTARY #agi #artificialintelligence #ai

Zuckerberg's BOMBSHELL Interview: (AGI + AI AGENTS)

Ben Goertzel: AGI, SingularityNET and Decentralized AI

AGI Is Already Achieved SECRETLY | AI for Everyone Episode 2

Introducing AIRIS – The World’s First Self-Learning Proto AGI

The Last 6 Decades of AI — and What Comes Next | Ray Kurzweil | TED

This is the dangerous AI that got Sam Altman fired. Elon Musk, Ilya Sutskever.

Deadly Truth of General AI? - Computerphile

Artificial General Intelligence (AGI) | Difference Between AI And AGI | AGI Explained | Simplilearn

Googles AI CEO Just Revealed AGI Details...

OpenAI's NEW 'AGI Robot' STUNS The ENITRE INDUSTRY (Figure 01 Breakthrough)

Mark Zuckerberg's timeline for AGI: When will it arrive? | Lex Fridman Podcast Clips

Degenerative AI… The recent failures of 'artificial intelligence' tech

AGI in 7 Months! Gemini, Sora, Optimus, & Agents - It's about to get REAL WEIRD out there!
