Introduction to Numerical Optimization Gradient Descent - 1

Lecture 20

Intro to Gradient Descent || Optimizing High-Dimensional Equations

Gradient Descent in 3 minutes

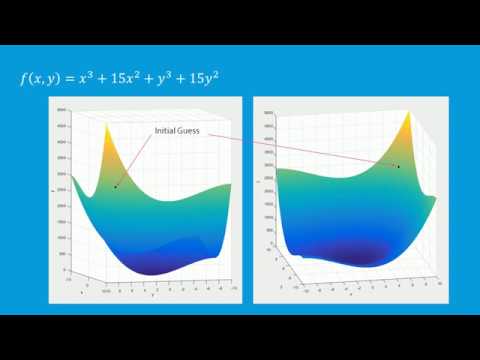

Introduction to Numerical Optimization Gradient Descent - 1

Introduction To Optimization: Gradient Based Algorithms

Visually Explained: Newton's Method in Optimization

Introduction To Optimization: Gradient Free Algorithms (1/2) - Genetic - Particle Swarm

Numerical Optimization - Gradient Descent

Gradient Descent Explained

Mastering Neural Networks and Deep Learning: Build, Train, and Optimize AI Models

Gradient Descent, Step-by-Step

Introduction to Numerical Optimization

Optimization Basics

Follow the gradient: an introduction to mathematical optimisation - Gianluca Campanella

Numerical Optimization Algorithms: Gradient Descent

Introduction to Optimization . Part 3 - Gradient-Based Optimization

What Is Mathematical Optimization?

Applied Optimization - Gradients

Optimization in Machine Learning - First order methods - Gradient descent

Introduction to Optimization . Part 5 - Gradient-Based Algorithms

Gradient descent simple explanation|gradient descent machine learning|gradient descent algorithm

From Bioinformatics to AI: 9. Gradient-based Numerical Optimization

Gradient based optimisation

Numerical Optimization

EuroSciPy 2023 - Introduction to numerical optimization

0:11:04

0:03:06

0:22:54

0:05:27

0:11:26

0:05:25

0:06:56

0:07:05

2:17:41

0:23:54

0:08:08

0:08:05

1:27:37

0:38:11

0:27:39

0:11:35

0:16:58

0:17:13

0:30:22

0:15:39

0:59:33

0:27:48

0:00:23

1:35:55