Neural Networks Expressivity through the lens of Dynamical Systems


Title: Neural Networks Expressivity through the lens of Dynamical Systems

Speaker: Vaggos Chatziafratis (UC Santa Cruz)

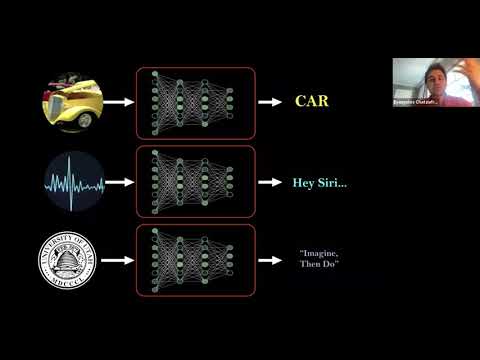

Abstract. Given a target function f, how large must a neural network be in order to approximate f? Understanding the representational power of deep neural networks (DNNs), and how their structural properties (e.g., depth, width, type of activation unit) affect the functions they can compute, has been an important yet challenging question in approximation theory and deep learning since the early days of AI.

In this talk, I want to tell you about some recent progress on this topic that uses ideas from dynamical systems. The main results are exponential depth-width trade-offs for DNNs representing certain families of functions. Our techniques rely on a generalized notion of fixed points, called periodic points, which have played a major role in chaos theory (Li-Yorke chaos and Sharkovsky's theorem).
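To make "periodic point" concrete, here is a minimal Python sketch. It is not taken from the talk: the tent map, the sample points 2/3, 2/5 and 2/7, and the helper names `tent`, `minimal_period` and `count_monotone_pieces` are all illustrative assumptions. A point x has period p under a map f if applying f to x exactly p times returns x for the first time; a fixed point is the case p = 1. The sketch writes the tent map as a single ReLU layer of width 2, checks the period of a few points with exact rational arithmetic, and counts how the number of monotone pieces grows when the map is composed with itself, which is the kind of oscillation that depth-width trade-off arguments typically exploit.

```python
from fractions import Fraction


def relu(x):
    """ReLU on exact rationals."""
    return x if x > 0 else Fraction(0)


def tent(x):
    """Tent map on [0, 1], written as one ReLU layer of width 2.

    Equals 2x for x <= 1/2 and 2(1 - x) for x >= 1/2.
    """
    return 2 * relu(x) - 4 * relu(x - Fraction(1, 2))


def iterate(f, x, n):
    """Apply f to x n times, i.e. evaluate a depth-n composition."""
    for _ in range(n):
        x = f(x)
    return x


def minimal_period(f, x, max_period=16):
    """Smallest p >= 1 with f^p(x) == x, or None if none up to max_period."""
    y = x
    for p in range(1, max_period + 1):
        y = f(y)
        if y == x:
            return p
    return None


def count_monotone_pieces(depth, grid_bits=8):
    """Count monotone pieces of tent^depth on [0, 1].

    Breakpoints of tent^depth sit at multiples of 2**-depth, so sampling a
    dyadic grid with 2**grid_bits cells is exact whenever depth <= grid_bits.
    """
    n = 2 ** grid_bits
    values = [iterate(tent, Fraction(i, n), depth) for i in range(n + 1)]
    rising = [values[i + 1] > values[i] for i in range(n)]
    return 1 + sum(rising[i] != rising[i + 1] for i in range(n - 1))


if __name__ == "__main__":
    for point in (Fraction(2, 3), Fraction(2, 5), Fraction(2, 7)):
        print(point, "has minimal period", minimal_period(tent, point))
    for depth in range(1, 6):
        print("depth", depth, "->", count_monotone_pieces(depth), "pieces")
```

In this toy case the orbit 2/7, 4/7, 6/7 returns to 2/7 after three steps, so 2/7 is a period-3 point, while 2/3 is an ordinary fixed point. The piece count doubles with each extra composition, whereas a single hidden ReLU layer on a scalar input adds at most one new linear piece per unit.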

