Linear Regression in 12 minutes

Linear Regression and Ordinary Least Squares ('OLS') are ancient yet still useful modeling principles. In this video, I introduce these ideas from the typical machine learning perspective: the loss surface. At the end, I explain how basis expansions extend this idea into a flexible and diverse family of models.
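
As a rough illustration of the two ideas named above, here is a minimal sketch in Python with NumPy. It is not code from the video. The helper names (`fit_ols`, `mse`, `poly_basis`), the synthetic data, and the choice of a polynomial basis are all assumptions made for this example. It fits a line and a quadratic by least squares and compares their mean squared error, which is the height of the loss surface at each fitted coefficient vector.

```python
import numpy as np

def fit_ols(X, y):
    """Return the coefficients that minimize the sum of squared residuals."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def mse(X, y, coef):
    """Mean squared error: the value of the loss surface at `coef`."""
    return float(np.mean((X @ coef - y) ** 2))

def poly_basis(x, degree):
    """Expand a 1-D input into columns [1, x, x^2, ..., x^degree]."""
    return np.vander(x, degree + 1, increasing=True)

# Synthetic data (an assumption for illustration): a noisy quadratic.
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 50)
y = 1.0 + 2.0 * x - 3.0 * x**2 + rng.normal(scale=0.1, size=x.shape)

# A straight line is the degree-1 basis; the quadratic adds one more column.
X_line = poly_basis(x, 1)
X_quad = poly_basis(x, 2)

coef_line = fit_ols(X_line, y)
coef_quad = fit_ols(X_quad, y)

loss_line = mse(X_line, y, coef_line)
loss_quad = mse(X_quad, y, coef_quad)

print("line fit:     ", coef_line, "MSE:", loss_line)
print("quadratic fit:", coef_quad, "MSE:", loss_quad)
```

The model stays linear in its coefficients in both cases. Only the columns of the design matrix change, which is why the same least-squares solver handles the curved fit.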

Sources and Learning More

Over the years, I've learned and re-learned these ideas from many sources, so there was no single primary source I referenced when writing. Nonetheless, I confirmed my definitions against the Wikipedia articles [1][2], and chapter 5 of [3] is an informative discussion of basis expansions.

[3] Hastie, T., Tibshirani, R., & Friedman, J. H. (2009). The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd ed. New York: Springer.

Linear Regression in 12 minutes

Linear Regression in 2 minutes

How To... Perform Simple Linear Regression by Hand

Linear Regression Simply Explained | 5 minutes

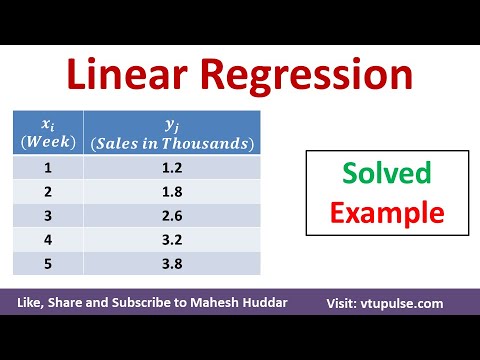

Linear Regression Algorithm – Solved Numerical Example in Machine Learning by Mahesh Huddar

Linear Regression in 7 Minutes

Linear Regression - made easy

[12-min poster] A Bayesian Multivariate Linear Regression on the Effects of Wealth on Well-Being

Revision | Weeks 11 and 12

Lec-4: Linear Regression📈 with Real life examples & Calculations | Easiest Explanation

Linear Regression in 10 Minutes!

Video 1: Introduction to Simple Linear Regression

Correlation and Coefficient of Determination in 3 Minutes

Unbelievable: Learn Linear Regression in Under a Minute! #shorts #ytshorts #viral

Linear Regression Explained in Hindi ll Machine Learning Course

What is Simple Linear Regression in Statistics | Linear Regression Using Least Squares Method

Linear Regression in R under 5 minutes

Simple Linear Regression, hypothesis tests

Simple Linear Regression in Python - sklearn

All Machine Learning Models Explained in 5 Minutes | Types of ML Models Basics

10. Logistic Regression In 12 Minutes Only

Trying transition video for the first time 💙😂 || #transformation #transition #shorts #viralvideo...

Linear Regression Solved Numerical Part-1 Explained in Hindi l Machine Learning Course

700% Profit In ONE Trade Using A Secret Indicator 🤯 #shorts
