Apache Spark - Pandas On Spark | Spark Performance Tuning | Spark Optimization Technique

#apachespark #sparktutorial #pandasonspark

In this video, we look at Pandas on Spark (the pandas API on Spark), a feature released in Spark 3.2.0. A short demo then compares Pandas on Spark with the native pandas library, to show where it fits into Spark performance tuning.
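The video's own demo code is not reproduced on this page, so the following is only a minimal sketch of the idea, assuming PySpark 3.2 or later is installed. The function names `total_by_key` and `demo_on_spark` and the `city`/`sales` columns are hypothetical, chosen for illustration. The point is that the same pandas-style call works on both a native pandas DataFrame and a pandas-on-Spark DataFrame.

```python
def total_by_key(df, key, value):
    """Sum `value` per `key` using only the shared pandas-style API.

    `df` may be a native pandas DataFrame or a pandas-on-Spark DataFrame;
    the groupby/sum/sort_index calls are available on both.
    """
    return df.groupby(key)[value].sum().sort_index()


def demo_on_spark():
    """Run the same aggregation on Spark (hypothetical demo data)."""
    # Imported lazily so the helper above stays usable without PySpark.
    import pyspark.pandas as ps  # available from Spark 3.2.0 onwards

    psdf = ps.DataFrame({"city": ["A", "B", "A"], "sales": [10, 20, 5]})
    # to_pandas() collects the distributed result to the driver.
    return total_by_key(psdf, "city", "sales").to_pandas()
```

With native pandas, the same helper would be called as `total_by_key(pd.DataFrame(...), "city", "sales")`. Whether the Spark version is actually faster depends on data size and cluster resources, which is what a timing demo like the one in the video would measure.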

#pyspark

#apachespark

#azure

#databricks

#dataengineering

#sparkwork

#interview

pyspark interview questions and answers

Spark Dataframe or Pandas Dataframe - When to use Pandas Dataframe vs Spark Dataframe

Master Databricks and Apache Spark Step by Step: Lesson 33 - Goodbye Koalas: Hello Pandas on Spark!

Which is best ? | Spark vs Pandas

PySpark Tutorial

Koalas: Pandas on Apache Spark

Master Databricks and Apache Spark Step by Step: Lesson 32 - Koalas: Pandas on Spark!

Koalas: Pandas on Apache Spark -Tim Hunter, Brooke Wenig, Niall Turbitt (Databricks)

Introduction to Data Science Tools and Software | AIML End-to-End Session 34

How to use Pandas API on Spark 3.3.0 | Pandas API on Spark Tutorial

Koalas: Making an Easy Transition from Pandas to Apache Spark

The BEST library for building Data Pipelines...

Learn Apache Spark in 10 Minutes | Step by Step Guide

Master Databricks and Apache Spark Step by Step: Lesson 27 - PySpark: Coding pandas UDFs

Pandas API on Spark

Koalas on Apache Spark - Pandas API

Koalas: pandas APIs on Apache Spark

What Is Apache Spark?

Accelerated Data Science: Announcing GPU-acceleration for pandas, NetworkX, and Apache Spark MLlib

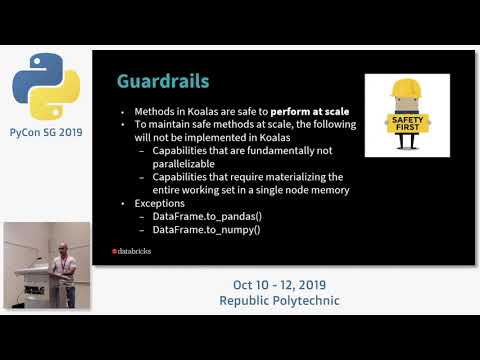

Koalas: Pandas API on Apache Spark - PyCon SG 2019

Pandas-on-Spark vs PySpark DataFrames #Shorts

Ibis: Seamless Transition Between Pandas and Apache Spark

Pandas vs Pyspark speed test !!

🎯PySpark with Pandas UDFs 🎯Tips📕🐍 #python
