Contrastive Learning in PyTorch - Part 2: CL on Point Clouds

▬▬ Papers/Sources ▬▬▬▬▬▬▬

▬▬ Used Icons ▬▬▬▬▬▬▬▬▬▬

▬▬ Used Music ▬▬▬▬▬▬▬▬▬▬▬

Music from Uppbeat (free for Creators!):

License code: KJ7PFP0HB9BWHJOF

▬▬ Timestamps ▬▬▬▬▬▬▬▬▬▬▬

00:00 Introduction

00:22 Errors from last video

01:41 Notebook Setup [CODE]

02:42 Dataset Intro [CODE]

05:07 Augmentations and Bias

06:26 Augmentations [CODE]

09:12 Machine Learning on Point Clouds

11:48 PointNet

13:30 PointNet++

14:32 EdgeConv

15:53 Other Methods

16:09 Model Architecture

17:25 Model Implementation [CODE]

20:11 Training [CODE]

21:05 Batch sizes in CL

22:00 Training (cont.) [CODE]

22:40 Batching in CL

23:15 Training (cont.) [CODE]

24:08 Embedding evaluation

27:00 Outro

▬▬ Support me if you like 🌟

▬▬ My equipment 💻

Contrastive Learning in PyTorch - Part 1: Introduction

Contrastive Learning in PyTorch - Part 2: CL on Point Clouds

Contrastive Learning with SimCLR | Deep Learning Animated

Contrastive Learning - 5 Minutes with Cyrill

Supervised Contrastive Learning

Contrastive learning explained | Ishan Misra and Lex Fridman

Implementation of Supervised Contrastive Learning

Contrastive Learning? MIT Short Answer

CLIP From Scratch 2: Custom Dataset and batch collator

MoCo (+ v2): Unsupervised learning in computer vision

TorchRL: The Reinforcement Learning and Control library for PyTorch

Overfitting test for deep learning in PyTorch Lightning

PyTorch vs TensorFlow | Ishan Misra and Lex Fridman

Akshita finds an issue in PyTorch #machinelearning #ai #podcast

Momentum Contrastive Learning

Can Contrastive Learning Work? - SimCLR Explained

Fixing SimCLRs Main Problem - BYOL Paper Explained

Contrastive Learning

Triplet Loss - Contrastive Learning

Contrastive learning in unsupervised learning with Prannay Khosla

Contrastive Learning in Machine Learning | E20

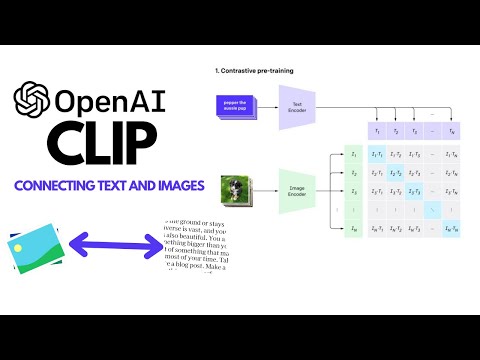

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning

SimCLR with PyTorch Lightning- intro

SimCLR - Implementation in PyTorch / PyTorch Lightning
