Python Programming Tutorial - 25 - How to Build a Web Crawler (1/3)

Python Tutorial 25 - Abstraction in Python

Python Tutorial #25 (deutsch) - Listen Slicing

Python Tutorial 25 - Nested while Loop

25 nooby Python habits you need to ditch

Python Tutorial for Beginners 25 - Python __init__ and self in class

Python Tutorial - Python Full Course for Beginners

Python Tutorial - 25. Command line argument processing using argparse

Why should I learn python?

#25 Python Tutorial for Beginners | Prime Number in Python

Operations on Tuples in Python | Python Tutorial - Day #25

The Ultimate 25 Minute Python Project!

New Python Coders Be Like...

Python for Beginners - Learn Python in 1 Hour

Python for Beginners – Full Course [Programming Tutorial]

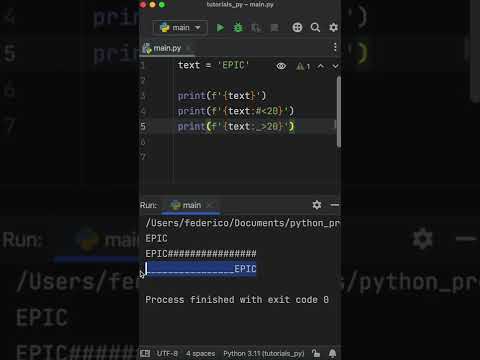

99% Of Python Programmers Never Learned THIS Feature

👩‍💻 Python for Beginners Tutorial

Python 3 Tutorial for Beginners #25 - Decorators

Not enough data for deep learning? Try this with your #Python code #shorts

Learn Python in Less than 10 Minutes for Beginners (Fast & Easy)

Learn Python - Full Course for Beginners [Tutorial]

Make Netflix Logo in Python

Escape sequence characters in Python | Python Tutorial #25

25. Decorators [Python 3 Programming Tutorials]
