286 - Object detection using Mask RCNN: end-to-end from annotation to prediction


Code generated in the video can be downloaded from here:

All other code:

This video walks through an end-to-end Mask RCNN project, from annotation through training to prediction. It demonstrates how to handle both VGG-style and COCO-style JSON annotations, and code is provided for both approaches.
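To make the difference between the two annotation styles concrete, here is a minimal sketch that reads polygon annotations from a VGG (VIA) style export and from a COCO style export into one common structure. It is not the code from the video. The function names, the sample data, and the `label_key` default of `"name"` are assumptions for illustration; in VIA the attribute that holds the class label is whatever the annotator defined.

```python
from collections import defaultdict


def polygons_from_vgg(via: dict, label_key: str = "name") -> dict:
    """Map filename -> list of (label, [(x, y), ...]) from a VIA-style dict.

    label_key is an assumption: VIA lets the annotator name this attribute.
    """
    out = defaultdict(list)
    for entry in via.values():
        regions = entry.get("regions", [])
        # Older VIA exports store regions as a dict keyed by index,
        # newer ones as a list; normalise to a list.
        if isinstance(regions, dict):
            regions = list(regions.values())
        for region in regions:
            shape = region["shape_attributes"]
            if shape.get("name") != "polygon":
                continue  # this sketch handles polygons only
            points = list(zip(shape["all_points_x"], shape["all_points_y"]))
            label = region.get("region_attributes", {}).get(label_key, "object")
            out[entry["filename"]].append((label, points))
    return dict(out)


def polygons_from_coco(coco: dict) -> dict:
    """Map filename -> list of (label, [(x, y), ...]) from a COCO-style dict."""
    names = {c["id"]: c["name"] for c in coco["categories"]}
    files = {im["id"]: im["file_name"] for im in coco["images"]}
    out = defaultdict(list)
    for ann in coco["annotations"]:
        seg = ann["segmentation"]
        if not isinstance(seg, list):
            continue  # RLE-encoded masks are skipped in this sketch
        for flat in seg:
            # COCO polygons are flat lists: [x1, y1, x2, y2, ...]
            points = list(zip(flat[0::2], flat[1::2]))
            out[files[ann["image_id"]]].append((names[ann["category_id"]], points))
    return dict(out)


# Hypothetical sample data describing the same triangle in both styles.
vgg_sample = {
    "a.jpg123": {
        "filename": "a.jpg",
        "size": 123,
        "regions": [{
            "shape_attributes": {
                "name": "polygon",
                "all_points_x": [1, 5, 5],
                "all_points_y": [1, 1, 4],
            },
            "region_attributes": {"name": "marble"},
        }],
    }
}
coco_sample = {
    "images": [{"id": 1, "file_name": "a.jpg"}],
    "categories": [{"id": 1, "name": "marble"}],
    "annotations": [{
        "image_id": 1,
        "category_id": 1,
        "segmentation": [[1, 1, 5, 1, 5, 4]],
    }],
}

vgg_polygons = polygons_from_vgg(vgg_sample)
coco_polygons = polygons_from_coco(coco_sample)
```

With real files, the dictionaries would come from `json.load` on the exported annotation file. Normalising both styles to the same structure means the later mask-generation step only needs to be written once.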

You can try other annotation tools like:


Mask R-CNN for Object Detection

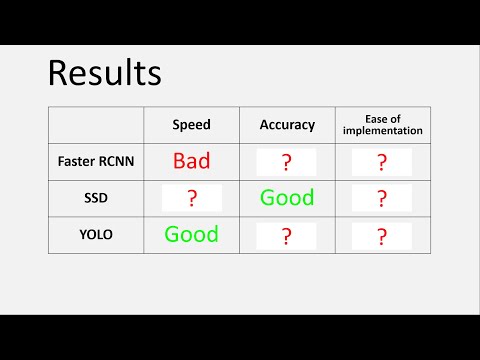

Object Detection best model / best algorithm in 2023 | YOLO vs SSD vs Faster-RCNN comparison Python

285 - Object detection using Mask RCNN (with XML annotated data)

MMDetection Pytorch Object Detection Toolbox Explanation and Python Demo #computervision

Train Mask R-CNN for Image Segmentation (online free gpu)

Mask R-CNN Object Segmentation in Python

COCO Dataset Structure | Understanding Bounding Box Annotations for Object Detection

Object Detection using PyTorch for images using Faster RCNN | PyTorch object detection in colab

Train custom object detector with Detectron2 | Computer vision tutorial

Object Detection using RCNN with Python Programming Language

MMDetection: Object Detection with PyTorch

Object Detection using the COCO dataset

SURGICAL INSTRUMENT DETECTION

Image Classification and Object Detection in R using just TWO lines of code!

Object detection with Machine Learning

Tensorflow 2 Custom Object Detection Model (Google Colab and Local PC)

Book Recognition With Mask RCNN (Multi Classes)

Instance Segmentation MASK R-CNN | with Python and Opencv

Faster-RCNN finetuning with PyTorch. Object detection using PyTorch. Custom dataset. Wheat detection

Modified Faster R-CNN model for Autonomous Driving's Object Detection

L-6 | Object Detection Using Faster-RCNN

Image tagging || Custom object Detection#dailyblogger #machinelearning

