filmov.tv

BIGGEST integer in Python!? #python #programming #coding #computerscience


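Do you know what the largest integer in Python is? Hint: it's very, very big, probably bigger than you can imagine! Python's int type has arbitrary precision, so the only practical limit is the memory available to the interpreter.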
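As a quick illustration (a minimal sketch, not taken from the video), the snippet below contrasts sys.maxsize, which is often mistaken for "the biggest int", with Python's arbitrary-precision int. The helper names exceeds_maxsize and power_of_two_bits are hypothetical, chosen only for this example.

```python
import sys


def exceeds_maxsize() -> bool:
    """Show that an int keeps growing past sys.maxsize instead of overflowing."""
    # sys.maxsize is the largest Py_ssize_t (used for container sizes and
    # indices); it is not an upper bound on the value of an int.
    bigger = sys.maxsize + 1
    return bigger > sys.maxsize


def power_of_two_bits(exponent: int) -> int:
    """Build 2**exponent and report how many bits it needs."""
    # Arbitrary precision: memory is the only practical limit on the result.
    return (2 ** exponent).bit_length()


if __name__ == "__main__":
    print(sys.maxsize)                 # 9223372036854775807 on a typical 64-bit build
    print(exceeds_maxsize())           # True
    print(power_of_two_bits(256))      # 257
    print(power_of_two_bits(100_000))  # 100001
```

One caveat: since Python 3.11 (and some security releases of earlier versions), converting an int with more than 4,300 decimal digits to str raises ValueError by default, and sys.set_int_max_str_digits() adjusts that limit. That is why the sketch reports bit_length() instead of printing 2 ** 100_000 directly.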

Subscribe & like for more coding, programming, and Python content!


Finding Min & Max in the #list in #python

Python - Find The Largest Number In A List

Largest Number - Leetcode 179 - Python

Find 2nd largest value in given array- Interview Python Question

Frequently Asked Python Program 18:Find Smallest & Largest Numbers in a List

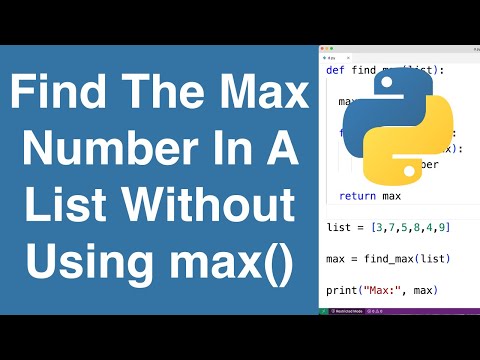

Find The Maximum Number In A List Without Using max() | Python Example

Python Program to Find the Largest and Smallest Element / Number Present in a List

Second Largest number in a Python List

2566. Maximum Difference by Remapping a Digit | Leetcode Daily - Python

Python Example Program to find the Largest among 3 numbers entered by the User

Kth Largest Element in a Stream - Leetcode 703 - Python

Maximum Number of Balloons - Leetcode 1189 - Python

A Simple Program to Find Greatest Among Two Numbers Using IDLE(Python )

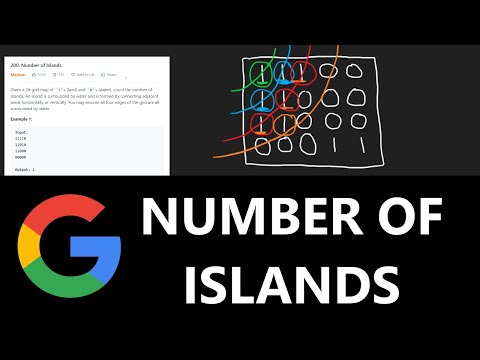

NUMBER OF ISLANDS - Leetcode 200 - Python

Frequently Asked Python Program 6: Find Maximum & Minimum Elements in an Array

That’s a big one #snake#youtubeshorts#florida#wildlife

QuickTip #161 - Python Tutorial - Largest Number In Array | Print | Max Value

Maximum Subarray - Amazon Coding Interview Question - Leetcode 53 - Python

1432. Max Difference You Can Get From Changing an Integer | Leetcode Daily - Python

Longest Substring Without Repeating Characters - Leetcode 3 - Python

Human Calculator Solves World’s Longest Math Problem #shorts

Can you even imagine 2^256?

Generate 5 different random integers in Python #Shorts

Eliminate Maximum Number of Monsters - Leetcode 1921 - Weekly Contest 248 - Python
