Is DeepFake Really All That? - Computerphile

How much of a problem is DeepFake, the ability to swap people's faces around? Dr Mike Pound decided to try it with colleague Dr Steve Bagley.

This video was filmed and edited by Sean Riley.

Is DeepFake Really All That? - Computerphile

Deepfake AI – How Dangerous Is It? #shorts #deepfake

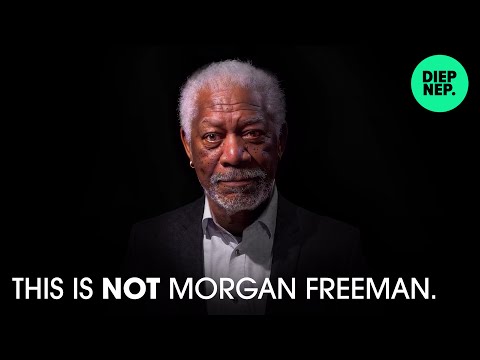

This is not Morgan Freeman - A Deepfake Singularity

Very realistic Tom Cruise Deepfake | AI Tom Cruise

TOM CRUISE & PARIS HILTON #deepfake #tomcruise #paris

How To Spot a Deepfake? Here are Two Simple Tricks #shorts #deepfake

Ultra realistic Deepfake of Elon Musk

Unmask The DeepFake: Defending Against Generative AI Deception

Experts Issue Deepfake Alert Ahead of Election Day | Fox 26 Houston

AI Deepfakes are getting scary… #ai #deepfake

How Can You Identify A Deepfake? Are All Deepfakes Scams? - Is It Fake? Part 1/2 | Talking Point

Is Elon Musk actually a deepfake?? #tech #shorts

What is a Deepfake?

What are deepfakes and are they dangerous? | Start Here

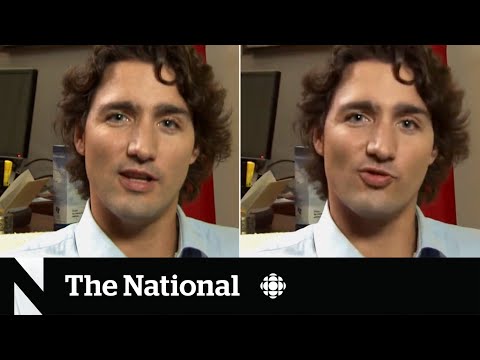

Can you spot the deepfake? How AI is threatening elections

Deepfake: Is it Dangerous? | Pros of Generative AI | Artificial Intelligence | Microsoft | #shorts

what would you do if you found deepfakes of yourself? #shorts #deepfake #nihachu

What is a deepfake?

RDJ threatens Hollywood

Beware of Deep Fake Propaganda #DeepFake #TrumpIndictment #Propaganda

Will deepfake technology destroy the world?🤯😩 #deepfake

I can't believe THIS Tom Cruise was a Deepfake A.I

I Challenged My AI Clone to Replace Me for 24 Hours | WSJ

What is deepfake?

0:12:30

0:00:39

0:01:04

0:01:38

0:00:36

0:00:42

0:00:15

0:14:46

0:03:57

0:00:29

0:23:21

0:00:30

0:00:55

0:07:45

0:07:08

0:01:00

0:00:52

0:00:17

0:00:49

0:00:59

0:00:53

0:00:34

0:07:34

0:00:44