How We Scaled Bert To Serve 1+ Billion Daily Requests on CPU

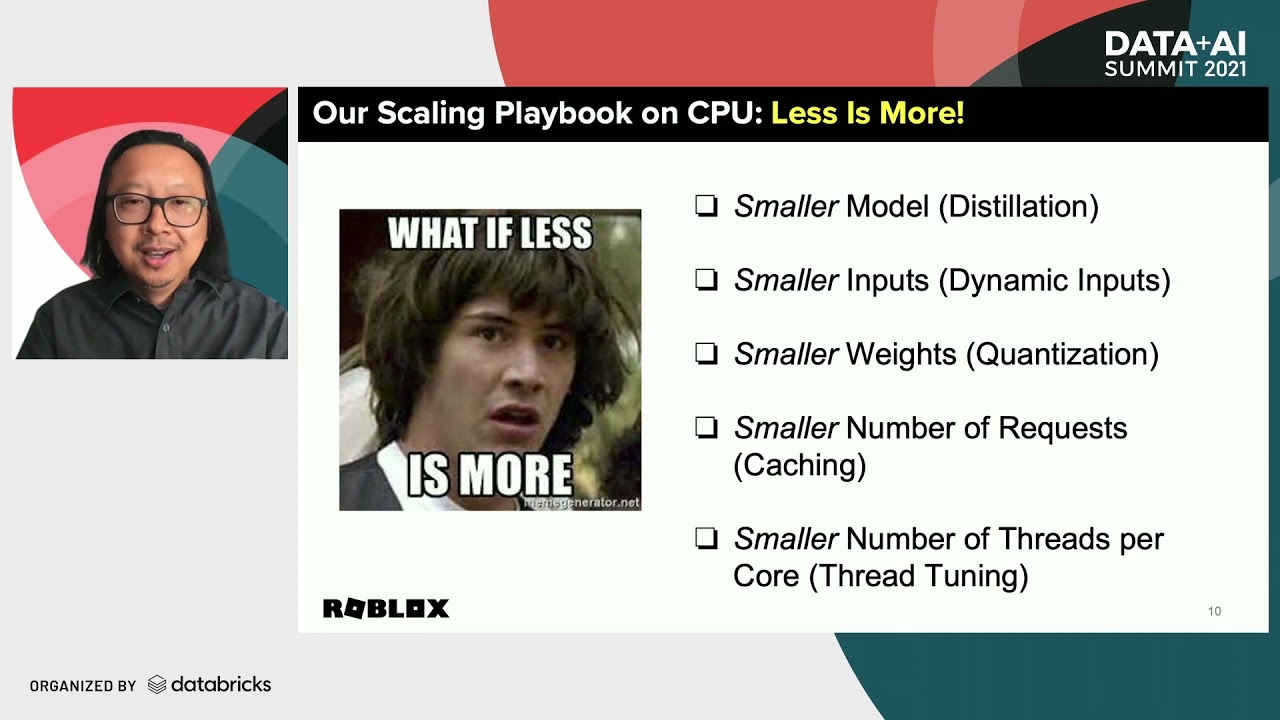

Roblox is a global online platform bringing millions of people together through play, with over 37 million daily active users and millions of games on the platform. Machine learning is a key part of our ability to scale important services to our massive community. In this talk, we share our journey of scaling our deep learning text classifiers to process 50k+ requests per second at latencies under 20 ms. We will share how we made BERT not only fast enough for our users, but also economical enough to run in production on CPU at a manageable cost.

How We Scaled Bert To Serve 1+ Billion Daily Requests on CPU (0:16:37)

Unveiling the Cleverness of BERT: Scaling the Power of Language Models (0:00:38)

Unlocking BERT: Scaling Intelligent Insights (0:00:17)

Scaling BERT and GPT for Financial Services with Jennifer Glore - #561 (0:44:19)

Should you switch from BERT to ALBERT? (0:18:36)

How to Compress Your BERT NLP Models For Very Efficient Inference (0:44:45)

Faster & More Accurate BERT Models on CPUs (0:42:35)

How ChatGPT Works Technically | ChatGPT Architecture (0:07:54)

GPT & BERT Decode DNA: AI's Next Frontier? 🧬🤖 (0:19:39)

BERT: one NLP model to rule them all (0:38:54)

Tutorial 5: BERT for Computational Social Scientists (1:00:16)

BERT Can See Out of the Box (0:11:50)

ZeRO & Fastest BERT: Increasing the scale and speed of deep learning training in DeepSpeed (1:05:10)

DeBERTa: Decoding-enhanced BERT with Disentangled Attention (Machine Learning Paper Explained) (0:45:14)

Natural Language Processing in Digital Content Webinar. Who’s BERT? (0:44:13)

How To Train BERT 15x Faster | NLP Summit 2020 (0:34:36)

On-mobile real-time question answering using BERT 1 (0:00:59)

Deep Learning for NLP Lecture 09 - Transformers and BERT (1:02:25)

Mike Lewis | Beyond BERT: Representation Learning for Natural Language at Scale (1:00:01)

Graphcore at NeurIPS 2019 – Processing Large-Scale NLP Model BERT on IPU (0:03:27)

Exploring German BERT model pre-training from scratch (0:09:23)

Bing is Now Utilizing BERT at a Larger Scale Than Google via @MattGSouthern (0:02:02)

Distilling BERT | Sam Sucik (0:22:19)

Gordon Gibson at Ada Inc- Testing and Deploying BERT at Scale (0:24:54)