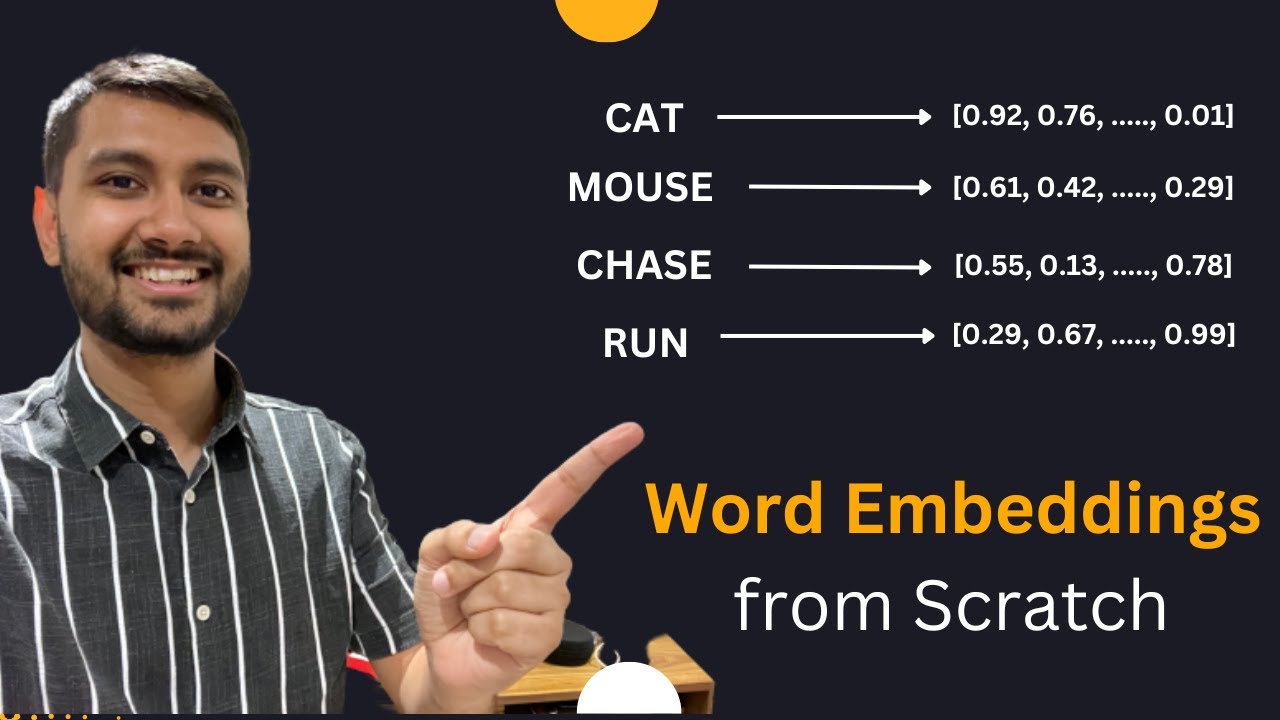

Word Embeddings from Scratch | Word2Vec

How do we represent a word in a way that ML models can understand? Word embeddings are the answer. There are many different ways of learning word embeddings, such as CBOW, Skip-gram, and GloVe.

In this video, we will build word2vec from scratch and train it on a small corpus.

Chapters:

0:00 Introduction

2:03 Distributional Hypothesis

3:51 Training data for Word2Vec

7:04 Model Architecture

10:59 Training word2vec

14:36 Visualising the Embeddings

17:22 Tips and Tricks for Word2Vec
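
For readers skimming the chapter list, here is a minimal sketch of what "training data for Word2Vec" typically looks like in the skip-gram setting: each word is paired with the words that fall inside a small window around it. The function name `skipgram_pairs`, the window size, and the toy sentence are assumptions made for illustration; they are not taken from the video, which may prepare its data differently.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for all tokens within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        # Clamp the window to the sentence boundaries.
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


# Hypothetical toy corpus, chosen only for illustration.
corpus = "the quick brown fox jumps".split()
pairs = skipgram_pairs(corpus, window=1)
for center, context in pairs:
    print(center, "->", context)
```

A skip-gram model is then trained to predict the context word from the center word. CBOW reverses the direction and predicts the center word from its surrounding context.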

#deeplearning #deeplearningtutorial #artificialintelligence #machinelearning #chatgpt #ai #ml #computerscience #decisiontrees #decisiontree #ml #ai #sklearn #machinelearningtutorial #machinelearningwithpython #machinelearningbasics #machinelearningalgorithm #scikitlearn #keras #tensorflow #pytorch #word2vec #skipgram #cbow #embeddings #wordembeddings #keras #python #python3 #pythonprogramming #pythonprojects #pythontutorial

Word Embedding and Word2Vec, Clearly Explained!!!

Word Embeddings from Scratch | Word2Vec

A Complete Overview of Word Embeddings

Vectoring Words (Word Embeddings) - Computerphile

Embeddings from Scratch!

Coding Word2Vec : Natural Language Processing

Part 2 | Python | Training Word Embeddings | Word2Vec |

Word Embedding in PyTorch + Lightning

Master RAG in 5 Hrs | RAG Introduction, Advanced Data Preparation, Advanced RAG Methods, GraphRAG

Word Embeddings || Embedding Layers || Quick Explained

TRAIN WORD EMBEDDINGS ON OWN DATASET. TENSORFLOW EMBEDDING. W2VEC MODEL FROM SCRATCH. NLP PRACTICE

Word Embeddings, Word2Vec And CBOW Indepth Intuition And Working- Part 1 | NLP For Machine Learning

OpenAI Embeddings and Vector Databases Crash Course

Pre Trained Word Embeddings | Word2Vect, GloVe

Training Your Own Word Embeddings With Keras

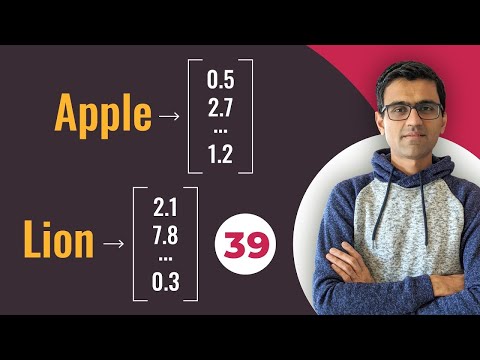

Converting words to numbers, Word Embeddings | Deep Learning Tutorial 39 (Tensorflow & Python)

What is Word2Vec? A Simple Explanation | Deep Learning Tutorial 41 (Tensorflow, Keras & Python)

Let's build GPT: from scratch, in code, spelled out.

How to train word embeddings using the WikiText2 dataset in PyTorch

Word2Vec : Natural Language Processing

Lecture 2 | Word Vector Representations: word2vec

Vector Embeddings Tutorial – Code Your Own AI Assistant with GPT-4 API + LangChain + NLP

12.1: What is word2vec? - Programming with Text

Word Embeddings - EXPLAINED!

Комментарии

0:16:12

0:16:12

0:19:55

0:19:55

0:17:17

0:17:17

0:16:56

0:16:56

0:18:00

0:18:00

0:07:59

0:07:59

0:24:28

0:24:28

0:32:02

0:32:02

4:40:32

4:40:32

0:02:09

0:02:09

0:23:05

0:23:05

0:47:45

0:47:45

0:18:41

0:18:41

0:07:57

0:07:57

1:04:38

1:04:38

0:11:32

0:11:32

0:18:28

0:18:28

1:56:20

1:56:20

0:29:52

0:29:52

0:13:17

0:13:17

1:18:17

1:18:17

0:36:23

0:36:23

0:10:20

0:10:20

0:10:06

0:10:06