filmov.tv

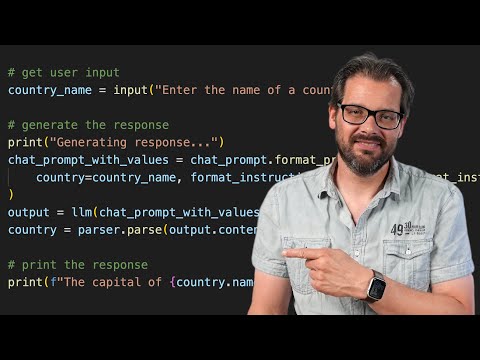

Python Langchain Tutorial: Use 3 Different LLMs in 10 Mins

Learn how to easily switch between LLMs in LangChain for your Python applications: OpenAI's GPT-4, Amazon Bedrock (Claude V2), and Google Gemini Pro.

Or if none of these suit your needs, you can also implement your own interface.
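Switching LLMs works because every provider sits behind one shared interface, so the application code never has to change. The sketch below illustrates that pattern in plain Python using only the standard library. It is not LangChain's API: `TextModel`, `EchoModel`, `UppercaseModel`, `MODEL_FACTORIES`, `get_model` and `summarize` are hypothetical names. The two toy models stand in for real providers such as GPT-4, Claude V2 on Bedrock, or Gemini Pro (whose LangChain wrappers share a common `invoke` method). `UppercaseModel` plays the role of the "implement your own interface" option.

```python
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface: anything with invoke(prompt) -> str."""

    def invoke(self, prompt: str) -> str: ...


class EchoModel:
    """Toy stand-in for a hosted provider (e.g. GPT-4); it just echoes the prompt."""

    def invoke(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Toy 'custom LLM': your own implementation of the same interface."""

    def invoke(self, prompt: str) -> str:
        return prompt.upper()


# Hypothetical registry mapping a config key to a model factory.
# Swapping providers means changing only the key, not the application code.
MODEL_FACTORIES: Dict[str, Callable[[], TextModel]] = {
    "echo": EchoModel,
    "custom": UppercaseModel,
}


def get_model(name: str) -> TextModel:
    """Build the model registered under `name`, or fail with a clear error."""
    try:
        return MODEL_FACTORIES[name]()
    except KeyError:
        raise ValueError(f"unknown model: {name!r}") from None


def summarize(model: TextModel, text: str) -> str:
    # Application logic depends only on the interface, never on a provider.
    return model.invoke(f"Summarize: {text}")


if __name__ == "__main__":
    for name in ("echo", "custom"):
        print(name, "->", summarize(get_model(name), "LangChain lets you swap LLMs"))
```

In a real LangChain app the registry values would be the provider wrapper classes, constructed with API keys and model names; that wiring is left out here because it depends on which packages and versions you install.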

📚 Chapters

00:00 Introduction

01:43 Getting Started

03:18 Creating the Base App

05:33 How To Use OpenAI GPT-4

07:42 How To Use Claude/Llama2 (via AWS)

09:33 How To Use Google Gemini Pro

10:27 Custom LLMs

👉 Links

Related videos

Python Langchain Tutorial: Use 3 Different LLMs in 10 Mins (11:35)

LangChain is AMAZING | Quick Python Tutorial (17:42)

LangChain Explained In 15 Minutes - A MUST Learn For Python Programmers (15:39)

Prompt Template in Langchain | Complete Python LangChain Course for Beginners | Tutorial 3 (6:28)

LangChain Basics Tutorial #2 Tools and Chains (17:42)

Python RAG Tutorial (with Local LLMs): AI For Your PDFs (21:33)

RAG + Langchain Python Project: Easy AI/Chat For Your Docs (16:42)

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer (2:33:11)

build voice driven email assistant using Langchain|Tutorial:108 (54:08)

Python AI Agent Tutorial - Build a Coding Assistant w/ RAG & LangChain (48:32)

Chat with Multiple PDFs | LangChain App Tutorial in Python (Free LLMs and Embeddings) (1:07:30)

LLM Chains using GPT 3.5 and other LLMs — LangChain #3 (16:38)

LangChain explained - The hottest new Python framework (3:03)

How to Build a Free ChatBot Using LLAMA-3 || Langchain || Python (39:31)

LangChain - Using Hugging Face Models locally (code walkthrough) (10:22)

Example of an AI Application in Python, LangChain and ChatGPT (OpenAI API) (15:38)

Chat with MySQL Database with Python | LangChain Tutorial (37:11)

Using ChatGPT with YOUR OWN Data. This is magical. (LangChain OpenAI API) (16:29)

LangChain In Action: Real-World Use Case With Step-by-Step Tutorial (12:17)

Hugging Face + Langchain in 5 mins | Access 200k+ FREE AI models for your AI apps (9:48)

AI Secretary Agent built with Python/Langchain and 3 powerful AI Prompts | Full Tutorial (11:25)

LangChain Tutorial (Python) #3: Output Parsers (String, List, JSON) (12:51)

LangChain Tutorial (Python) #1: Intro & Setup (9:58)

Build Your Own Auto-GPT Apps with LangChain (Python Tutorial) (29:44)